In response to:

Consciousness & the Philosophers from the March 6, 1997 issue

To the Editors:

In my book The Conscious Mind, I deny a number of claims that John Searle finds “obvious,” and I make some claims that he finds “absurd” [NYR, March 6]. But if the mind-body problem has taught us anything, it is that nothing about consciousness is obvious, and that one person’s obvious truth is another person’s absurdity. So instead of throwing around this sort of language, it is best to examine the claims themselves and the arguments that I give for them, to see whether Searle says anything of substance that touches them.

The first is my claim that consciousness is a nonphysical feature of the world. I resisted this claim for a long time, before concluding that it is forced on one by a sound argument. The argument is complex, but the basic idea is simple: the physical structure of the world—the exact distribution of particles, fields, and forces in spacetime—is logically consistent with the absence of consciousness, so the presence of consciousness is a further fact about our world. Searle says this argument is “invalid”: he suggests that the physical structure of the world is equally consistent with the addition of flying pigs, but that it does not follow that flying is nonphysical.

Here Searle makes two elementary mistakes. First, he gets the form of the argument wrong. To show that flying is nonphysical, we would need to show that the world’s physical structure is consistent with the absence of flying. From the fact that one can add flying pigs to the world, nothing follows. Second, the scenario he describes is not consistent. A world with flying pigs would have a lot of extra matter hovering meters above the earth, for example, so it could not possibly have the same physical structure as ours. Putting these points together: the idea of a world physically identical to ours but without flying, or without pigs, or without rocks, is self-contradictory. But there is no contradiction in the idea of a world physically identical to ours without consciousness, as Searle himself admits.

The underlying point is that the position of pigs—and almost everything else about the world—is logically derivable from the world’s physical structure, but the presence of consciousness is not. So to explain why and how brains support consciousness, an account of the brain alone is not enough; to bridge the gap, one needs to add independent “bridging” laws. One can resist this conclusion only by adopting a hard-line deflationism about consciousness. That path has its own problems, but in any case it is not open to Searle, who holds that consciousness is irreducible. Irreducibility has its consequences. Consistency requires that one face them directly.

The next issue is my nonreductive functionalism. This bridging law claims that systems with the same functional organization have the same sort of conscious experiences. My detailed argument for this claim is not recognizable in the trivial argument that Searle presents as mine and rebuts. The basic idea, presented in Chapter Seven of the book but ignored by Searle, is that if the claim is false, then there can be massive changes in conscious experience which a subject can never notice. (Searle’s own position is rebutted on p. 258.) He also points to patients with Guillain-Barre syndrome as a counterexample to my claim, but this once again gets the logic wrong. My claim concerns functionally identical beings, so it is irrelevant to point to people who function differently. I certainly do not claim that beings whose functioning differs from ours are unconscious.

The final issue is panpsychism: the claim that some degree of consciousness is associated with every system in the natural world. Here Searle misstates my view: he says that I am “explicitly committed” to this position, when I merely explore it and remain agnostic; and he says incorrectly that it is an implication of property dualism and nonreductive functionalism. One can quite consistently embrace those views and reject panpsychism, so the latter could not possibly function as a “reductio ad absurdum” of the former. I note also that the view which I describe as “strangely beautiful,” and which Searle describes as “strangely self-indulgent,” is a view I reject.

I do argue that panpsychism is not as unreasonable as is often supposed, and that there is no knockdown argument against it. Searle helps confirm the latter claim: while protesting “absurdity,” his arguments against panpsychism have no substance. He declares that to be conscious, a system must have the right “causal powers,” which turn out to be the powers to produce consciousness: true, but trivial and entirely unhelpful. And he says that simple systems (such as thermostats) do not have the “structure” required for consciousness; but this is precisely the claim at issue, and he provides no argument to support it (if we knew what sort of structure were required for consciousness, the mind-body problem would be half-solved). So we are left where we started. Panpsychism remains counterintuitive, but it cannot be ruled out at the start of inquiry.

In place of substantive arguments, Searle provides gut reactions: every time he disagrees with a view I discuss, he calls it “absurd.” In the case of panpsychism (a view not endorsed by me), many might agree. In other cases, the word is devalued: it is not even surprising, for example, that mental terms such as “perception” are ambiguous between a process and a subjective experience; and given that a trillion interacting neurons can result in consciousness, there is no special absurdity in the idea that a trillion interacting silicon chips or humans might do the same. I do bite one bullet, in accepting that brain-based explanations of behavior can be given that do not invoke or imply consciousness (although this is not to say that consciousness is causally irrelevant). But Searle’s own view on irreducibility would commit him to this view too, if he could only draw the implication.

Once we factor out mistakes, misrepresentations, and gut feelings, we are left with not much more than Searle’s all-purpose critique: “the brain causes consciousness.” Although this mantra (repeated at least ten times) is apparently intended as a source of great wisdom, it settles almost nothing that is at issue. It is entirely compatible with all of my views: we just need to distinguish cause from effect, and to note that it does not imply that only the brain causes consciousness. Indeed, Searle’s claim is simply a statement of the problem, not a solution. If one accepts it, the real questions are: Why does the brain cause consciousness? In virtue of which of its properties? What are the relevant causal laws? Searle has nothing to say about these questions. A real answer requires a theory: not just a theory of the brain, but also a detailed theory of the laws that bridge brain and consciousness. Without fulfilling this project, on which I make a start in my book, our understanding of consciousness will always remain at a primitive level.

(Further remarks and a further reply to Searle are at http://ling.ucsc.edu/~chalmers/nyrb/.)

David Chalmers

University of California, Santa Cruz

John Searle replies:

I am grateful that David Chalmers has replied to my review of his book, and I will try to answer every substantive point he makes. In my review, I pointed out that his combination of property dualism and functionalism led him to some “implausible” consequences. I did not claim, as he thinks, that these consequences are logically implied by his joint acceptance of property dualism and functionalism; rather I said that when he worked out the details of his position these views emerged. Property dualism is the view that there are two metaphysically distinct kinds of properties in the world, mental and physical. Functionalism is the view that the mental states of a “system,” whether human, machine, or otherwise, consist in physical functional states of that system; and functional states are defined in terms of sets of causal relations.

Here are four of his claims that I found unacceptable:

1. Chalmers thinks each of the psychological words, “pain,” “belief,” etc., has two completely independent meanings, one where it refers to nonconscious functional processes, and one where it refers to states of consciousness.

2. Consciousness is explanatorily irrelevant to anything physical that happens in the world. If you think you are reading this because you consciously want to read it, Chalmers says you are mistaken. Physical events can have only physical explanations, so consciousness plays no explanatory role whatever in your behavior or anyone else’s.

3. Even your own claims about your own consciousness are not explained by consciousness. If you say “I am in pain,” when you are in pain, it cannot be because you are in pain that you said it.

4. Consciousness is everywhere. Everything in the universe is conscious.

This view is called “panpsychism,” and is the view that I characterized as “absurd.”

Now, what has Chalmers to say about these points in his reply? He says that my opposition to these views is just a “gut reaction” without argument. Well, I tried to make my arguments clear, and in any case for views as implausible as these I believe the onus of proof is on him. But just to make my position as clear as possible let me state my arguments precisely:

1. As a native speaker of English, I know what these words mean, and there is no meaning of the word “pain,” for example, where for every conscious pain in the world there must be a correlated nonconscious functional state which is also called “pain.” On the standard dictionary definition, “pain” means unpleasant sensation, and that definition agrees with my usage as well as that of my fellow English speakers. He thinks otherwise. The onus is surely on him to prove his claim.

2. If we know anything about how human psychology works, we know that conscious desires, inclinations, preferences, etc., affect human behavior. I frequently drink, for example, because I am thirsty. If you get a philosophical result that is inconsistent with this fact, as he does, you had better go back and examine your premises. Of course, it is conceivable that science might show that we are mistaken about this, but to do so would require a major scientific revolution and such a revolution could not be established by the armchair theorizing in which he engages.

3. In my own case, when I am in pain, I sometimes say “I am in pain,” precisely because I am in pain. I experience all three: the pain, my reporting the pain, and my reporting it for the reason that I have it. These are just facts about my own experiences. I take it other people’s experiences are not all that different from mine. What sort of arguments does he want in addition to these plain facts? Once again, if you get a result that denies this, then you had better go back and look at your premises. His conclusions are best understood not as the wonderful discoveries he thinks; rather each is a reductio ad absurdum of his premises.

4. What about panpsychism, his view that consciousness is in rocks, thermostats, and electrons (his examples), indeed everywhere? I am not sure what he expects as an argument against this view. The only thing one can say is that we know too much about how the world works to take this view seriously as a scientific hypothesis. Does he want me to tell him what we know? Perhaps he does.

We know that human and some animal brains are conscious. Those living systems with certain sorts of nervous systems are the only systems in the world that we know for a fact are conscious. We also know that consciousness in these systems is caused by quite specific neurobiological processes. We do not know the details of how the brain does it, but we know, for example, that if you interfere with the processes in certain ways—general anesthetic, or a blow to the head, for example—the patient becomes unconscious and if you get some of the brain processes going again the patient regains consciousness. The processes are causal processes. They cause consciousness. Now, for someone seriously interested in how the world actually works, thermostats, rocks, and electrons are not even candidates to have anything remotely like these processes, or to have any processes capable of having equivalent causal powers to the specific features of neurobiology. Of course as a science fiction fantasy we can imagine conscious thermostats, but science fiction is not science. And it is not philosophy either.

The most astonishing thing in Chalmers’s letter is the claim that he did not “endorse” panpsychism, that he is “agnostic” about it. Well, in his book he presents extensive arguments for it and defenses of it. Here is what he actually says. First, he tells us that “consciousness arises from functional organization” (p. 249). And what is it about functional organization that does the job? It is, he tells us, “information” in his special sense of that word according to which everything in the world has information in it. “We might put this by suggesting as a basic principle that information (in the actual world) has two aspects, a physical and a phenomenal aspect” (p. 286). The closest he gets to agnosticism is this: “I do not have any knockdown arguments to prove that information is the key to the link between physical processes and conscious experience,” but he immediately adds, “but there are some indirect ways of giving support to the idea.” Whereupon he gives us several arguments for the double aspect principle (p. 287). He hasn’t proven panpsychism, but it is a hypothesis he thinks is well supported. Since information in his sense is everywhere then consciousness is everywhere. Taken together his premises imply panpsychism. If he argues that functional organization gives rise to consciousness, that it does so in virtue of information, that anything that has information would be conscious and that everything has information, then he is arguing for the view that everything is conscious, by any logic that I am aware of.

And if he is not supporting panpsychism, then why does he devote an entire section of a chapter to “What is it like to be a thermostat?” in which he describes the conscious life of thermostats?

At least part of the section is worth quoting in full:

Certainly it will not be very interesting to be a thermostat. The information processing is so simple that we should expect the corresponding phenomenal states to be equally simple. There will be three primitively different phenomenal states, with no further structure. Perhaps we can think of these states by analogy to our experiences of black, white, and gray: a thermostat can have an all-black phenomenal field, an all-white field, or an all-gray field. But even this is to impute far too much structure to the thermostat’s experiences, by suggesting the dimensionality of a visual field and the relatively rich natures of black, white, and gray. We should really expect something much simpler, for which there is no analog in our experience. We will likely be unable to sympathetically imagine these experiences any better than a blind person can imagine sight, or than a human can imagine what it is like to be a bat; but we can at least intellectually know something about their basic structure.

He then goes on to try to make the conscious life of thermostats more plausible by comparing our inability to appreciate thermostats with our difficulty in understanding the consciousness of animals. And two pages later he adds,

But thermostats are really no different from brains here.

What are we to make of his analogy between the consciousness of animals and the consciousness of thermostats? I do not believe that anyone who writes such prose can be serious about the results of neurobiology. In any case they do not exhibit an attitude that is “agnostic” about whether thermostats are conscious. And, according to him, if thermostats are conscious then everything is.

Chalmers genuinely was agnostic about the view that the whole universe consists of little bits of consciousness. He put this forward as a “strangely beautiful” possibility to be taken seriously. Now he tells us it is a view he “rejects.” He did not reject it in his book.

The general strategy of Chalmers’s book is to present his several basic premises, most importantly, property dualism and functionalism, and then draw the consequences I have described. When he gets such absurd consequences, he thinks they must be true because they follow from the premises. I am suggesting that his conclusions cast doubt on the premises, which are, in any case, insufficiently established. So, let’s go back and look at his argument for two of his most important premises, “property dualism” and “nonreductive functionalism,” the two he mentions in his letter.

In his argument for property dualism, he says, correctly, that you can imagine a world that has the same physical features that our world has—but minus consciousness. Quite so, but in order to imagine such a world, you have to imagine a change in the laws of nature, a change in those laws by which physics and biology cause and realize consciousness. But then, I argued, if you are allowed to mess around with the laws of nature, you can make the same point about flying pigs. If I am allowed to imagine a change in the laws of nature, then I can imagine the laws of nature changed so pigs can fly. He points out, again correctly, that that would involve a change in the distribution of physical features, because now pigs would be up in the air. But my answer to that, which I apparently failed to make clear, is that if consciousness is a physical feature of brains, then the absence of consciousness is also a change in the physical features of the world. That is, his argument works to establish property dualism only if it assumes that consciousness is not a physical feature, but that is what the argument was supposed to prove. From the facts of nature, including the laws, you can derive logically that this brain must be conscious. From the facts of nature, including the laws, you can derive that this pig can’t fly. The two cases are parallel. The real difference is that consciousness is irreducible. But irreducibility by itself is not a proof of property dualism.

Now I turn to his argument for “non-reductive functionalism.” The argument is that systems with the same nonconscious functional organization must have the same sort of conscious experiences. But the argument that he gives for this in his book and repeats in his reply begs the question. Here is how he summarizes it:

If there were not a perfect match between conscious experiences and functional organization, then there could be massive changes in someone’s conscious experiences, which the subject having those experiences would never notice.

But in this last statement, the word “notice” is not used in the conscious sense of “notice.” It refers to the noticing behavior of a nonconscious functional organization. Remember for Chalmers all these words have two meanings, one implying consciousness, one nonconscious functional organization. Thus, the argument begs the question by assuming that radical changes in a person’s consciousness, including his conscious noticing, must be matched by changes in the nonconscious functional organization that produces noticing behavior. But that is precisely the point at issue. What the argument has to show, if it is going to work, is that there must be a perfect match between an agent’s inner experiences and his external behavior together with the “functional organization” of which the behavior is a part. But he gives no argument for this. The only argument is the question-begging: there must be such a match because otherwise there wouldn’t be a match between the external noticing part of the match and the internal experience.

He says incorrectly that the Guillain-Barre patients who have consciousness but the wrong functional organization are irrelevant, because they “function differently.” But differently from what? As far as their physical behavior is concerned they function exactly like people who are totally unconscious, and thus on his definition they have exactly the same “functional organization” as unconscious people, even though they are perfectly conscious. Remember “functional organization” for him is always nonconscious. The Guillain-Barre patients have the same functional organization, but different consciousness. Therefore there is no perfect match between functional organization and consciousness. Q.E.D.

Chalmers resents the fact that I frequently try to remind him that brains cause consciousness, a claim he calls a “mantra.” But I don’t think he has fully appreciated its significance. Consciousness is above all a biological phenomenon, like digestion or photosynthesis. This is just a fact of nature that has to be respected by any philosophical account. Of course, in principle we might build a conscious machine out of nonbiological materials. If we can build an artificial heart that pumps blood, why not an artificial brain that causes consciousness? But the essential step in the project of understanding consciousness and creating it artificially is to figure out in detail how the brain does it as a specific biological process in real life. Initially, at least, the answer will have to be given in terms like “synapse,” “peptides,” “ion channels,” “40 Hertz,” “neuronal maps,” etc., because those are real features of the real mechanism we are studying. Later on we might discover more general principles that permit us to abstract away from the biology.

But, and this is the point, Chalmers’s candidates for explaining consciousness, “functional organization” and “information,” are nonstarters, because as he uses them, they have no causal explanatory power. To the extent that you make the function and the information specific, they exist only relative to observers and interpreters. Something is a thermostat only to someone who can interpret it or use it as such. The tree rings are information about the age of the tree only to someone capable of interpreting them. If you strip away the observers and interpreters then the notions become empty, because now everything has information in it and has some sort of “functional organization” or other. The problem is not just that Chalmers fails to give us any reason to suppose that the specific mechanisms by which brains cause consciousness could be explained by “functional organization” and “information,” rather he couldn’t. As he uses them, these are empty buzzwords. For all its ingenuity I am afraid his book is really no help in the project of understanding how the brain causes consciousness.

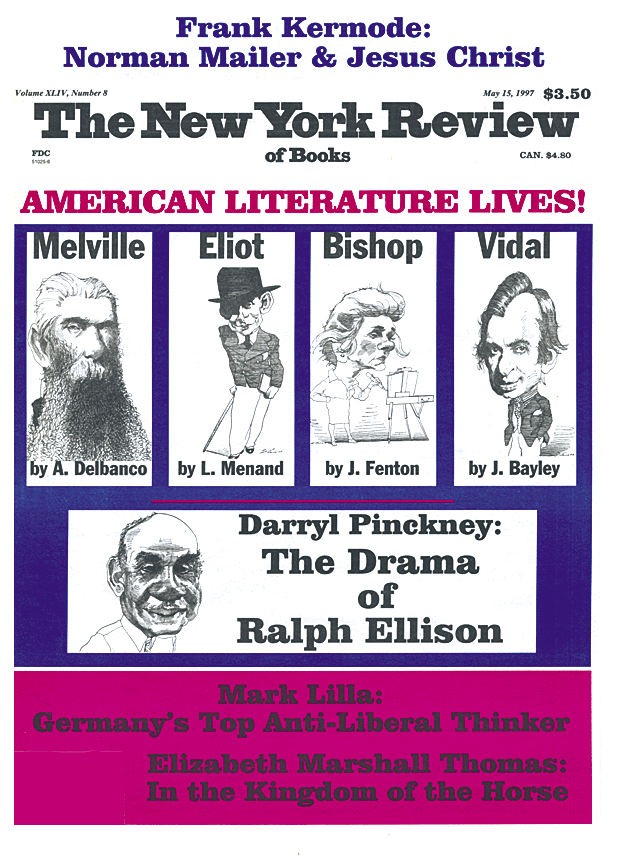

This Issue

May 15, 1997