Fifty years ago no one could confidently have predicted the geopolitical landscape of today. And scientific forecasting is just as hazardous. Three of today’s most remarkable technologies had their gestation in the 1950s. But nobody could then have guessed how pervasively they would shape our lives. It was in 1958 that Jack Kilby of Texas Instruments and Robert Noyce of Fairchild Semiconductor built the first integrated circuit—the precursor of today’s ubiquitous silicon chips, each containing literally billions of microscopic circuit elements. This was perhaps the most transformative single invention of the past century. A second technology with huge potential began in Cambridge in the 1950s, when James Watson and Francis Crick discovered the bedrock mechanism of heredity—the famous double helix. This discovery launched the science of molecular biology, opening exciting prospects in genomics and synthetic biology.

And it’s just over fifty years since the launch of Sputnik. This event started the “space race,” and led President Kennedy to inaugurate the program to land men on the moon. Kennedy’s prime motive was of course superpower rivalry—cynics could deride it as a stunt. But it was an extraordinary technical triumph—especially as NASA’s total computing power then was far less than that of a single mobile phone today. And it had an inspirational aspect too: it offered a new perspective on our planet. Distant images of earth—its delicate biosphere of clouds, land, and oceans contrasting with the sterile moonscape where the astronauts left their footprints—have, ever since the 1960s, been iconic for environmentalists.

To young people today, however, this is ancient history: they know that the Americans went to the moon, just as they know the Egyptians built pyramids, but the motives for these two enterprises may seem equally baffling. There was no real follow-up after Apollo: there is no practical or scientific motive adequate to justify the huge expense of NASA-style manned spaceflight, and it has lost its glamour. But unmanned space technology has flourished, giving us GPS, global communications, environmental monitoring, and other everyday benefits, as well as an immense scientific yield. There is, of course, a dark side: the initial motivation for space technology was to provide missiles to carry nuclear weapons. And those weapons were themselves the outcome of a huge enterprise, the Manhattan Project, that was even more intense and focused than the Apollo program.

Soon after World War II, some physicists who had been involved in the Manhattan Project founded a journal called the Bulletin of the Atomic Scientists, aimed at promoting arms control. The logo on the Bulletin’s cover is a clock, the closeness of whose hands to midnight indicates the Editorial Board’s judgment on how precarious the world situation is. Every year or two, the minute hand is shifted, either forward or backward.

It was closest to midnight at the time of the Cuban missile crisis. Robert McNamara spoke frankly about that episode in Errol Morris’s documentary The Fog of War. He said that “we came within a hairbreadth of nuclear war without realizing it. It’s no credit to us that we escaped—Khrushchev and Kennedy were lucky as well as wise.” Indeed on several occasions during the cold war the superpowers could have stumbled toward Armageddon.

When the cold war ended, the Bulletin’s clock was put back to seventeen minutes to midnight. There is now far less risk of tens of thousands of H-bombs devastating our civilization. The greatest peril to confront the world from the 1950s to the 1980s—massive nuclear annihilation—has diminished. But the clock has been creeping forward again. There is increasing concern about nuclear proliferation, and about nuclear weapons being deployed in a localized conflict. And al-Qaeda-style terrorists might some day acquire a nuclear weapon. If they did, they could willingly detonate it in a city, killing tens of thousands along with themselves, and millions would acclaim them as heroes.

And the threat of a global nuclear catastrophe could be merely in temporary abeyance. During this century, geopolitical realignments could be as drastic as those during the last century, and could lead to a nuclear standoff between new superpowers that might be handled less well—or less luckily—than the Cuban crisis was.

The nuclear age inaugurated an era when humans could threaten the entire earth’s future—what some have called the “anthropocene” era. We’ll never be completely rid of the nuclear threat. But the twenty-first century confronts us with new perils as grave as the bomb. They may not threaten a sudden worldwide catastrophe—the doomsday clock is not such a good metaphor—but they are, in aggregate, worrying and challenging.

Energy and Climate

High on the global agenda are energy supply and energy security. These are crucial for economic and political stability, and linked of course to the grave issue of long-term climate change.

Human actions—mainly the burning of fossil fuels—have already raised the carbon dioxide concentration in the atmosphere higher than it’s ever been in the last half-million years. Moreover, according to projections based on “business as usual” practices—i.e., the continued growth of carbon emissions at current rates—atmospheric CO2 will reach twice the pre-industrial level by 2050, and three times that level later in the century. This much is entirely uncontroversial. Nor is there significant doubt that CO2 is a greenhouse gas, and that the higher its concentration rises, the greater the warming—and, more important still, the greater the chance of triggering something grave and irreversible: rising sea levels due to the melting of Greenland’s icecap, runaway greenhouse warming due to release of methane in the tundra, and so forth.

There is a substantial uncertainty in just how sensitive the temperature is to the level of CO2 in the atmosphere. The climate models can, however, assess the likelihood of a range of temperature rises. It is the “high-end tail” of the probability distribution that should worry us most—the small probability of a really drastic climatic shift. Climate scientists now aim to refine their calculations, and to address questions like: Where will the flood risks be concentrated? What parts of Africa will suffer severest drought? Where will the worst hurricanes strike?

The “headline figures” that the climate modelers quote—a 2, 3, or 5 degrees Centigrade rise in the mean global temperature—might seem too small to fuss about. But two comments should put them into perspective. First, even in the depth of the last ice age the mean global temperature was lower by just 5 degrees. Second, these predictions do not imply a uniform warming: the land warms more than the sea, and high latitudes more than low. Quoting a single figure glosses over shifts in global weather patterns that will be more drastic in some regions than in others, and could involve relatively sudden “flips” rather than steady changes. Nations can adapt to some of the adverse effects of warming. But the most vulnerable people—in, for instance, Africa or Bangladesh—are the least able to adapt.

The science of climate change is intricate. But it’s straightforward compared to the economics and politics. Global warming poses a unique political challenge for two reasons. First, the effect is nonlocalized: British CO2 emissions have no more effect in Britain than they do in Australia, and vice versa. That means that any credible regime whereby the “polluter pays” has to be broadly international.

Second, there are long time-lags—it takes decades for the oceans to adjust to a new equilibrium, and centuries for ice sheets to melt completely. So the main downsides of global warming lie a century or more in the future. Concepts of intergenerational justice then come into play: How should we rate the rights and interests of future generations compared to our own? What discount rate should we apply?

In his influential 2006 report for the UK government, Nicholas Stern argued that equity to future generations renders a “commercial” discount rate quite inappropriate. Largely on that basis he argues that we should commit substantial resources now, to preempt much greater costs in future decades.
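
To see how much hangs on the choice of discount rate, here is a minimal sketch of the standard present-value formula, in which a cost C incurred t years from now is valued today at C / (1 + r)^t. The future cost of 1,000 units, the hundred-year horizon, and the three rates are hypothetical values chosen for illustration; they are not figures from the Stern report.

```python
def present_value(future_cost, annual_rate, years):
    """Value today of a cost incurred `years` from now, discounted at `annual_rate`."""
    return future_cost / (1.0 + annual_rate) ** years


# Hypothetical inputs for illustration only (not taken from the Stern report).
FUTURE_COST = 1_000.0   # damage in arbitrary units
YEARS_AHEAD = 100       # roughly "when our grandchildren grow old"
RATES = (0.0, 0.01, 0.05)

for rate in RATES:
    value = present_value(FUTURE_COST, rate, YEARS_AHEAD)
    print(f"discount rate {rate:.0%}: present value {value:.1f}")
```

At a zero rate the future cost counts in full. At 5 percent a year it shrinks to less than 1 percent of its face value, which is why a “commercial” rate makes almost any harm a century away look negligible today.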

There are of course precedents for long-term altruism. Indeed, in discussing the safe disposal of nuclear waste, experts talk with a straight face about what might happen more than 10,000 years from now, thereby implicitly applying a zero discount rate. To concern ourselves with such a remote “post-human” era might seem bizarre. But all of us can surely empathize at least a century ahead. Especially in Europe, we’re mindful of the heritage we owe to centuries past; history will judge us harshly if we discount too heavily what might happen when our grandchildren grow old.

To ensure a better-than-even chance of avoiding a potentially dangerous “tipping point,” global CO2 emissions must, by 2050, be brought down to half the 1990 level. This is the target espoused by the G8. It corresponds to two tons of CO2 per year from each person on the planet. For comparison, current CO2 emissions for the US are about twenty tons per person, the average European figure is about ten, and the Chinese level is already four. To achieve this target without stifling economic growth—to turn around the curve of CO2 emissions well before 2050—is a huge challenge. The debates during the July meeting of the G8 in Japan indicated the problems—especially how to get the cooperation of India and China. The great emerging economies have so far made minor contributions to the atmospheric “stock” of CO2, but if they develop in as carbon-intensive a way as ours did, they could swamp and negate any measures taken by the G8 alone.
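
The scale of the cuts implied by these per-person figures can be checked with simple arithmetic. The sketch below uses only the numbers quoted above; it assumes, purely for illustration, that every region converges on the same two-ton allowance per person.

```python
TARGET_TONS_PER_PERSON = 2.0  # G8 target expressed per person, as quoted above

# Current annual CO2 emissions per person, in tons, as quoted above.
CURRENT_TONS_PER_PERSON = {"US": 20.0, "Europe": 10.0, "China": 4.0}


def required_cut(current, target=TARGET_TONS_PER_PERSON):
    """Fraction by which `current` per-person emissions must fall to reach `target`."""
    return 1.0 - target / current


cuts = {region: required_cut(tons) for region, tons in CURRENT_TONS_PER_PERSON.items()}

for region, cut in cuts.items():
    print(f"{region}: reduction of about {cut:.0%}")
```

On this simplified reckoning the US would need to cut per-person emissions by about nine tenths, Europe by about four fifths, and China by about half.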

Realistically, however, there is no chance of reaching this target, or of achieving real energy security, without drastically new technologies. I’m confident that these will have emerged by the second half of the century; the worry is that this may not be soon enough. Efforts to develop a whole raft of techniques for economizing on energy, storing it, and generating it by “clean” or low-carbon methods deserve a priority and commitment from governments akin to that accorded to the Manhattan Project or the Apollo moon landing. Current R&D falls far short of what the scale and urgency of the problem require.1 To speed things up, we need a “shotgun approach”—trying all the options. And we can afford it: the stakes are colossal. The world spends around $7 trillion per year on energy and its infrastructure. The US imports $500 billion worth of oil each year.

I can’t think of anything that could do more to attract the brightest and best into science than a strongly proclaimed commitment—led by the US and Europe—to provide clean and sustainable energy for the developing and the developed world. Even optimists about prospects in solar energy, advanced biofuels, fusion, and other renewables have to acknowledge that it will be at least forty years before they can fully “take over.” Coal, oil, and gas seem set to dominate the world’s ever-growing energy needs for at least that long. Last year the Chinese built a hundred coal-fired power stations. Coal deposits representing a million years’ accumulation of primeval forest are now being burned in a single year.

Coal is the most “inefficient” fossil fuel in terms of energy generated per unit of carbon released. Annual CO2 emissions are rising year by year. Unless this rising curve can be turned around sooner, the atmospheric concentration will irrevocably reach a threatening level.

So an immediate priority has to be a coordinated international effort to develop carbon capture and storage, or CCS. Carbon from power stations must be captured before it escapes into the atmosphere; and then piped to some geological formation where it can be stored without leaking out. It’s crucial to agree on a timetable and a coordinated plan for the construction of CCS demonstration plants to explore all variants of the technology. To jump-start such a program would need up to $10 billion a year of public funding worldwide (preferably as part of public-private partnerships). But this is a small price to pay for bringing forward, by five years or more, the time when CCS can be widely adopted and the graph of CO2 emissions turned around.

What is the role of nuclear power in all this? The concerns are well known—it is an issue where expert and lay opinions are equally divided. I’m myself in favor of the UK and the US having at least a replacement generation of power stations—and of R&D into new kinds of reactors. But the nonproliferation regime is fragile, and before being relaxed about a worldwide program of nuclear power, one would surely require the kind of fuel bank and leasing arrangement that has been proposed by Mohamed ElBaradei at the International Atomic Energy Agency (IAEA). According to this plan, the IAEA would establish an international store of enriched uranium that would be supplied to countries pursuing civilian nuclear power, provided that those countries agree not to develop facilities for enrichment.

Natural Resources and Population

Energy security and climate change are the prime “threats without enemies” that confront us. But there are others. High among these is the threat to biological diversity caused by rapid changes in land use and deforestation. There have been five great extinctions in the geological past; human actions are causing a sixth. The extinction rate is 1,000 times higher than it was before humans came on the scene, and it is increasing. We are destroying the book of life before we have read it.

Biodiversity—manifested in forests, coral reefs, blue marine waters, and all Earth’s other ecosystems—is often proclaimed as a crucial component of human well-being and economic growth. It manifestly is: we’re clearly harmed if fish stocks dwindle to extinction; there are plants whose gene pool might be useful to us. And massive destruction of the rain forests would accelerate global warming. But for environmentalists these “instrumental”—and anthropocentric—arguments aren’t the only compelling ones. For them, preserving the richness of our biosphere has value in its own right, over and above what it means to us humans.

Population growth, of course, aggravates all pressures on energy and environment. Fifty years ago the world population was below three billion. It has more than doubled since then, to 6.7 billion. The percentage growth-rate has slowed, but the global figure is projected to reach eight or even nine billion by 2050. The predicted rise will occur almost entirely in the developing world.

There is, as well, a global trend from rural toward urban living. More than half the world’s population is now urban—and megacities are growing explosively. There is an extensive literature on the “carrying capacity” of our planet—on how many people it can sustain without irreversible degradation. The answer of course depends on lifestyle. The world could not sustain its present population if everyone lived like present-day Americans or Europeans. On the other hand, the pressures would plainly be eased if people traveled little and interacted via super-Internet and virtual reality. And, incidentally, if they were all vegetarians: it takes thirteen pounds of grain to make one pound of beef.

If population growth continues even beyond 2050, one can’t be other than exceedingly gloomy about the prospects. However, there could be a turnaround. There are now more than sixty countries in which fertility is below replacement level—it’s far below in, for instance, Italy and Singapore. In Iran the fertility rate has fallen from 6.5 in 1980 to 2.1 today. We all know the social trends that lead to this demographic transition—declining infant mortality, availability of contraceptive advice, women’s education, and so forth. If the transition quickly extended to all countries, then the global population could start a gradual decline after 2050—a development that would surely be benign.

There is, incidentally, one “wild card” in all these long-term forecasts. This is the possibility that the average lifespan in advanced countries may be extended drastically by some biomedical breakthrough.

The prognosis is especially bleak in Africa, where there could be a billion more people in 2050 than there are today. It’s worth quoting some numbers here. A hundred years ago, the population of Ethiopia was 5 million. It is now 75 million (of whom 8 million need permanent food aid) and will almost double by 2050. Quite apart from the problem of providing services, there is consequent pressure on the water resources of the Nile basin.

Over two hundred years ago, Thomas Malthus famously argued that populations would rise until limited by food shortages. His gloomy prognosis has been forestalled by advancing technology, the green revolution, and so forth, but he could be tragically vindicated in Africa. Continuing population growth makes it harder to break out of the poverty trap—Africa not only needs more food, but a million more teachers annually, just to maintain current standards. And just as today’s population couldn’t be fed by yesterday’s agriculture, a second green revolution may be needed to feed tomorrow’s population.

But the rich world has the resources, if the will is there, to enhance the life chances of the world’s billion poorest people—relieving the most extreme poverty, providing clean water, primary education, and other basics. This is a precondition of achieving in Africa the demographic shift that has occurred elsewhere. The annual amount of foreign aid from most countries, including the US, is far below the UN’s target of 0.7 percent of GNP that was set out in the Millennium Declaration in 2000. It would surely be shameful, as well as against even our narrow self-interests, if the Millennium goals set for 2015 were not met.

(To inject a pessimistic note in parentheses, the underfunding of foreign aid, even in situations where the humanitarian imperative seems so clear, augurs badly for the actual implementation of the measures needed to meet the 2050 carbon emission targets—generally estimated to cost around 1 percent of GNP—where the payoff is less immediately apparent.)

Some New Vulnerabilities

Infectious diseases are mainly associated with developing countries—but in our interconnected world we are now all more vulnerable. The spread of epidemics is aggravated by rapid air travel, plus the huge concentrations of people in megacities with fragile infrastructures. Whether or not a pandemic gets a global grip may hinge on the efficiency of worldwide monitoring—how quickly a Vietnamese or Sudanese poultry farmer can diagnose or report any strange sickness.

In our everyday lives, we have a confused attitude toward risk. We fret about tiny risks: carcinogens in food, a one-in-a-million chance of being killed in train crashes, and so forth. But we’re in denial about others that should loom much larger. If we apply to pandemics the same prudent analysis that leads us to buy insurance—multiplying probability by consequences—we’d surely conclude that measures to alleviate this kind of extreme event need higher priority. A global pandemic could kill tens of millions and cost many trillions of dollars.
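
The insurance-style reasoning, multiplying probability by consequences, can be made concrete in a few lines. In the sketch below the one-in-a-million risk and the “tens of millions” death toll come from the paragraph above; the exposed population of one billion, the 1 percent annual chance of a pandemic, and the specific toll of 20 million are hypothetical assumptions made only to show the comparison.

```python
def expected_loss(probability, consequence):
    """Expected loss: the probability of an event multiplied by its consequence."""
    return probability * consequence


# A tiny, familiar risk: a one-in-a-million chance of death (from the text),
# applied to a hypothetical exposed population of one billion people.
familiar_risk_deaths = expected_loss(1e-6, 1_000_000_000)

# A rare, extreme event: a pandemic killing 20 million (hypothetical point
# within "tens of millions"), with an assumed 1 percent chance in a given year.
pandemic_deaths = expected_loss(0.01, 20_000_000)

print(f"familiar risk: about {familiar_risk_deaths:,.0f} expected deaths per year")
print(f"pandemic:      about {pandemic_deaths:,.0f} expected deaths per year")
```

Under these assumed numbers the rare pandemic carries an expected toll some two hundred times larger than the familiar risk, even though in most years it does not happen at all.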

There are also new vulnerabilities stemming from the misuse of powerful technologies—either through error or by design. Biotechnology, for instance, holds huge promise for health care, for enhanced food production, even for energy. But there is a downside. Here’s a quote from the American National Academy of Sciences:

Just a few individuals with specialized scientific skills…could inexpensively and easily produce a panoply of lethal biological weapons that might seriously threaten the US population…. The deciphering of the human genome sequence and the complete elucidation of numerous pathogen genomes…allow science to be misused to create new agents of mass destruction.

Not even an organized network would be required: just a fanatic, or a weirdo with the mindset of those who now design computer viruses—the mindset of an arsonist. The techniques and expertise for bio or cyber attacks will be accessible to millions.

We’re kidding ourselves if we think that technical expertise is always allied with balanced rationality: it can be combined with fanaticism—not just the religious fundamentalism that we’re so mindful of today, but new age irrationalities. I’m thinking of cults such as the Raelians, and of extreme eco-freaks, violent animal rights campaigners, and the like. The global village will have its village idiots.

In a future era of vast empowerment of individuals to take actions that may cause harm, where even one malign act would be too many, how can our open society be safeguarded? Will there be pressures to constrain diversity and individualism? Or to shift the balance between privacy and intrusion? These are stark questions, but I think they are deeply serious ones. (Though the careless abandon with which younger people post their intimate details on Facebook, and the broad acquiescence in ubiquitous security cameras, suggest that in our society there will be surprisingly little resistance to loss of privacy.)

Developments in cyber-, bio-, or nanotechnology will open up new risks of error or terror.2 Our global society is precariously dependent on elaborate networks—electricity grids, air traffic control, the Internet, just-in-time delivery, and so forth—the collapse of any one of which could stress it to the breaking point. It’s crucial to ensure maximal resilience of all such systems.

The great science-fiction writer Arthur C. Clarke opined that any ultra-advanced technology was indistinguishable from magic. Everyday consumer items like Sony game stations, GPS receivers, and Google would have seemed magic fifty years ago.

I have cited three technologies that now pervade our lives in ways quite unenvisioned fifty years ago. Likewise, by extrapolating from the present, I have surely missed the qualitatively greatest changes that may occur in the next fifty.

In the coming decades, there could be qualitatively new kinds of change. One thing that’s been unaltered for millennia is human nature and human character. But in this century, novel mind-enhancing drugs, genetics, and “cyborg” techniques may start to alter human beings themselves. That’s something qualitatively new in recorded history. And we should keep our minds open, or at least ajar, to concepts on the fringe of science fiction—robots with many human attributes, computers that make discoveries worthy of Nobel Prizes, bioengineered organisms, and so forth. Flaky Californian futurologists aren’t always wrong.

Opinion polls in England show that people are generally positive about science, but are concerned that it may “run away” faster than we can properly cope with it. Some commentators on biotech, robotics, and nanotech worry that when the genie is out of the bottle, the outcome may be impossible to control. They urge caution in “pushing the envelope” in some fields of science.

The uses of academic research generally can’t be foreseen: Rutherford famously said, in the mid-Thirties, that nuclear energy was “moonshine”; the inventors of lasers didn’t foresee that an early application of their work would be to eye surgery; the discoverer of X-rays was not searching for ways to see through flesh. A major scientific discovery is likely to have many applications—some benign, others less so—none of which was anticipated by the original investigator.

We can’t reap the benefits of science without accepting some risks—the best we can do is minimize them. Most surgical procedures, even if now routine, were risky and often fatal when they were being pioneered. In the early days of steam, people died when poorly designed boilers exploded. But something has changed. Most of the old risks were localized. If a boiler explodes, it’s horrible but there’s an “upper bound” to just how horrible. In our ever more interconnected world, there are new risks whose consequences could be so widespread that even a tiny probability is unacceptable. There will surely be a widening gulf between what science enables us to do and what applications it’s prudent or ethical actually to pursue—more doors that science could open but which are best kept closed.

There are already scientific procedures—human reproductive cloning and synthetic biology among them—where regulation is called for, on ethical as well as prudential grounds. And there will be more. Regulations will need to be international, and to contend with commercial pressures—and they may prove as hard to enforce as the drug laws. If one country alone imposed regulations, the most dynamic researchers and enterprising companies would migrate to another that was more permissive. This is happening already, in a small way, in primate and stem cell research.

The International Scientific Community

Science is the only truly global culture: protons, proteins, and Pythagoras’ theorem are the same from China to Peru. Research is international, highly networked, and collaborative. And most science-linked policy issues are international, even global—that’s certainly true of those I’ve addressed here.

It is worth mentioning that the United States and Britain have been until now the most successful in creating and sustaining world-class research universities. These institutions are magnets for talent—both faculty and students—from all over the world, and are in most cases embedded in a “cluster” of high-tech companies, to symbiotic benefit.

By 2050, China and India should at least gain parity with Europe and the US—they will surely become the “center of gravity” of the world’s intellectual power. We will need to aim high if we are to sustain our competitive advantage in offering cutting-edge “value added.”

It’s a duty of scientific academies and similar bodies to ensure that policy decisions are based on the best science, even when that science is still uncertain and provisional; this is the Royal Society’s role in the UK and that of the National Academy of Sciences in the US. The academies of the G8 countries, along with those of Brazil, China, India, Mexico, and South Africa, now meet regularly to discuss global issues. And one thinks of consortia like the Intergovernmental Panel on Climate Change, and bodies like the World Health Organization.

In the UK, an ongoing dialogue with parliamentarians on embryos and stem cells has led to a generally admired legal framework. On the other hand, the debate about genetically modified (GM) crops went wrong in the UK because we came in too late, when opinion was already polarized between eco-campaigners on the one side and commercial interests on the other. I think we have recently done better on nanotechnology, by raising the key issues early. It’s necessary to engage with the public well before there are demands for any legislation or commercial developments.

Some subjects have had the “inside track” and gained disproportionate resources; huge sums, for instance, are still devoted to new weaponry. On the other hand, environmental projects, renewable energy, and so forth deserve more effort. In medicine, the focus is disproportionately on cancer and cardiovascular studies, the ailments that loom largest in prosperous countries, rather than on the infections endemic in the tropics.

Policy decisions—whether about energy, GM technology, mind-enhancing drugs, or whatever—are never solely scientific: strategic, economic, social, and ethical ramifications enter as well. And here scientists have no special credentials. Choices on how science is applied shouldn’t be made just by scientists. That’s why everyone needs a “feel” for science and a realistic attitude toward risk—otherwise public debate won’t rise above the level of tabloid slogans.

Scientists nonetheless have a special responsibility. We feel there is something lacking in parents who don’t care what happens to their children in adulthood, even though this is largely beyond their control. Likewise, scientists shouldn’t be indifferent to the fruits of their ideas—their intellectual creations. They should try to foster benign spin-offs—and of course help to bring their work to market when appropriate. But they should campaign to resist, so far as they can, ethically dubious or threatening applications. And they should be prepared to engage in public debate and discussion.

I mentioned earlier the atomic scientists in World War II. Many of them—and I’ve been privileged to know some, such as Hans Bethe and Joseph Rotblat—set a fine example. They didn’t say that they were “just scientists” and that the use made of their work was up to politicians. They continued as engaged citizens—promoting efforts to control the power they had helped unleash. We now need such individuals—not just in physics, but across the whole range of applicable science.

A Cosmic Perspective

My special subject is astronomy—the study of our environment in the widest conceivable sense. And I’d like to end with a cosmic perspective.

It is surely a cultural deprivation to be unaware of the marvelous vision of nature offered by Darwinism and by modern cosmology—the chain of emergent complexity leading from a still-mysterious beginning to atoms, stars, planets, biospheres, and human brains able to ponder the wonder and the mystery. And there’s no reason to regard humans as the culmination of this emergent process. Our sun is less than halfway through its life. Any creatures witnessing the sun’s demise, here on earth or far beyond, won’t be human—they’ll be as different from us as we are from bacteria.

But even in this cosmic time perspective—extending billions of years into the future, as well as into the past—this century may be a defining moment. It’s the first in our planet’s history where one species—ours—has the earth’s future in its hands.

Suppose some aliens had been watching our planet—a “pale blue dot” in a vast cosmos—for its entire history. What would they have seen? Over nearly all that immense time, 4.5 billion years, the earth’s appearance would have altered very gradually. The continents drifted; the ice cover waxed and waned; successive species emerged, evolved, and became extinct. But in just a tiny sliver of the earth’s history—the last one millionth part, a few thousand years—the patterns of vegetation altered much faster than before. This signaled the start of agriculture. The changes accelerated as human populations rose.

But then there were other changes, even more abrupt. Within fifty years—little more than one hundredth of a millionth of the earth’s age—the carbon dioxide in the atmosphere began to increase anomalously fast. The planet became an intense emitter of radio waves (i.e., the total output from all TV, cell phone, and radar transmissions). And something else unprecedented happened: small projectiles lifted from the planet’s surface and escaped the biosphere completely. Some were propelled into orbits around the earth; some journeyed to the moon and planets.
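
The fractions quoted in this cosmic timeline follow directly from the earth’s age of 4.5 billion years. A short check, using only figures given in the text:

```python
EARTH_AGE_YEARS = 4.5e9  # age of the earth, as given in the text

# "The last one millionth part" of the earth's history, expressed in years.
one_millionth_in_years = EARTH_AGE_YEARS * 1e-6

# Fifty years as a fraction of the earth's age.
fifty_year_fraction = 50 / EARTH_AGE_YEARS

# "One hundredth of a millionth" written as a plain number.
hundredth_of_a_millionth = 1e-2 * 1e-6

print(f"one millionth of the earth's age: {one_millionth_in_years:,.0f} years")
print(f"fifty years as a fraction of the earth's age: {fifty_year_fraction:.2e}")
print(f"ratio to one hundredth of a millionth: {fifty_year_fraction / hundredth_of_a_millionth:.2f}")
```

One millionth of the earth’s age comes to 4,500 years, “a few thousand”; and fifty years is a fraction slightly above one hundredth of a millionth, as stated.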

If they understood astrophysics, the aliens could confidently predict that the biosphere would face doom in a few billion years when the sun flares up and dies. But could they have predicted this unprecedented spike less than half-way through the earth’s life—these human-induced alterations occupying, overall, less than a millionth of the elapsed lifetime and seemingly occurring with runaway speed? If they continued to keep watch, what might these hypothetical aliens witness in the next hundred years? Will a final spasm be followed by silence? Or will the planet itself stabilize? And will some of the objects launched from the earth spawn new oases of life elsewhere?

The answers will depend on us, collectively—on whether we can, as Brent Scowcroft said in his Ditchley lecture last year, “behave wisely, prudently and in close strategic cooperation with each other.”

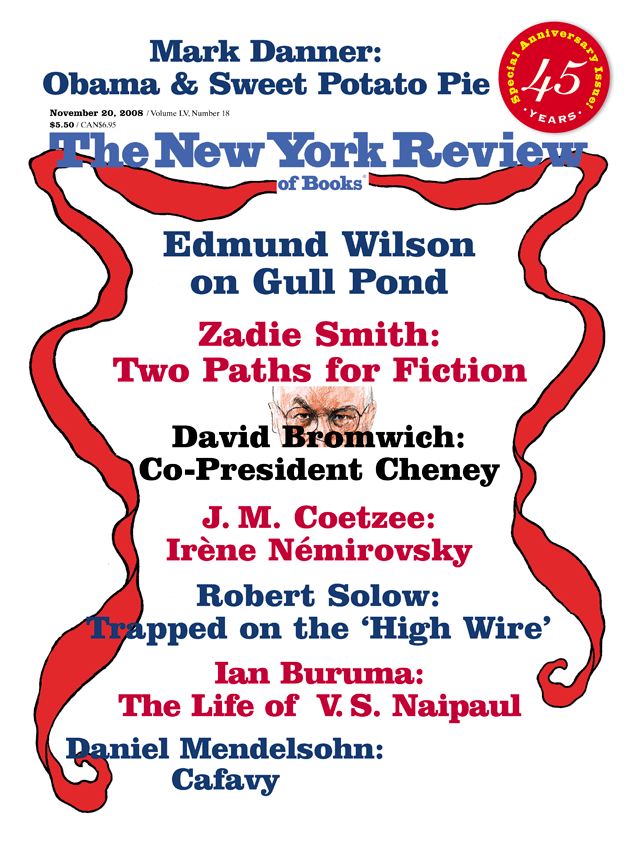

This Issue

November 20, 2008

1. There have been some futuristic proposals to counteract climate change by global-scale projects to attenuate the sunlight reaching the ground, or to “soak up” atmospheric CO2. The huge costs and unpredictable downsides of such “geoengineering” would render it less politically acceptable than more conventional measures. But this approach deserves some study, provided that the focus is not diverted from reductions of CO2 emissions and from adaptation.

2. A nanometer is one billionth of a meter, and nanotechnology involves the production and application of systems with crucial structures on this tiny scale, comparable in size to individual molecules. For British reports on the prospects for this subject, and on possible dangers of nanoparticles, see the relevant Web site of the Royal Society: www.nanotec.org.uk.