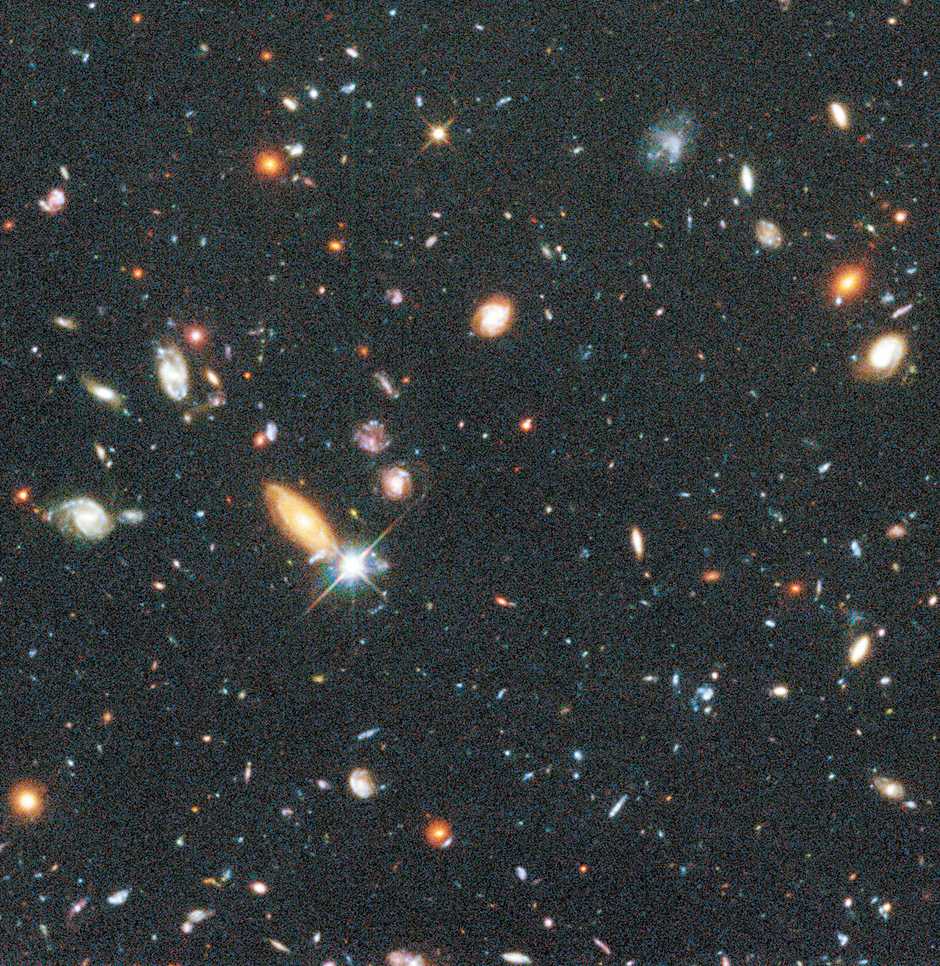

Robert Williams/Hubble Deep Field Team

A view of about a quarter of the ‘Hubble Deep Field,’ a tiny patch of sky about one thirtieth the diameter of the full moon, photographed in December 1995 by the Hubble Space Telescope with an exposure time of ten days, showing the ‘deepest-ever’ view of the universe. The galaxies in this picture are so far away that their light has been traveling to us for most of the history of the universe.

In the past fifty years two large branches of physical science have each made a historic transition. I recall both cosmology and elementary particle physics in the early 1960s as cacophonies of competing conjectures. By now in each case we have a widely accepted theory, known as a “standard model.”

Cosmology and elementary particle physics span a range from the largest to the smallest distances about which we have any reliable knowledge. The cosmologist looks out to a cosmic horizon, the farthest distance light could have traveled since the universe became transparent to light over ten billion years ago, while the elementary particle physicist explores distances much smaller than an atomic nucleus. Yet our standard models really work—they allow us to make numerical predictions of high precision, which turn out to agree with observation.

Up to a point the stories of cosmology and particle physics can be told separately. In the end, though, they will come together.

1.

Scientific cosmology got its start in the 1920s. It was discovered then that little clouds always visible at fixed positions among the stars are actually distant galaxies like our own Milky Way, each containing many billions of stars. Then it was found that these galaxies are all rushing away from us and from each other. For decades cosmological research consisted almost entirely of an attempt to pin down the rate of expansion of the universe, and to measure how it may be changing.

Oddly, little attention was given to an obvious conclusion: if the galaxies are rushing apart, there would have been a time in the past when they were all crunched together. From the measured expansion rate, one could conclude that this time was some billions of years ago. Calculations in the late 1940s showed that the early universe must have been very hot, or else all the hydrogen in the universe (by far the most common element now) would have combined into heavier elements. The hot matter would have radiated light, light that would have survived to the present as a faint static of microwave radiation cooled by the expansion of the universe to a present temperature of a few degrees above absolute zero.¹
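The step from a measured expansion rate to an age of "some billions of years" can be sketched numerically. The snippet below is only a rough illustration: it assumes the expansion rate has always had its present value, and the figure of 70 km/s per megaparsec is an illustrative round number that does not appear in this essay. The function name is likewise made up for the example.

```python
# Rough "Hubble time": if galaxies had always receded at today's rate,
# they would all have been together a time 1/H0 ago.

KM_PER_MPC = 3.0857e19       # kilometres in one megaparsec
SECONDS_PER_YEAR = 3.156e7   # seconds in one year (approximate)


def hubble_time_gyr(h0_km_s_mpc: float) -> float:
    """Return 1/H0 in billions of years, for H0 given in km/s per Mpc."""
    h0_per_second = h0_km_s_mpc / KM_PER_MPC  # expansion rate in units of 1/s
    seconds = 1.0 / h0_per_second
    return seconds / SECONDS_PER_YEAR / 1e9


# ASSUMPTION: 70 km/s/Mpc is an illustrative value, not taken from the essay.
age = hubble_time_gyr(70.0)
print(f"1/H0 is roughly {age:.1f} billion years")
```

Because the expansion rate has in fact changed over time, this is an order-of-magnitude estimate rather than a measurement of the age.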

No search was carried on then for this leftover cosmic microwave background radiation, and the prediction was largely forgotten. For a while some theorists even speculated that the universe is in a steady state, always looking pretty much the same, with new matter continually created to fill the gaps between the receding galaxies.

The modern era of scientific cosmology began forty-eight years ago, with the accidental discovery of the cosmic microwave background radiation. So much for the steady state cosmology—there was an early universe. This microwave radiation has been under intensive study since the mid-1960s, both from unmanned satellites in orbit and from large ground-based radio telescopes. Its present temperature is now known to be 2.725 degrees Centigrade above absolute zero. When this datum is used in calculations of the formation of the nuclei of atoms in the first three minutes after the start of the big bang, the predicted present abundance of light elements (isotopes of hydrogen, helium, and lithium) comes out pretty much in agreement with observation. (Heavier elements are known to be produced in stars.)

More important than the measurement of the precise value of the temperature is the discovery in 1977 that the temperature of the microwave radiation is not the same throughout the sky. There are small ripples in the temperature, fluctuations of about one part in a hundred thousand. This was not entirely a surprise. There would have to have been some such ripples, caused by small lumps in the matter of the early universe that are needed to serve as seeds for the later gravitational condensation of matter into galaxies.

These lumps and ripples are due to chaotic sound waves in the matter of the early universe. As long as the temperature of the universe remained higher than about 3,000 degrees, the electrons in this hot matter remained free, continually scattering radiation, so that the compression and rarefaction in the sound waves produced a corresponding variation in the intensity of radiation. We cannot directly see into this era, because the interaction of radiation and free electrons made the universe opaque, but when the universe cooled to 3,000 degrees the free electrons became locked in hydrogen atoms, and the universe became transparent. The radiation present at that time has survived, cooled by the subsequent expansion of the universe, but still bearing the imprint of the sound waves that filled the universe before it became transparent.
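The two temperatures quoted so far, about 3,000 degrees at transparency and 2.725 degrees today, give a sense of how much the universe has expanded in between. The sketch below assumes the standard result that the temperature of the radiation falls in inverse proportion to the stretching of distances; the function name is hypothetical.

```python
# How much have distances stretched since the universe became transparent?
# ASSUMPTION: radiation temperature is inversely proportional to the
# stretching of distances during the expansion.

T_TRANSPARENCY = 3000.0  # degrees above absolute zero (figure from the essay)
T_TODAY = 2.725          # degrees above absolute zero (figure from the essay)


def stretch_factor(t_then: float, t_now: float) -> float:
    """Factor by which distances have grown while the radiation cooled."""
    return t_then / t_now


factor = stretch_factor(T_TRANSPARENCY, T_TODAY)
print(f"Distances have stretched by a factor of about {factor:.0f}")
```

On these numbers the universe is roughly a thousand times larger in linear scale than it was when it became transparent.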


Inevitably these physical processes came under intense observation and theoretical study. This work showed that the universe became suddenly transparent at a time some 380,000 years after the creation of atomic nuclei. From observed details of the ripples in the cosmic microwave background we can calculate the abundances of the various types of elementary particle that must have been present in the years before transparency began.

The results reveal a mystery. It turns out that particles already known to us are not enough to account for the mass of the hot matter in which the sound waves must have propagated. Fully five sixths of the matter of the universe would have to be some kind of “dark matter,” which does not emit or absorb light. The existence of this much dark matter in the present universe had already been inferred from the fact that clusters of galaxies hold together gravitationally, despite the high random speeds of the galaxies in the clusters. So this is a great puzzle: What is the dark matter? Theories abound, and attempts are underway to catch ambient dark matter particles or remnants of their annihilation in detectors on Earth or to create dark matter in accelerators. But so far dark matter has not been found, and no one knows what it is.

Astronomers have kept working on the old program of mapping the speed at which galaxies are rushing away from us and from each other. Their work has led to a great discovery. It had been naturally supposed that the expansion of the universe was slowing down, due to the gravitational attraction of the galaxies for each other, just as a stone thrown upward slows its rise under the influence of the Earth’s gravity. The big question had always been whether the expansion of the universe would eventually stop and go into reverse, like a stone falling back to Earth, or, although slowing, would nevertheless continue forever, like a stone thrown upward with the velocity needed to escape the Earth’s gravity.

In 1998, using the apparent brightness of exploding stars to measure the distance of far galaxies, two groups of astronomers found that the expansion of the universe is not slowing down at all, but rather speeding up. Within the rules of the general theory of relativity, this could only be explained by an energy that is not contained in the masses of any sort of particles, dark or otherwise, but in a “dark energy” inherent in space itself, which produces a sort of antigravity pushing the galaxies apart.

From these measurements, and also from studies of the effect of the expansion of the universe on the cosmic radiation background, it has been found that the dark energy now makes up about three quarters of the total energy of the universe. We have also learned that the universe has been expanding for 13.8 billion years since the time it became transparent. So now we have a standard cosmological model: our expanding universe is mostly dark energy and dark matter. In this darkness there is a small admixture, a few percent of the whole, which consists of the ordinary matter that makes up the stars and planets and us.
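The fractions quoted in this section can be checked against one another. The sketch below takes "about three quarters" for dark energy and "five sixths" for the dark share of matter, and assumes that everything that is not dark energy counts as matter (ignoring the small present contribution of radiation). The variable names are chosen only for this illustration.

```python
from fractions import Fraction

# Figures quoted in the essay (both approximate):
dark_energy = Fraction(3, 4)           # share of the total energy
dark_share_of_matter = Fraction(5, 6)  # share of all matter that is dark

# ASSUMPTION: whatever is not dark energy is matter (radiation neglected).
matter = 1 - dark_energy
dark_matter = dark_share_of_matter * matter
ordinary_matter = matter - dark_matter

for name, share in [("dark energy", dark_energy),
                    ("dark matter", dark_matter),
                    ("ordinary matter", ordinary_matter)]:
    print(f"{name:16s} {float(share):6.1%}")
```

Ordinary matter comes out at one part in twenty-four, about 4 percent, consistent with "a few percent of the whole."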

2.

The history of elementary particle physics has followed a very different course from that of cosmology. Rather than being starved for data fifty years ago, we were deluged by data we could not understand. Progress when it came was generally initiated by theoretical advances, with experimentation serving as a referee between competing theories and providing occasional healthy surprises.

By the late 1940s we had a good theory for one kind of force that acts on elementary particles, such as electrons—the force of electromagnetism. This theory, quantum electrodynamics, is a particular instance of a general class known as quantum field theories. That is, the quantities appearing in the fundamental equations are fields, which fill space like water filling a tub. Elementary particles are secondary; they are “quanta” of the fields, bundles of the fields’ energy and momentum, like eddies in water. Photons, the massless particles of light, are the quanta of the electromagnetic field, and electrons are the quanta of an electron field.

Calculations in quantum electrodynamics could be done with great accuracy because the forces are fairly weak. The rate of any process in a quantum field theory is given by a sum, each term in the sum corresponding to one possible sequence of intermediate steps by which the process can occur. For instance, when two electrons collide, one electron can emit a photon that is absorbed by the other electron, or one electron can emit two photons that are absorbed by the other electron in the same or the reverse order that they were emitted, or one electron can emit two photons, with one photon absorbed by the electron that emitted it and the other photon absorbed by the other electron, and so on.


There are always an infinite number of these scenarios, which generally makes exact calculations impossible, but when forces are weak the main contributions to calculating the rates of the process come from the simplest scenarios. Neglecting all but a few of the largest contributions in quantum electrodynamics gave results in stunning agreement with experiment. Some of us fifty years ago dreamed of finding a more comprehensive quantum field theory that would describe all the particles and forces of nature as happily as quantum electrodynamics had already described photons and electrons. And so it has (more or less) turned out.
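Why a weak force lets us keep only the simplest scenarios can be illustrated with a toy calculation. The sketch assumes that each additional photon exchanged multiplies a contribution by roughly the fine-structure constant, about 1/137, and it ignores the numerical coefficients that a real calculation would supply. It is a caricature of the bookkeeping, not a calculation in quantum electrodynamics, and the function name is invented for the example.

```python
# Toy model: rough size of contributions with 1, 2, 3, ... exchanged photons.
# ASSUMPTION: each extra exchange costs one factor of the coupling strength;
# real coefficients are ignored.

ALPHA = 1 / 137.036  # approximate fine-structure constant


def term_sizes(coupling: float, n_terms: int = 5) -> list[float]:
    """Return coupling**n for n = 1..n_terms."""
    return [coupling ** n for n in range(1, n_terms + 1)]


weak = term_sizes(ALPHA)   # electromagnetism: terms shrink quickly
strong = term_sizes(1.0)   # a coupling of order one, for comparison

for n, (w, s) in enumerate(zip(weak, strong), start=1):
    print(f"{n} exchange(s): weak coupling ~ {w:.1e}, order-one coupling ~ {s:.1e}")
```

With a coupling near 1/137 each successive group of scenarios is suppressed by more than a factor of a hundred, which is why a few terms suffice; with a coupling of order one, no term can be neglected.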

It took a while. There is another force, even weaker than electromagnetism, called the weak nuclear force, which occasionally turns a neutron in an atomic nucleus into a proton, or vice versa. By the 1950s studies of radioactivity had led to a quantum field theory of the weak nuclear force that worked well in accounting for existing data. The trouble was that when the theory was pushed beyond the familiar ambit of radioactivity, and used to calculate the rates of exotic processes that for practical reasons could not be studied experimentally, it gave infinite results, clearly nonsense. Similar infinities had been encountered in the early days of quantum electrodynamics, but then theorists realized that the infinities would all cancel out² if we took care with the definition of the electron’s mass and electric charge (a procedure called “renormalization”). No such cancellation seemed to be possible for the weak nuclear force.

The solution found in the late 1960s was a new quantum field theory of the weak nuclear forces. This theory was not only patterned after quantum electrodynamics, but incorporated quantum electrodynamics as a special case. Just as electromagnetic forces are transmitted by the exchange of photons, the weak nuclear force in this “electroweak” theory is transmitted by the exchange of related particles called W+, W-, and Z0.

Speculations of this sort ran into an obvious difficulty: photons have no mass, while any new particles such as W+, W-, and Z0 would have to be very heavy, or they would have been discovered decades earlier. The heavier the particle, the higher the energy needed to create it in a particle accelerator, and the more expensive the accelerator. There was also the stubborn problem of infinities. The solution lay in an idea known as broken symmetry, which had been developed and successfully applied in other areas of particle physics since 1960. The equations of a theory may possess certain simplicities, such as relations among the photon, W+, W-, and Z0, which are not present in the solutions of the equations that describe what we actually observe.³ In the electroweak theory there is an exact symmetry between weak and electromagnetic forces, which would make the W+, W-, and Z0 massless, if it were not that the symmetry is broken by four proposed “scalar” fields⁴ that permeate the universe, from which the W+, W-, and Z0 as well as the electron get masses. A new particle discovered last year appears to be the predicted quantum of one of these scalar fields.

Because the equations of the electroweak theory are similar to those of quantum electrodynamics, it seemed likely that all infinities in the theory would cancel. This was proved in 1971. Effects of the exchange of Z0 particles were detected in 1973, and turned out to agree with the predictions of the electroweak theory. The W+, W-, and Z0 particles themselves were discovered a decade later, all with the expected properties.

It took a little longer to understand another force, the strong nuclear force that holds protons and neutrons together inside atomic nuclei. Fifty years ago we had mountains of data about this force, and we could imagine any number of quantum field theories that could potentially describe it, but we had no way to use the data to pick out the right theory. Because this force is strong, every possible sequence of intermediate steps makes a significant contribution to whatever we are calculating. It was hopeless to add up these contributions even approximately, the way we could do in the electroweak theory.

Worse yet, as time passed more and more types of particles were discovered that are affected by the strong nuclear force. It seemed unlikely that all these hundreds of particle types could be the quanta of different fields, i.e., bundles of the fields’ energy, one for each particle type. Some sense could be made of all these particles by supposing that they were composites of a few kinds of truly elementary particles, called quarks. Three quarks were supposed to combine to make up each proton and neutron in an atomic nucleus. But if so, why had experimenters been unable to find these quarks? I remember a widespread despairing doubt about whether the strong forces could be described by any quantum field theory.

Then in the early 1970s the right theory was discovered. Like the successful electroweak theory, it turned out to resemble quantum electrodynamics, only now with a quantity called “color” taking the place of electric charge. In this theory, known as quantum chromodynamics, the strong forces between quarks are produced by the exchange of eight kinds of photon-like particles known as gluons. Quantum chromodynamics explained an experimental result: the strong interactions among the quarks seem to become weaker when the quarks are studied at fine scales of distance, as when they are hit with high-energy electrons. This weakening of the force made it possible to do various approximate calculations like those done in the electroweak theory, and the results agreed with experiment, validating the theory.

Gluons have never been found in any experiment. At first it was supposed that this was because these particles’ masses are too large for them to have been produced in existing accelerators. Gluons could get large masses through a symmetry breakdown in the same way that the W+, W-, and Z0 get large masses in the electroweak theory. Even if so, there would still be a mystery about why quarks had never been found. It was hard to believe that quarks were very heavy; they could hardly be much heavier than the particles like protons and neutrons that contain them.

Then a few theorists suggested that since the strong force in quantum chromodynamics becomes weak when studied at small distance scales, perhaps it becomes very strong at large distances, so strong that it is never possible to pull colored particles like quarks and gluons apart. No one has proved mathematically that this is true, but most physicists believe it is, and there seems no prospect that isolated quarks or gluons will ever be found.

So now we have a standard model of elementary particles. Its ingredients are quantum fields, and the various elementary particles that are the quanta of those fields: the photon, W+, W-, and Z0 particles, eight gluons, six types of quarks, the electron and two types of similar particles, and three kinds of nearly massless particles called neutrinos. The equations of this theory are not arbitrary; they are tightly constrained by various symmetry principles and by the condition of cancellation of infinities.

Even so, the standard model is clearly not the final theory. Its equations involve a score of numbers, like the masses of quarks, that have to be taken from experiment without our understanding why they are what they are. If the standard model were the whole story, it would require neutrinos to have zero mass, while in fact their masses are merely very small, less than a millionth the mass of an electron. Further, the standard model does not include the longest-known and most familiar force, the force of gravitation. We commonly describe gravitation using a field theory, the general theory of relativity, but this is not a quantum field theory in which infinities cancel as they do in the standard model.

Since the 1980s a tremendous amount of mathematically sophisticated work has been devoted to the development of a quantum theory whose fundamental ingredients are not particles or fields but tiny strings, whose various modes of vibration we observe as the various kinds of elementary particle. One of these modes corresponds to the graviton, the quantum of the gravitational field. String theory if true would not invalidate field theories like the standard model or general relativity; they would just be demoted to “effective field theories,” approximations valid at the scales of distance and energy that we have been able to explore.

String theory is attractive because it incorporates gravitation, it contains no infinities, and its structure is tightly constrained by conditions of mathematical consistency, so apparently there is just one string theory. Unfortunately, although we do not yet know the exact underlying equations of string theory, there are reasons to believe that whatever these equations are, they have a vast number of solutions. I have been a fan of string theory, but it is disappointing that no one so far has succeeded in finding a solution that corresponds to the world we observe.

3.

The problems of elementary particle physics and cosmology have increasingly merged. There is a classic problem of cosmology: Why is the universe so nearly uniform? In the 13.8 billion years since the universe became transparent there has not been time for any physical influence to have connected parts of the universe that we see in opposite directions, and to have brought them to the homogeneity of density and temperature that we observe them to have. In the early 1980s it was found that in various quantum field theories, before atomic nuclei formed, there would have been an earlier period of “inflation,” during which the universe expanded exponentially. Highly uniform regions that were tiny during inflation would expand to become larger than the size of the present observed universe, remaining approximately uniform. This is highly speculative, but it has had an impressive success: calculations show that quantum fluctuations during inflation would trigger just the sort of chaotic sound waves, a few hundred thousand years later, whose imprint we now see in the cosmic radiation background.

Inflation is naturally chaotic. Bubbles form in the expanding universe, each developing into a big or small bang, perhaps each with different values for what we usually call the constants of nature. The inhabitants (if any) of one bubble cannot observe other bubbles, so to them their bubble appears as the whole universe. The whole assembly of all these universes has come to be called the “multiverse.”

These bubbles may realize all the different solutions of the equations of string theory. If this is true, then the hope of finding a rational explanation for the precise values of quark masses and other constants of the standard model that we observe in our big bang is doomed, for their values would be an accident of the particular part of the multiverse in which we live. We would have to content ourselves with a crude anthropic explanation for some aspects of the universe we see: any beings like ourselves that are capable of studying the universe must be in a part of the universe in which the constants of nature allow the evolution of life and intelligence. Man may indeed be the measure of all things, though not quite in the sense intended by Protagoras.

So far, this anthropic speculation seems to provide the only explanation of the observed value of the dark energy. In the standard model and all other known quantum field theories, the dark energy is just a constant of nature. It could have any value. If we didn’t know any better we might expect the density of dark energy to be similar to the energy densities typical of elementary particle physics, such as the energy density in an atomic nucleus. But then the universe would have expanded so rapidly that no galaxies or stars or planets could have formed. For life to evolve, the dark energy could not be much larger than the value we observe, and there is no reason for it to be any smaller.

Such crude anthropic explanations are not what we have hoped for in physics, but they may have to content us. Physical science has historically progressed not only by finding precise explanations of natural phenomena, but also by discovering what sorts of things can be precisely explained. These may be fewer than we had thought.

This Issue

November 7, 2013


1. Radiation is said to have a certain temperature if its energy per volume at each wavelength is the same as for the radiation in a cave whose walls are kept at that temperature. This radiation is chiefly visible light if its temperature is a few thousand degrees. It is infrared radiation if its temperature is what we are used to in everyday life; and it is microwave radiation if its temperature is a few degrees above absolute zero.

2. That is, some contributions to a rate or energy are positive and infinite, and others are negative and infinite, but the sum is finite.

3. I discussed broken symmetry in these pages in “Symmetry: A ‘Key to Nature’s Secrets,’” October 27, 2011.

4. A scalar field is one that does not point in any direction in space, unlike magnetic and electric fields, which carry a definite sense of direction.