Princeton Architectural Press

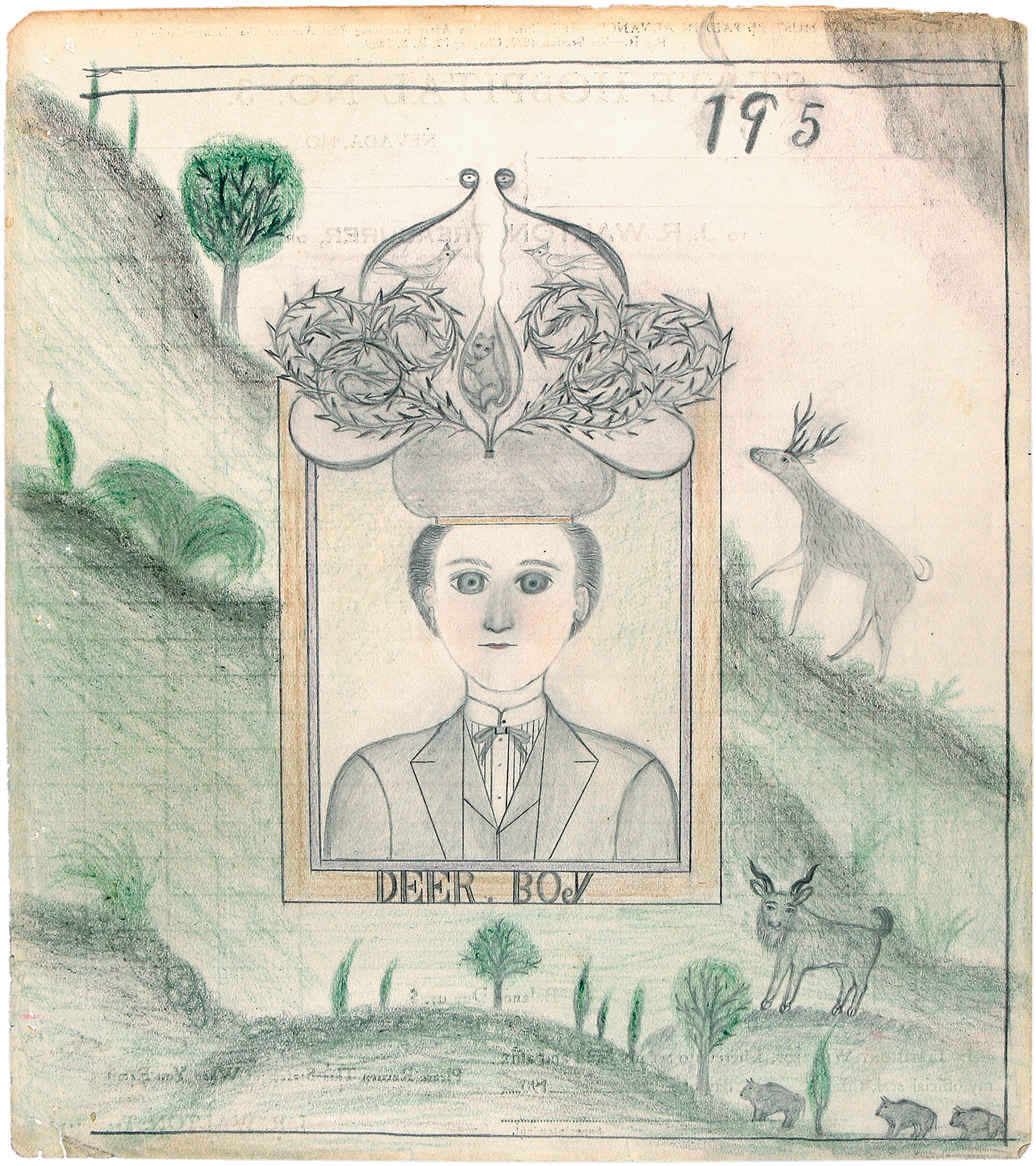

‘Deer, Boy’; drawing by James Edward Deeds Jr. from an album of nearly three hundred drawings that he made during his thirty-seven years as an inmate at a psychiatric hospital in Nevada, Missouri, starting in 1936. The drawings are collected in The Electric Pencil: Drawings from Inside State Hospital No. 3, with an introduction by Richard Goodman and a foreword by Harris Diamant, just published by Princeton Architectural Press.

As you sit reading this, you probably experience an internal voice, unheard by any outsider, that verbally repeats the words you see on the page. That voice (which, in your case, speaks perfect English) is part of what we call your conscious mind.

And the physical organ that causes what you see on the page to be simultaneously voiced internally is what we call your brain. The scientific study of how the brain relates to the mind is what we call cognitive neuroscience.

The brain is an incredibly complex organ, and for most of modern history it has defied serious scientific study. But the development in the past few decades of various technologies, collectively called “brain scans,” that enable us to trace certain operations of the brain has considerably increased our knowledge of how its activities correlate with various mental states.

The law, for its part, is deeply concerned with mental states, particularly intentions. As Justice Oliver Wendell Holmes Jr. famously put it, “Even a dog distinguishes between being stumbled over and being kicked.” Distinctions of intent frequently determine, as a matter of law, the difference between going to prison and going free. Cognitive neuroscience thus holds out the promise of helping us to perceive, decide, and explain how intentions are arrived at and carried out. In theory, therefore, cognitive neuroscience could have a huge impact on the development and refinement of the law.

But there is reason to pause. Cognitive neuroscience is still in its infancy, and much of what has so far emerged that might be relevant to the law consists largely of hypotheses that are far from certainties. The natural impulse of forward-thinking people to employ the wonders of neuroscience in making the law more “modern” and “scientific” needs to be tempered with a healthy skepticism, or some dire results are likely. Indeed, the history of using “brain science” to alter the law is not a pretty picture. A few examples will illustrate the point.

In the early twentieth century, a leading “science” was eugenics, which put forward, among other ideas, a genetic theory about the brain, and also had philosophical components akin to Social Darwinism (and, coincidentally, was first developed by Darwin’s half-cousin, Francis Galton).

Eugenics claimed to be based on scientific principles that “proved” that certain deleterious mental states, most notably “feeblemindedness,” were sufficiently directly inheritable that they could be greatly reduced in number by prohibiting the carriers of the defective genes from procreating. Not only would this be advantageous to society as a whole, it would also virtually eliminate the misery that would be the lot of any child born feebleminded, since very few would be born.

So convincing was this argument, and so attractive its “scientific” basis, that eugenics quickly won the support of a great many enlightened people, such as Alexander Graham Bell, Winston Churchill, W.E.B. Du Bois, Havelock Ellis, Herbert Hoover, John Maynard Keynes, Linus Pauling, Theodore Roosevelt, Margaret Sanger, and George Bernard Shaw. Many of the major universities in the US included a eugenics course in their curriculum.

This widespread acceptance of eugenics also prepared the way for the enactment of state laws that permitted the forced sterilization of women thought to be carriers of “feeblemindedness.” At first such laws were controversial, but in 1927 they were held constitutional by a nearly unanimous Supreme Court in the infamous case of Buck v. Bell. Writing for the eight justices in the majority (including such notables as Louis D. Brandeis, Harlan F. Stone, and William Howard Taft), Justice Oliver Wendell Holmes Jr. found, in effect, that the Virginia state legislature was justified in concluding (based on eugenics) that imbecility was directly heritable, and that the findings of the court in the case showed that not just Carrie Buck but also her mother and illegitimate child were imbecilic. It was therefore entirely lawful to sterilize Buck against her will, because, in Holmes’s words, “Three generations of imbeciles are enough.”

In the first half of the twentieth century, more than 50,000 Americans were sterilized on the basis of eugenics-based laws. It was not until Adolf Hitler became a prominent advocate of eugenics, praising it in Mein Kampf and repeatedly invoking it as a justification for his extermination of Jews, Gypsies, and gays, that the doubtful science behind eugenics began to be subjected to widespread criticism.

Yet even as eugenics began to be discredited in the 1940s, a new kind of “brain science” began to gain legal acceptance, namely, lobotomies. A lobotomy is a surgical procedure that cuts the connections between the prefrontal cortex (the part of the brain most associated with cognition) and the rest of the brain (including the parts more associated with emotions). From the outset of its development in the 1930s, it was heralded as a way to rid patients of chronic obsessions, delusions, and other serious mental problems. It was generally regarded, moreover, as the product of serious science, to the point that its originator, the Portuguese neurologist António Egas Moniz, shared the Nobel Prize for Medicine in 1949 in recognition of its development.

Indeed, lobotomy science was so widely accepted that in the United States alone at least 40,000 lobotomies were performed between 1940 and 1965. While most of these were not court-ordered, the law, accepting lobotomies as sound science, required only the most minimal consent on the part of the patient; often, indeed, the patient was a juvenile and the consent was provided by the patient’s parents. Lobotomies were also performed on homosexuals, who, in what was the official position of the American psychiatric community until 1973, suffered from a serious mental disorder by virtue of their sexual orientation.

Nonetheless, some drawbacks to lobotomies were, or should have been, evident from the outset. About 5 percent of those who underwent the operation died as a result. A much larger percentage were rendered, in effect, human vegetables, with a limited emotional life and decreased cognition. But many of these negative results were kept secret. For example, it was not until John F. Kennedy ran for president that it became widely known that his sister Rosemary had become severely mentally incapacitated as a result of the lobotomy performed on her in 1941, when she was twenty-three years old.

Still, by the early 1960s, enough of the bad news had seeped out that lobotomies began to be subject to public scrutiny and legal limitations. Eventually, most nations banned lobotomies altogether; but they are still legal in the US in limited circumstances.

While US law in the mid-twentieth century tolerated lobotomies, it positively embraced psychiatry in general and Freudian psychoanalysis in particular. This was hardly surprising, since, according to Professor Jeffrey Lieberman, former president of the American Psychiatric Association, “by 1960, almost every major psychiatry position in the country was occupied by a psychoanalyst” and, in turn, “the psychoanalytic movement had assumed the trappings of a religion.” In the judicial establishment, the original high priest of this religion was the brilliant and highly influential federal appellate judge David L. Bazelon.

Having himself undergone psychotherapy, Judge Bazelon, for the better part of the 1950s and 1960s, sought to introduce Freudian concepts and reasoning into the law. For example, in his 1963 opinion in a robbery case called Miller v. United States, Judge Bazelon, quoting from Freud’s article “Psychoanalysis and the Ascertaining of Truth in Courts of Law,” suggested that judges and juries should not infer a defendant’s consciousness of guilt from the fact that the defendant, confronted by a victim with evidence that he had stolen a wallet, tried to flee the scene. Rather, said Bazelon, “Sigmund Freud [has] warned the legal profession [that] ‘you may be led astray…by a neurotic who reacts as though he were guilty even though he is innocent.’” If Freud said it, it must be right.

Eventually, much of the analysis Judge Bazelon used to introduce Freudian notions into the law proved both unworkable as law and unprovable as science, and some of his most important rulings based on such analysis were eventually discarded, sometimes with his own concurrence. Ultimately, Judge Bazelon himself became disenchanted with psychoanalysis. In a 1974 address to the American Psychiatric Association, he denounced certain forms of psychiatric testimony as “wizardry” and added that “in no case is it more difficult to elicit productive and reliable expert testimony than in cases that call on the knowledge and practice of psychiatry.” But this change of attitude of Judge Bazelon, and others, came too late to be of much use to the hundreds of persons who had been declared incompetent, civilly committed to asylums, or otherwise deprived of their rights on the basis of what Judge Bazelon subsequently denounced as “conclusory statements couched in psychiatric terminology” (a form of testimony that persists to this day in many court proceedings).

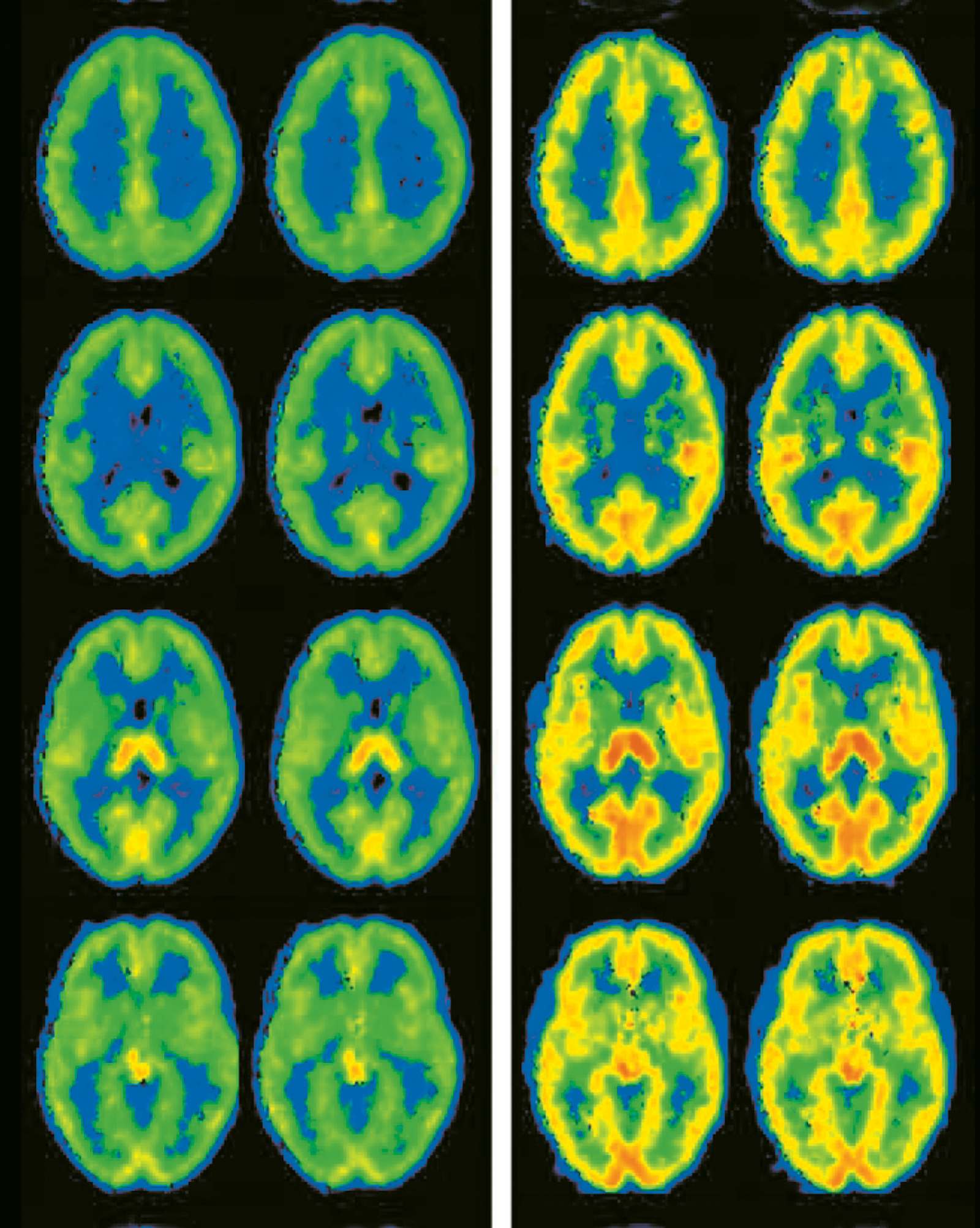

Nelly Alia-Klein et al., “Brain Monoamine Oxidase A Activity Predicts Trait Aggression,” Journal of Neuroscience, May 7, 2008

Brain activity in ‘aggressive and nonaggressive participants’; from a 2008 study of trait aggression as a predictor of future violence, published in The Journal of Neuroscience

A final example of how the law was misled by what previously passed for good brain science must be mentioned, since it was the subject of controversy in these very pages. Beginning in the 1980s, a growing number of prominent psychotherapists advocated suggestive techniques to help their patients “recover” supposedly repressed memories of past traumas, such as childhood incestuous rapes. Eventually, in the early 1990s, more than a hundred people were prosecuted in the United States for sexual abuse based on such retrieved memories, and even though in most of these cases there was little or no other evidence, more than a quarter of the accused were convicted.

But also beginning in the early 1990s, careful studies undertaken by memory experts, most prominently Professor Elizabeth Loftus, showed that many of the techniques used in helping people to recover repressed memories had the ability to implant false memories in them, thus casting doubt on the entire enterprise. In reviewing Professor Loftus’s work in these pages in 1994, Frederick Crews argued that the reason the recovered memory approach had gained such acceptance despite its limited scientific legitimacy was that it was politically correct.* His analysis provoked some irate letters in response. In the end, however, the work of Loftus and other serious scientists was so convincing that it prevailed. But while many of those convicted on the basis of recovered memory evidence were then released, others were not, and some may still be in prison.

It is only fair to note that, just as the law has often been too quick to accept, and too slow to abandon, the “accepted” brain science of the moment, it is equally the case that the law has sometimes asked of brain scientists more than they are equipped to deliver. Consider, for example, the process of civil commitment, by which persons with serious mental disorders are involuntarily confined to psychiatric wards, mental facilities, insane asylums, and the like. Although these facilities (many of which have now been shut down) are in some respects like prisons, especially since the “patients” are not free to leave the premises and must follow the orders of their keepers, commitment to these facilities differs from commitment to prison in that the committed individuals are confined for an “indefinite period” (sometimes forever) until their treatment is sufficiently successful as to warrant their release. Moreover, the commitment comes about not by their being criminally convicted by a jury on proof beyond a reasonable doubt, but rather by a judge making a determination—almost exclusively on the basis of psychiatric testimony—that it is more likely than not that they meet the civil standard for commitment.

What is that standard? In most American jurisdictions prior to 1960, the standard was that the individual was “in serious need of [mental] treatment.” This standard had the virtue of being one that was acceptable to most psychiatrists, for a large part of their everyday practice was determining who needed mental treatment and of what kind. But the standard suffered from a vagueness and concomitant arbitrariness that troubled courts and legislators. Accordingly, beginning in the mid-1960s it was replaced, in nearly all jurisdictions, by the current standard: that a particular person, by reason of mental problems, “is a danger to himself or others.”

What this means, in practice, is that the psychiatrist testifying at a civil commitment hearing must make a prediction about whether the person is likely to engage in violence. But if there is one thing psychiatrists are not very good at, it is predicting future violence. Indeed, in an amicus brief submitted to the Supreme Court in 1980, the American Psychiatric Association reported that its members were frequently no better than laypeople in predicting future violence. The “future danger” test, it argued, was therefore not a very useful one.

Yet it remains the test, and the law thus forces psychiatrists called to testify at a civil commitment hearing to make the very prediction they have difficulty making.

The foregoing illustrates that brain science and the law have not easily meshed in the past, suggesting that future interactions should be approached with caution. Which brings us to the interplay of law and cognitive neuroscience. As noted at the outset of this article, cognitive neuroscience has made considerable advances in recent years; and given the law’s focus on matters of the mind, it is hardly surprising that many commentators have suggested that neuroscience has much to offer the law.

Judges themselves have expressed similar interests. For example, a few years ago, in connection with preparing, along with Professor Michael S. Gazzaniga (the “father” of modern cognitive neuroscience) and various other contributors, A Judge’s Guide to Neuroscience, I did an informal survey of federal judges and found that they had a host of questions about the possible impact of neuroscience on the law, ranging from “What is an fMRI?” to “Does neuroscience give us new insights into criminal responsibility?” and much in between. But when we consider the actual impact of modern neuroscience on the law thus far, the record is mixed.

Most attempts to apply new advances in neuroscience to individual cases either have been rejected by the courts or have proven of little value. Consider, for example, the so-called “neuroscientific lie detector.” While a “scientific” way to determine if a witness is lying or telling the truth would seemingly be of great value to the legal system, the existing lie-detecting machine, the polygraph—which presupposes that telling an intentional lie is accompanied by increased sweating, a rising pulse rate, and the like—has proven notoriously unreliable and is banned from almost all courts. But relying on the not unreasonable hypothesis that devising an intentional lie involves different mental activities than simply telling the truth, some neuroscientists have hypothesized that certain patterns of brain activity correlated with lying might be detected.

Some early studies seemed promising. While undergoing brain scans, subjects were asked to randomly lie in response to simple questions (for example, “Is the card you are looking at the ace of spades?”), and the brain activity when they lied was greater than, and different from, the brain activity when they told the truth. But there were problems with these studies, both technical and theoretical. For example, while the subjects were supposed to remain perfectly still in the brain scanner, even very small (and not easily detected) physical movements led to increased brain activity appearing on the scans, thus confounding the results.

More significantly, these studies did nothing to resolve the question of whether the increased brain activity was the result of lying or was the result of what the subjects were actually doing cognitively, i.e., following orders to randomly make up lies. In these and numerous other ways, the studies were far removed from what might be involved in a court witness’s lying.

Nevertheless, on the basis of the early studies, two companies were formed to market “neuroscientific lie detection” to the public, and one of them tried to introduce its evidence in court in two cases. In one case, in New York state court, the evidence was held inadmissible on the ground that it “infringed” the right of the jury to determine credibility. This rationale is not very convincing. Jurors have no special competence to determine the truth: they do it in the same imperfect way that everyday citizens do in everyday life. So if there were truly a well-tested, highly reliable instrument for helping them determine the truth, its results would be as welcome as, say, DNA evidence is today in assisting juries to decide guilt or innocence.

However, in the second case, US v. Semrau, in federal court in Tennessee, the evidence was also held inadmissible, but this time on much more convincing grounds. The case involved a psychologist who owned two businesses that provided government-reimbursed psychological services to nursing home patients, and the charge was that he had manipulated the billing codes so as to overcharge the government by $3 million. His defense was that the mistakes in coding were unintentional. In that regard, defense counsel sought to introduce testimony from the neuroscientist founder of one of the companies marketing neuroscientific lie detection, to the effect that he had placed the defendant in a brain scanner and asked him questions of the form “Did you intend to cheat the government when you coded and billed these services?” According to the expert, the brain scan evidence showed that the defendant was being honest when he answered such questions “No.”

The expert conceded that during one of the three sessions when these questions had been put to the defendant, the brain scan results were consistent with lying; but the expert contended that this was the result of the defendant’s being “fatigued” in ways he was not at the other two sessions. The judge found, however, that this supposed “anomaly” was actually indicative of the doubts already expressed in the scientific literature about the validity and reliability of neuroscientific lie detection. Accordingly, he excluded the evidence as unreliable.

As this example suggests, cognitive neuroscience, despite the considerable publicity it has received, is still not able to produce well-tested, reliable procedures for detecting and measuring specific mental states in specific individuals. If the legal system were to embrace such evidence before it was far better developed than it is at present, the same kinds of dire “mistakes” that occurred in the situations involving eugenics, lobotomies, psychoanalysis, and recovered memories might well again occur.

This is not to say, however, that the considerable advances in neuroscience over the past few decades are without any relevance to the legal system. Even if it is not yet ready to resolve individual cases, some of its more general conclusions may be helpful in making broad policy decisions of consequence to the legal system. Consider, for example, the common observation that adolescents have less control over impulses than adults. Neuroscience helps explain why this occurs. To put it simply, at puberty several parts of the brain rapidly enlarge. This includes the parts associated with impulsive activity and, a bit later, the parts associated with control over impulses. But the connections between these parts only slowly improve (through “myelination”) to the point where the communication between the enlarged areas can be sufficiently swift to hold in check new impulsive urges of adolescents. On average, this takes more than a year, and the Supreme Court has cited studies of this kind in reaching its conclusions that the death penalty and life imprisonment are unconstitutional when imposed on those below the age of eighteen.

On the other hand, neuroscience is not yet close to developing a test for determining the precise degree to which impulse control has developed in any given adolescent—so that, for example, a prosecutor could not fairly rely on neuroscience to determine whether any particular adolescent should be prosecuted as an adult or as a juvenile. Again, the point is that neuroscience is at a stage where it may be able to provide helpful insights to the general development of the law, but not much, if anything, in the way of evidence about individuals in particular cases.

Still, neuroscience may be helpful to the development of legal policy in dealing with the problem of drug addiction—a problem that consumes much of both state and federal criminal systems. The United States spends billions of dollars each year both punishing and treating drug addicts, but the success rate of even the most sophisticated treatment programs, as measured by the former addicts who do not resume drug use over the period of the program, is rarely better than 50 percent, and considerably lower if measured by relapses after the program is over.

Why is this so, since most addicts want to quit? A considerable amount of neuroscientific research over the past few decades has been addressed to this problem, and while much of the research remains controversial or simply inconclusive, at least some of the results are now generally accepted in the neuroscientific community. These include, for example, the finding that drug addiction actually alters the way the synapses in certain areas of the brain operate (so that an addict has to have his drug just to feel “normal”) and also the finding that the cravings associated with drug addiction will over time come to be generated by secondary cues (ranging from the sight of a needle to a return to a party scene).

These findings suggest, first, that simply getting an addict drug-free and over the symptoms of withdrawal is unlikely to be sufficient to prevent re-addiction in many cases; and second, that only very long-term programs, including drug testing over many years and training and retraining addicts to avoid the cues that will trigger a relapse, are likely to be truly effective. (A different approach, in the form of a vaccine, is also possible, but insufficiently well developed to be considered here.)

Such programs would be very expensive, though probably not as expensive as the combined cost of incarceration and short-term treatment programs now imposed on some drug-addicted individuals again and again. Nor would such programs work in every case, for once again, the neuroscience says more about the population as a whole than any given individual. But if nothing else, the neuroscience about the subject does seem to indicate not just that the present approaches are unlikely to be successful overall, but also why this is so.

Here, as in the case of adolescent impulse-control, the neuroscience has already had some modest impact on the courts. Indeed, as early as 1962, the Supreme Court, in deciding that it was unconstitutional to criminalize the status of being a drug addict, expressly adopted the position that addiction was an “illness” rather than a form of criminal misconduct (although this decision meant little when, a decade later, most states made even mere drug possession a serious felony). But the neuroscience has also been used to support the creation of drug courts, the success record and fairness of which remain controversial.

Neuroscience is mind-boggling. It is developing at a rapid pace, and may have more to offer the legal system in the future. For now, however, the lessons of the past suggest that, while neuroscientific advances may be a useful aid in evaluating broad policy initiatives, a too-quick acceptance by the legal system of the latest neuroscientific “discoveries” may be fraught with danger.

*See Frederick Crews, “The Revenge of the Repressed,” The New York Review, November 17, 1994, and December 1, 1994.