Noam Chomsky’s long-standing engagement with American politics—recently described in these pages*—has tended to obscure his seminal work as a scholar. Yet for him, academe has been anything but a tranquil retreat from the hurly-burly of public life. His principal (but far from exclusive) field of linguistics was already a hotbed of controversy long before he arrived on the scene; so much so that as early as 1866 the Linguistic Society of Paris specifically banned discussion of the origin of language as being altogether too disruptive for the contemplative atmosphere of a learned association. And on assuming an assistant professorship of linguistics at MIT in 1956, Chomsky lost no time in throwing yet another cat among the pigeons.

When Chomsky entered the field of linguistics it was widely assumed that the human mind began life as a blank slate, upon which later experience was written. Accordingly, language was seen as a learned behavior, imposed from the outside upon the infants who acquire it. This was certainly the view of the renowned behavioral psychologist B.F. Skinner, and the young Chomsky gained instant notoriety by definitively trashing Skinner’s 1957 book Verbal Behavior in a review published in the journal Language in 1959. In place of Skinner’s behaviorist ideas, Chomsky substituted a core set of beliefs about language that he had already begun to articulate in his own 1957 book, Syntactic Structures.

In stark contrast to the behaviorist view, Chomsky saw human language as entirely unique, rather than as an extension of other forms of animal communication. And for all that humans were notoriously linguistically diverse, he also insisted that all languages were variants on one single basic theme. What is more, because all developmentally normal children rapidly and spontaneously acquire their first language without being specifically taught to do so (indeed, often despite parental inattention), he saw the ability to acquire language as innate, part of the specifically human biological heritage.

Delving deeper, he also viewed most basic aspects of syntax as innate, leaving only the peripheral details that vary among different languages to be learned by each developing infant. Accordingly, as Chomsky then saw it, the differences among languages are no more than differences in “externalization.” Whatever the biological element might have been that underwrote the propensity for language (and it was not necessary to know exactly what that was to recognize that it exists), the “Language Acquisition Device,” the basic human facility that allows humans and nothing else in the living world to possess language, imposed a set of constraints on language learning that provided the backbone of a hard-wired “Universal Grammar.”

Early formulations of Chomsky’s theory further saw language as consisting both of the “surface structures” represented by the spoken word and of the “deep structures” that reflect the underlying concepts formed in the brain. The deeper meanings and the surface sounds were linked by a “transformational grammar” that governed the conversion of the brain’s internal output into the externalized sounds of speech.

Over the last half-century or so, much of what Chomsky initially argued has become uncontroversial in linguistics. Most significantly, only a few chimp-language enthusiasts (whose ideas are roundly trampled in the book under review) would now reject the idea that, among living creatures, the full-blown ability to acquire and express language is both an innate quality and a uniquely human specialization. But many of his detailed proposals have had deeply polarizing effects, to the extent that a substantial coterie of colleagues view Chomsky and his followers with the kind of suspicion that in other settings is accorded to members of cults.

What is more, with the passage of time, and in collaboration with a variety of colleagues (full disclosure: your reviewer is marginally among them), Chomsky himself has significantly modified his views, both about those features that are unique to language—and that thus have to be accounted for in any theory of its origin—and about its underlying mechanism. Since the 1990s, Chomsky and his collaborators have developed what has come to be known as the “Minimalist Program,” which seeks to reduce the language faculty to the simplest possible mechanism. Doing this has involved ditching niceties like the distinction between deep and surface structures, and concentrating instead on how the brain itself creates the rules that govern language production.

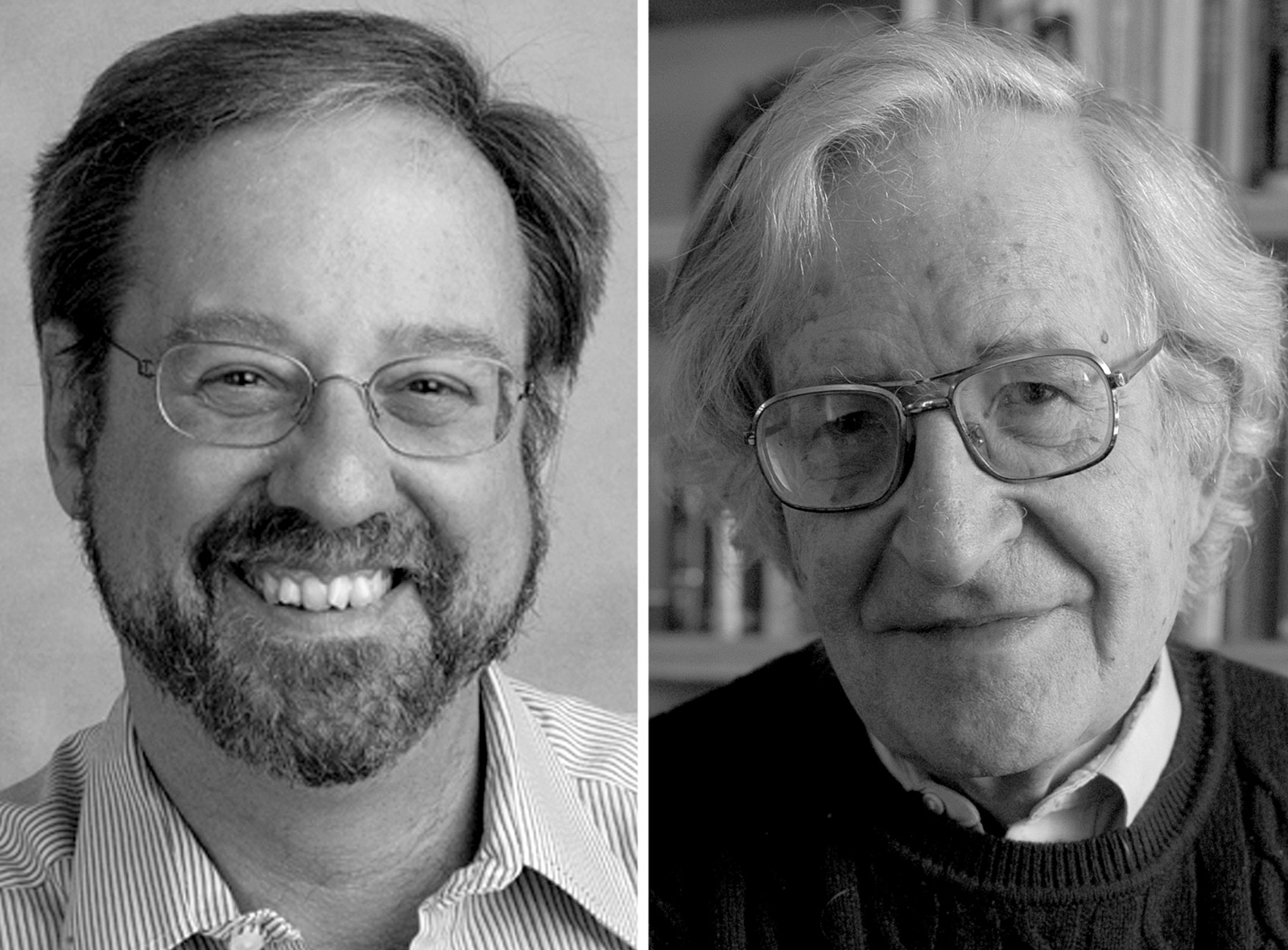

Chomsky’s latest general statement of his slimmed-down hypothesis of language now comes in an attractive short book coauthored with his colleague Robert Berwick, a computational cognition expert at MIT. Why Only Us: Language and Evolution is a loosely connected collection of four essays that will fascinate anyone interested in the extraordinary phenomenon of language. It argues that “the basic engine that drives language syntax…is far simpler than most would have thought just a few decades ago.”


According to Berwick and Chomsky, a single operation that they call “Merge” (basically, the simplest form of the process of “recursion” that Chomsky used to view as the bedrock of language) is sufficient for building the entire hierarchical structure that they see as required to produce human language syntax. In their compact definition, Merge “takes any two syntactic elements and combines them into a new, larger hierarchically structured expression,” a notion that is introduced early in the book and is then enlarged upon in some detail in later essays. In the first account they give, they write:

For example, given read and books, Merge combines these into {read, books}, and the result is labeled via minimal search, which locates the features of the “head” of the combination, in this case, the features of the verbal element read. This agrees with the traditional notion that the constituent structure for read books is a “verb phrase.” This new syntactic expression can then enter into further computations, capturing what we called earlier the Basic Property of human language.
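Purely as an illustration of the set-building idea, and not as Berwick and Chomsky's own formalism, the following Python sketch treats Merge as the formation of an unordered two-member set. It replaces labeling by minimal search with a crude stand-in: look one level down for a lexical item and take its category feature. The category names, the `SELECTS` table used to decide which of two bare words heads the pair, and every identifier here are hypothetical assumptions made for the sketch.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import FrozenSet, Union


@dataclass(frozen=True)
class Lex:
    """A lexical item ("computational atom"): a word form plus a category feature."""
    form: str
    category: str  # hypothetical category labels such as "V", "N", "T"


@dataclass(frozen=True)
class Phrase:
    """The output of Merge: an unordered set of two syntactic objects, plus a label."""
    parts: FrozenSet[SyntacticObject]
    label: str


SyntacticObject = Union[Lex, Phrase]

# Hypothetical selection table (an assumption for this sketch): when two bare
# lexical items are merged, the one that selects the other's category heads the pair.
SELECTS = {"V": {"N"}, "T": {"V"}}


def find_label(a: SyntacticObject, b: SyntacticObject) -> str:
    """Crude stand-in for labeling by minimal search: look only one level
    down for a lexical item (a head) and return its category feature."""
    heads = [x for x in (a, b) if isinstance(x, Lex)]
    if len(heads) == 1:
        # {head, phrase}: the single lexical item is found first and labels the set.
        return heads[0].category
    if len(heads) == 2:
        # {head, head}: assume the item that selects the other is the head.
        h1, h2 = heads
        for head, other in ((h1, h2), (h2, h1)):
            if other.category in SELECTS.get(head.category, set()):
                return head.category
    raise ValueError("minimal search finds no unique head")


def merge(a: SyntacticObject, b: SyntacticObject) -> Phrase:
    """Merge: combine any two syntactic objects into a new, larger, labeled set."""
    return Phrase(frozenset({a, b}), find_label(a, b))


read, books, will = Lex("read", "V"), Lex("books", "N"), Lex("will", "T")

vp = merge(read, books)  # {read, books}, labeled by the verb
tp = merge(will, vp)     # the result re-enters Merge: {will, {read, books}}

print(vp.label, tp.label)  # V T
```

Because `tp` contains `vp` as one of its two members, the output of one application of Merge is simply fed back in as an input to the next. Repeating the step with further items yields ever deeper hierarchies, which is the sense in which a single, very simple operation can generate unbounded structure.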

Reducing the essence of language in this way, then, allows Berwick and Chomsky to divide the problem of how language evolved into three distinct parts: first, the internal computational system for hierarchically structured expressions (such as “read books”); second, sensory and motor systems for speech production; and finally, the underlying conceptual system, in other words the complex of thought on which language depends. Helpfully, the overall system they outline works with almost any sensory modality, and this explains how spoken and signed languages contrive to map onto each other so closely.

Berwick and Chomsky go on to suggest that the biology underwriting the Merge operation emerged as the result of a “minor mutation” in a member of an early modern human population. As judged from the archaeological record, this event occurred in East Africa some 80,000 years ago, and it produced a neural novelty that could yield “structured expressions” from “computational atoms” to provide a “rich language of thought.” Only at a later stage was “the internal language of thought…connected to the sensorimotor system” that makes speech possible. In human evolution, then, the existence of language for thought preceded that of spoken language: a currently controversial idea, albeit with a respectable pedigree that traces back to the writings of John Locke in the seventeenth century.

At this point, any reviewer with pretensions to objectivity is obliged to point out that the press has greeted this scenario with some derision. The Economist, for one, found much to chortle about:

Why would this be of any use? No one [other than the original possessor] had Merge. Whom did Prometheus talk to? Nobody, at least not using Merge…. Rather, it let Prometheus take simple concepts and combine them…in his own head…. Only later…did human language emerge…. Many scholars find this to be somewhere between insufficient, improbable and preposterous.

The condescending attitude reminds one of the tired old joke about Dolly Pentreath, the last native speaker of Cornish, who died in 1777: “Nobody knows who she spoke it to.”

Still, if we are prepared to put the issue in a larger context, there is plenty in Berwick and Chomsky’s argument that deserves close consideration, and that fits very well with what we know about the circumstances in which language is likely to have arisen. Today, opinion on the matter of language origins is still deeply divided. On the one hand, there are those who feel that language is so complex, and so deeply ingrained in the human condition, that it must have evolved slowly over immense periods of time. Indeed, some believe that its roots go all the way back to Homo habilis, a tiny-brained hominid that lived in Africa not far short of two million years ago. On the other, there are those like Berwick and Chomsky who believe that humans acquired language quite recently, in an abrupt event. Nobody is in the middle on this one, except to the extent that different extinct hominid species are seen as the inaugurators of language’s slow evolutionary trajectory.

That this deep dichotomy of viewpoint has been able to persist (not only among linguists, but among paleoanthropologists, archaeologists, cognitive scientists, and others) for as long as anyone can remember is due to one simple fact: at least until the very recent advent of writing systems, language has left no trace in any durable record. Whether any early humans possessed language, or didn’t, has had to be inferred from indirect proxy indicators. And views have diverged greatly on the matter of what is an acceptable proxy.

One widely used putative proxy is the manufacture of stone tools, an activity that inaugurated the archaeological record some two and a half million years ago, or possibly more. It is argued that explaining to someone else how to make a stone tool (or, in some versions, an elaborate stone tool) is such a complicated matter that language had to be involved in passing the tradition along. But this seems contradicted by an interesting experiment that some Japanese researchers undertook several years ago. They divided up a class of undergraduates, none of whom knew anything about stone tools, and taught one half to make a relatively fancy kind of stone implement by demonstration and elaborate verbal explanation. The other half they taught by visual demonstration alone. And they discovered that there was basically no difference in the speed or the efficiency with which the two groups learned.


What’s more—and this was the most amazing thing to me—in both groups there were students who never quite got it. Making any kind of stone tool evidently takes a lot of smarts of some kind, but not necessarily of the kind specific to all language-wielding humans today. Our predecessors were very evidently not just less intelligent versions of ourselves, as we have so often been tempted to suppose.

As a result, we have to look for a durable proxy that is more closely related to language than the manufacture of stone tools appears to be. So why not start with the kind of language-associated intelligence that all modern humans have today? What we uniquely do (or at least what we have no way of showing any other living creatures do) is deconstruct our internal and external worlds into vocabularies of abstract symbols that we can then combine and recombine in our minds, to make statements not only about those worlds as they are, but as they might be. We are able to do this because pathways in our brains allow us to make associations between the outputs of various brain structures that are apparently not similarly connected in the brains of our closest relatives, the apes.

Of course, the apes are nonetheless extraordinarily intelligent creatures, who can recognize and combine symbols in simple statements such as “take…red…ball…outside.” But the algorithm involved is a simple additive one, and it is ultimately limiting because it is hard to keep track of ever-longer strings of symbols. In contrast, the algorithm associated with human language (whatever it may be) is apparently unlimited, because through the use of simple rules a limited number of symbols can be manipulated to form an infinity of different statements.

Notice that the metaphor for human cognitive function I have chosen steers dangerously close to a description of language. That closeness is reflected in Locke’s belief that words “stand for nothing but the ideas in the mind of him that uses them,” and in the increasing attention linguists are paying to the notion that, as the linguist Wolfram Hinzen has put it, “language and thought are not two independent domains of inquiry.” If that is true, perhaps the best proxies we can seek for language in the human archaeological record are objects or activities that reflect the working of symbolic human minds: minds capable of envisioning that the world could be otherwise than it is at this moment.

So what do we find when we look at the material record? It is pretty clear by now that the first stone tools were made by anatomically primitive “australopiths” with small (ape-sized) brains, big faces, and rather archaic limb proportions. But at the same time they appear to have been much more flexible and generalist in their food-seeking behaviors than living apes are; and certainly by the time they began to make stone tools, they had crossed a cognitive boundary within which the apes are still confined. Nonetheless, as I’ve noted, the mere act of stone tool making is no indicator of modern human-style symbolic cognition; and intelligent as these early ancestors clearly were, there is no reason to believe that they were even anticipating our peculiar modern mode of thought.

The same applies to the earliest members of our genus Homo, who show up in the fossil record a little under two million years ago. They were taller than the australopiths and had body proportions basically like our own, indicating that they had become committed to the expanding savannah environments of Africa, far away from the protection of the ancestral forests. Yet to begin with, at least, they made crude stone tools just like those the australopiths had made: sharp flakes, bashed from one lump of stone using another. Only later did the new humans start regularly manufacturing stone “handaxes,” the first tools made to a regular (teardrop) shape. And after that, tools of the new kind continued to be made in Africa (with only minor refinement along the way) until around 160,000 years ago. Cultural monotony was the order of the day, and within the tenure of “early Homo” there are virtually no artifacts known that could be considered “symbolic” in nature. Clever these hominids undoubtedly were by the standards prevailing at the time; but once again, there is no unequivocal evidence that they were thinking as we do, even in an anticipatory form.

Around 300,000 years ago a conceptually new type of stone implement began to be made in both Africa and Europe. This was the “prepared-core” tool, in which a nucleus of good-quality stone was elaborately shaped with numerous blows until a final strike would detach a more-or-less finished implement, such as a scraper or a point. This conceptual advance was made within the tenure of a modestly large-brained species called Homo heidelbergensis that is also associated with some other important conceptual advances, among them the building of the first artificial shelters and the earliest routine domestication of fire. But significantly, in this time range there is only one putative—and hugely arguable—symbolic artifact known: a vaguely anthropomorphic lump of rock from the Golan Heights that may have been slightly modified to look more human. Certainly, symbolic reasoning was not a routine part of the behavioral repertoire of Homo heidelbergensis.

Homo neanderthalensis, the earliest hominid with a brain as big as ours, emerged about 200,000 years ago; and in a hugely extensive archaeological record, it furnished only the most slender and sporadic evidence for symbolic behaviors. Yet more amazingly, the exact same was true for the very first Homo sapiens. Anatomically modern humans appeared in Ethiopia at about the same time the Neanderthals made their debut in Europe, and at first they left a comparable material record. It is only at about 100,000 years ago that we begin to find—again in Africa—evidence of hominid activities that were qualitatively different from anything that had gone before. Suddenly, Homo sapiens was making items such as shell beads destined for bodily decoration—which invariably make a statement—as well as explicitly symbolic objects such as ochre plaques engraved with deliberate geometric designs that clearly held meaning for their makers.

At about the same time complex multistage technologies appeared—such as the heat treatment of silcrete, a silica-cemented soil deposit that otherwise makes indifferent raw material for tool-making—that clearly demanded complex forward planning. Even more importantly, from this point on, the signal in the archaeological record, far from being one of stability over long periods, became one of continuous change and refinement. Soon the era of figurative Ice Age art had arrived in both Europe and eastern Asia, announcing more clearly than anything else could the arrival of the fully fledged human sensibility.

Clearly, something revolutionary had happened to our species, well within the tenure of Homo sapiens as an anatomical entity. All of a sudden, humans were manipulating information about the world in an entirely unprecedented way, and the signal in the archaeological record shifted from being one of long-term stability to one of constant change. Hard on the heels of the first signs of significant behavioral change, Homo sapiens had left Africa and taken over the world (displacing all the hominid competition in the process), settled life had begun, cities had started to form, and by fifty years ago we were already standing on the moon.

The clear implication is that something had abruptly changed the way in which humans handled information. Most likely, the biological underpinnings for this change (both neural and vocal) were established in the events that gave rise to our species as a (very) distinctive anatomical entity some 200,000 years ago. But the new potential then lay fallow until it was released by a necessarily behavioral stimulus. Most plausibly, that stimulus was the spontaneous invention of language, in an isolated African population that already, for reasons not fully understood, possessed a “language-enabled” brain. One can readily imagine—at least in principle—how a group of hunter-gatherer children in some dusty corner of Africa might have begun to attach spoken names to objects and feelings, setting up a feedback loop between language and thought. This innovation would then have spread rapidly through a population already biologically predisposed to acquire it.

That is, of course, just one construction of the facts. But it accommodates better than anything else what we know from archaeology. And no other scenario currently available from linguistics fits the archaeological facts better than the essentials of Berwick and Chomsky’s vision. Something occurred in human evolution, very abruptly and very recently, that radically changed the way in which human beings interact with the world around them. It is extremely hard to imagine that the beings who initiated that change were not users of language, and there is no substantive evidence that their predecessors were. So we need an explanation for the abrupt emergence of language; and the one Berwick and Chomsky provide is the most plausible such explanation currently on offer.

Certainly, the details need fine-tuning. For example, it is more plausible in terms of evolutionary process that effective language and symbolic thought emerged simultaneously, in a feedback process involving an already preadapted brain (and with the modern vocal tract necessary for speech conveniently already in place), than that an otherwise invisible mutation promoting an internal Merge was only later recruited for language by the sensorimotor system. But then science is always a progress report; and just as what we believed yesterday always looks quaint today, what we believe today will inevitably look hopelessly naive tomorrow. If we keep that in mind, what Berwick and Chomsky have to say looks like progress; and it has the added advantage of being a good read.

*See Kenneth Roth’s review of Chomsky’s Who Rules the World? (Metropolitan, 2016), The New York Review, June 9, 2016.