This September, Uber, the app-summoned taxi service, launched a fleet of driverless Volvos and Fords in the city of Pittsburgh. Google has had its own autonomous vehicles on the roads of Mountain View, California; Austin, Texas; Kirkland, Washington; and Phoenix, Arizona, for a few years, gathering data and refining its technology. Uber’s Pittsburgh venture, however, marks the first time members of the American public can hail such cars. (The world’s first autonomous taxi service began offering rides in Singapore at the end of August, edging out Uber by a few weeks.)

Pittsburgh, with its hills, narrow side streets, snow, and many bridges, may not seem like the ideal venue to deploy cars that can have difficulty navigating hills, narrow streets, snow, and bridges. But the city is home to Carnegie Mellon’s renowned National Robotics Engineering Center, and in the winter of 2015, Uber lured away forty of its researchers and engineers for its new Advanced Technologies Center, also in Pittsburgh, to jump-start the company’s entry into the driverless car business.

Uber’s autonomous vehicles have already begun picking up passengers, but they still have someone behind the wheel in the event the car hits a snag. It seems overstated to call this person a driver since much of the time the car will be driving itself. Uber’s ultimate goal, and the goal of Google and Lyft and Daimler and Ford and GM and Baidu and Delphi and Mobileye and Volvo and every other company vying to bring autonomous vehicles to market, is to make that person redundant. As Hod Lipson and Melba Kurman make clear in Driverless: Intelligent Cars and the Road Ahead, the question is not if this can happen, but when and under what circumstances.

The timeline is a bit fuzzy. According to a remarkably bullish report issued by Morgan Stanley in 2013, sometime between 2018 and 2022 cars will have “complete autonomous capability”; by 2026, “100% autonomous penetration” of the market will be achieved. A study by the market research firm IHS Automotive predicts that by 2050, nearly all vehicles will be self-driving; a University of Michigan study says 2030. Chris Urmson, who until recently was project manager of Google’s autonomous car division, is more circumspect.

“How quickly can we get this into people’s hands? If you read the papers, you see maybe it’s three years, maybe it’s thirty years. And I am here to tell you that honestly, it’s a bit of both,” he told an audience at Austin’s South by Southwest Festival in March. “This technology,” Urmson went on, “is almost certainly going to come out incrementally. We imagine we are going to find places where the weather is good, where the roads are easy to drive—the technology might come there first. And then once we have confidence with that, we will move to more challenging locations.”

As anyone looking to buy a new car these days knows, a number of technologies already cede certain tasks to the vehicle. These include windshield wipers that turn on when they sense rain, brakes that engage automatically when the car ahead is too close, blind-spot detectors, drift warnings that alert the driver when the car has strayed into another lane, cruise control that maintains a set distance from other vehicles, and the ability of the car to parallel park itself. Tesla cars go further. In “autopilot” mode they are able to steer, change lanes, and maintain proper speed, all without human intervention. YouTube is full of videos of Tesla “drivers” reading, playing games, writing, and jumping into the back seat as their cars carry on with the mundane tasks of driving. And though the company cautions drivers to keep their hands on the steering wheel when using autopilot, one of the giddiest hands-free Tesla videos was posted by Talulah Riley, the (then) wife of Elon Musk, the company’s CEO.

These new technologies are sold to consumers as safety features, and it is easy to see why. Cars that slow down in relation to the vehicle in front of them don’t rear-end those cars. Cars that warn drivers of lane-drift can be repositioned before they cause a collision. Cars that park themselves avoid bumping into the cars around them. Cars that sense the rain and clear it from the windshield provide better visibility. Paradoxically, though, cars equipped with these features can make driving less safe, not more, because of what Lipson and Kurman call “split responsibility.” When drivers believe the car is in control, their attention often wanders or they choose to do things other than drive. When Tesla driver Joshua Brown broadsided a truck at seventy-four miles per hour last May, he was counting on the car’s autopilot feature to “see” the white eighteen-wheeler turning in front of it in the bright Florida sun. It didn’t, and Brown was killed. When the truck driver approached the wreckage, he told authorities, a Harry Potter video was still playing in a portable DVD player.

While Brown’s collision could be considered a one-off—or, more accurately, a three-off, since there have been two other accidents involving Tesla’s autopilot software—preliminary research suggests that these kinds of collisions soon may become more frequent as more cars become semiautonomous. In a study conducted by Virginia Tech in which twelve subjects were sent on a three-hour test drive around a track, 58 percent of participants in cars with lane-assist technology watched a DVD while driving and 25 percent used the time to read. Not everyone was tempted by the videos, magazines, books, and food the researchers left in the cars, but enough were that overall safety for everyone on the road diminished. As Lipson and Kurman point out, “Clearly there’s a tipping point at which autonomous driving technologies will actually create more danger for human drivers rather than less.” This is why Google, most prominently, is aiming to bypass split responsibility and go directly to cars without steering wheels and brake pedals, so that humans will have no ability to drive at all.

For generations of Americans, and young Americans especially, driving and the open road promised a kind of freedom: the ability to light out for the territory, even if the territory was only the mall one town over. Autonomous vehicles also come with the promise of freedom, the freedom of getting places without having to pay attention to the open (or, more likely, clogged) road, and with it, the freedom to sleep, work, read e-mail, text, play, have sex, drink a beer, watch a movie, or do nothing at all. In the words of the Morgan Stanley analysts, whose enthusiasm is matched by advocates in Silicon Valley and cheerleaders in Detroit, driverless vehicles will deliver us to a “utopian society.”

That utopia looks something like this: fleets of autonomous vehicles (call them taxi bots), owned by companies like Uber and Google and deployed on demand, that will largely eliminate the need for private car ownership. (Currently, most privately owned cars sit idle for most of the day, simply taking up space and depreciating in value.) Fewer privately owned vehicles will result in fewer cars on the road overall. With fewer cars will come fewer traffic jams and fewer accidents. Fewer accidents will enable cars to be made from lighter materials, saving on fuel. They will be smaller, too, since cars will no longer need to be armored against one another.

With less private car ownership, individuals will be freed of car payments, fuel and maintenance costs, and insurance premiums. Riders will have more disposable income and less debt. The built environment will improve as well, as road signs are eliminated—smart cars always know where they are and where they are going—and parking spaces, having become obsolete, are converted into green spaces. And if this weren’t utopian enough, the Morgan Stanley analysts estimate that switching to full vehicle autonomy will save the United States economy alone $1.3 trillion a year.

There are many assumptions embedded in this scenario, the most obvious being that people will be willing to give up private car ownership and ride in shared, driverless vehicles. (Depending on the situation, sharing means either using cars owned by fleet companies in place of privately owned vehicles, or riding in fleet-owned cars with other passengers, most likely strangers heading to nearby destinations.) There is no way to know yet if this will happen. In a survey by the Insurance Information Institute last May, 55 percent of respondents said they would not ride in an autonomous vehicle. But that could change as self-driving cars become more commonplace, and as today’s young adults, who have been slower than their parents’ generation to get driver’s licenses and own cars, and who have been early adopters of car-sharing businesses like Zipcar and Uber, become the dominant demographic.

Yet even if car-sharing membership continues its upward trajectory, these services may remain marginal. (Predictions are that 3.8 million Americans will be members of car-sharing programs by 2020, about 1 percent of the population.) And while it seemed as though the millennial generation was eschewing automobile ownership (the car industry called it the “go-nowhere” generation), its reluctance to buy cars may have had more to do with a weak economy (student debt, unemployment, and underemployment, especially) than with desire. More millennials are buying cars now than ever before, and car sales overall are increasing. If Singapore, which has attempted to curb private car ownership by imposing heavy taxes, licensing fees, and congestion pricing, and by providing free public transit during the morning commute, is any guide, moving the public away from private car ownership will be challenging: despite all these efforts, subway use in Singapore has gone down, not up. It is far from clear whether the country’s new self-driving taxi service will be able to shift behavior and buying patterns.

There is little doubt that so far, in carefully chosen settings, where the weather is temperate, the roads are well marked, and the environment is mapped in exquisite detail, fully autonomous vehicles are safer than cars driven by humans. There were over 35,000 traffic fatalities in the United States last year, and over six million accidents, almost all due to human error. Since they were introduced in 2009, Google’s self-driving cars have logged more than 1.5 million miles and have been involved in eighteen accidents, with only one considered to be the fault of the car. (At the same time, Google’s human copilots have had to take over hundreds, and possibly thousands, of times.)

But Google’s cars are being tested in relatively tame environments. The crucial exam begins when they are let loose, to go hither and yon, on roads without clear line markers, in snowstorms and ice storms, in heavily forested areas, and in places where GPS signals are weak or nonexistent. As Chris Urmson told that South by Southwest audience, this experiment is an unlikely prospect in the near term. But his remarks came before Baidu, Google’s Chinese rival, announced that it would have autonomous cars for sale in two years. It is a bold claim since, according to Lipson and Kurman, “to date, no robotic operating system can claim to have fully mastered three crucial capabilities: real-time reaction speed, 99.999% reliability, and better than human-level perception.”

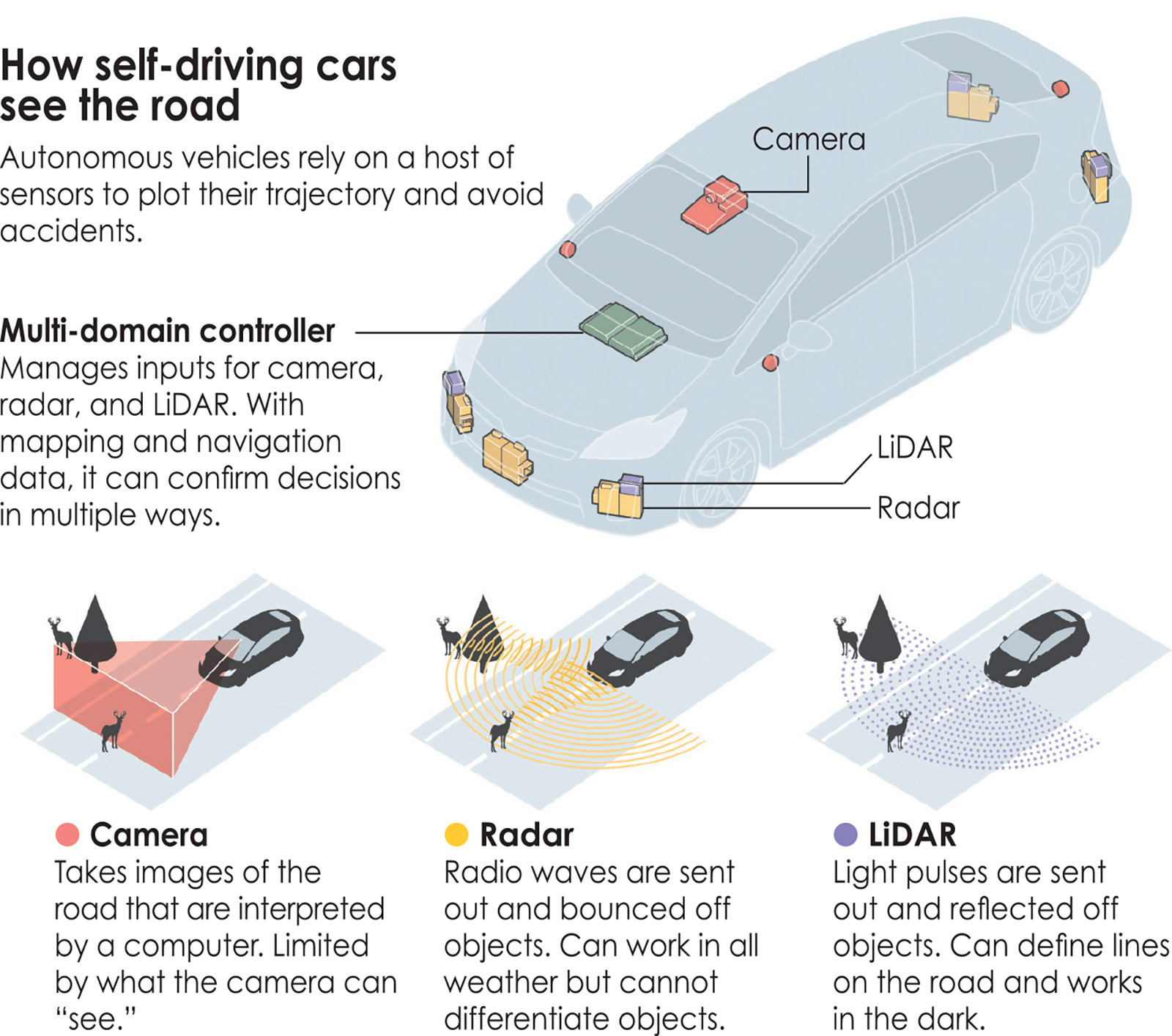

Still, the artificial intelligence guiding vehicles to full autonomy gets better and better the more miles those vehicles drive and the more terrain they cover, and this is by design. As data is fed into an onboard computer from a car’s many sensors and cameras, that data is parsed by an algorithm looking for statistical patterns. Those patterns enable the computer to instantaneously build a model of possible outcomes and instruct the car to proceed accordingly. The more data the algorithm has to work with, whether from GPS, radar, LIDAR (laser radar), sonar, an inertial measurement unit (IMU), or visual cues matched to a high-definition map of topographical and geographical features, the more accurate its predictions become. The algorithm also benefits from “fleet learning”: acquiring data, and thus “experience,” from other vehicles equipped with the same operating system. (Autonomous cars can also communicate with each other on the road.)
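
The claim that more data yields more accurate predictions can be illustrated with a textbook technique, inverse-variance weighting, which combines independent, noisy measurements of the same quantity. What follows is a minimal sketch in Python under loudly simplified assumptions: the sensor names and noise figures are hypothetical, the readings are assumed independent and unbiased, and nothing here reflects the software Uber, Google, or any carmaker actually runs.

```python
from dataclasses import dataclass


@dataclass
class Reading:
    """One sensor's estimate of the distance to an obstacle ahead, in meters."""
    source: str        # hypothetical label, e.g. "lidar" or "radar"
    distance_m: float  # the sensor's distance estimate
    variance: float    # assumed noise variance of that estimate (must be > 0)


def fuse(readings: list[Reading]) -> tuple[float, float]:
    """Combine independent readings by inverse-variance weighting.

    Each reading is weighted by 1 / variance, so noisier sensors count for less.
    Returns (fused_distance_m, fused_variance). The fused variance is never
    larger than the smallest input variance, and each added reading shrinks it.
    """
    if not readings:
        raise ValueError("need at least one reading")
    if any(r.variance <= 0 for r in readings):
        raise ValueError("variances must be positive")
    weights = [1.0 / r.variance for r in readings]
    total = sum(weights)
    fused = sum(w * r.distance_m for w, r in zip(weights, readings)) / total
    return fused, 1.0 / total


if __name__ == "__main__":
    # Hypothetical readings from three sensors on one car.
    own_car = [
        Reading("lidar", 20.0, 0.04),
        Reading("radar", 20.5, 0.25),
        Reading("camera", 19.0, 1.0),
    ]
    print(fuse(own_car[:1]))  # lidar alone
    print(fuse(own_car))      # all three sensors: smaller variance
```

Run as a script, the lidar reading alone yields a variance of roughly 0.04, while the three sensors together yield roughly 0.033, a small but real tightening. Appending readings shared by other vehicles would shrink the variance further by the same arithmetic, though fleet learning in practice is far more elaborate than averaging a single measurement.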

This artificial intelligence enables the car to determine, for example, if the obstruction ahead is a small child or a cardboard box that has blown into the road. AI will teach it, by repetition, that the category “people” includes both those who wear trousers and those who wear skirts, those who are small and those who are tall, those who walk alone and those who walk hand-in-hand. The car will know to stop when the traffic light is red, and not to obey the green traffic light when a traffic cop is standing in the road, gesturing for it to stop.

At least that’s the ideal. A car’s “perception” can be stymied by mud on its cameras and by environments that do not have many distinguishing landmarks. Complex situations, like what to do when a squirrel runs into the road, followed by a dog, followed by a child, are beyond its competence. As Lipson and Kurman observe, “the technological last mile in driverless-car design is the development of software that can provide reliable artificial perception. If history is any guide, this last mile could prove to be a long haul.”

There are other issues, too, that are likely to slow, though not stop, the widespread adoption of autonomous vehicles even if these technical obstacles can be overcome. Insurance is one. Now that the National Highway Traffic Safety Administration has determined that the driver of an autonomous car is its computer, will insurance need to be carried by the car manufacturer or by the software developer? (Volvo has already said it will cover the cost of accidents in its autonomous vehicles if the system has been used correctly.) Will members of car-sharing services have to waive their right to sue if a fleet car gets in an accident? And how will blame be assessed? Was the accident the fault of software that didn’t accurately read the road, or the municipality that didn’t maintain the road? Tort law is likely to be as challenged by the advent of self-driving cars as the automobile industry itself.

Then there are the ethical considerations. Machines can learn, but they can’t process information without instructions, and as a consequence autonomous vehicles will have to be programmed in advance to respond to various life-and-death scenarios. Human drivers make such calculations all the time, but humans are idiosyncratic and unsystematic, two things computers are not, which is why robotic cars are safer than cars driven by humans.

But there are ethical precepts embedded in artificial intelligence. Cars will be programmed to execute a predetermined calculus that puts a value on the living creatures that come into their path. Will it be utilitarian, and steer the car in the direction that will kill or injure the fewest pedestrians, or will there be other rules: when possible, spare children, for instance? Someone will have to write the code to set these limits, and it is not clear yet who will tell the coders what to write. Will we have a referendum, or will these decisions be made by engineers or corporate executives? If we needed a reminder that autonomous vehicles are actually robots, this may be it.

At the moment, it is easy not to notice. Cars with semiautonomous features look like other cars on the road. Most likely, no one passing the Audi Q5 that drove itself (for the most part) from San Francisco to New York in the spring of 2015 knew it was being piloted by GPS and an array of sensors, cameras, and algorithms. This will change when steering wheels and brake pedals are museum pieces, when cars are made of carbon fiber that has been punched out by 3D printers, and when they display the names of tech companies like video card maker NVIDIA and optical sensor pioneer Mobileye, rather than GM and Chrysler, on their grilles (if they have grilles).

The major car makers, rushing to make alliances with tech companies, understand their days of dominance are numbered. “We are rapidly becoming both an auto company and a mobility company,” Bill Ford, the chairman of Ford Motor Company, told an audience in Kansas City in February. He knows that if the fleet model prevails, Ford and other car manufacturers will be selling many fewer cars. More crucially, the winners in this new system will be the ones with the best software, and the best software will come from the most robust data, and the companies with the most robust data are the tech companies that have been hoovering it up for years: Google most of all.

“The mobility revolution is going to affect all of us personally and many of us professionally,” Ford said that day in Kansas City. He might have been thinking about car salespeople, whose jobs are likely to become obsolete, but before that happens, taxi drivers and truckers will be displaced by vehicles that drive themselves. Historically these have been the jobs that have provided incomes to recently arrived immigrants and to people without college degrees. Without them, yet another route into the middle class will be eliminated.

What of Uber drivers themselves? These are the poster people for the gig economy, “entrepreneurs” (which is to say freelancers) who use their own cars to ferry people around. “Obviously the self-driving car thing is freaking people out a little bit,” an Uber driver in Pittsburgh named Ryan told a website called TechRepublic. And, he went on, he learned about Uber’s plans from the media, not from the company. “If it’s a negative thing, they let you find out for yourself.” As media critic Douglas Rushkoff has written, “Uber’s drivers are the R&D for Uber’s driverless future. They are spending their labor and capital investments (cars) on their own future unemployment.”

All economies have winners and losers. It does not take a sophisticated algorithm to figure out that the winners in the decades ahead are going to be those who own the robots, for they will have vanquished labor with their capital. In the case of autonomous vehicles, a few companies are now poised to control a necessary public good, the transportation of people to and from work, school, shopping, recreation, and other vital activities. This salient fact is often lost in the almost unanimously positive reception of the coming “mobility revolution,” as Bill Ford calls it.

Also lost is this: the most optimistic estimates of the safety and environmental benefits of the transition to fleet-owned autonomous vehicles, and the ones often used to tout it, are based on models derived from cities with high-capacity public transit systems. Obviously there are many cities without such systems. Where they exist, according to the Boston Consulting Group, it would take only two passengers sharing an autonomous taxi to make the per-passenger cost comparable to that of mass transit. Perhaps not surprisingly, lawmakers in this country are now using the autonomous vehicle future laid out by companies like Uber and Google to block investment in mass transit.

But why take a train or a bus to a central location on a fixed schedule when you can take a car, at will, to the exact place you want to go? One can imagine Google offering rides for free as long as passengers are willing to “share” the details of where they are going, what they are buying, who they are with, and which products their eyes are drawn to on the ubiquitous (but targeted!) ads that are playing in the car’s cabin. As with websites that won’t load if you block ads or disallow cookies, or with Gmail, which does not allow users to opt out of having their mail read by the company’s automated scanners, one can also imagine feeling as if one had no choice: give up your data or take a hike.

Lipson and Kurman worry that the software driving driverless cars, like all software, will be ripe for hacking and sabotage. While this is not a concern to be taken lightly, especially after a pair of hacker/researchers were able to take remote control of a Jeep Cherokee speeding down the highway last year, this is an engineering problem, with an engineering solution. (To be clear: the hack was an experiment.) More worrisome is the authors’ suggestion that autonomous vehicles, with their high-definition cameras and sensors, could “morph into ubiquitous robotic spies,” sending information to intelligence agencies and law enforcement departments, among others, about people inside and outside the car, tracking anyone, anywhere. Welcome to utopia, where cars are autonomous, but their passengers not so much.

This Issue

November 24, 2016
