Not far from the hospital in Edinburgh where I work there’s a graveyard; it can be a calm, if morbid, place to reflect after a tough shift. Passing it acts as a memento mori on days when I need to be reminded of the value of medical practice—which for all its modern complexity remains the art of postponing death. Benches are set out in the shade of trees, between red-shingle walkways and rows of Victorian tombstones. Many of the stones commemorate dead children, but there’s a memorial near the entrance that always stops me short. It’s dedicated to Mary West, a woman who died in 1865, at the age of thirty-two—two years before Joseph Lister published his groundbreaking work on antisepsis. The reason for her death is unrecorded. Beneath her own name are listed the names of her six children in their order of death. Five died young, at ages two, eleven, four, twelve, and fourteen; only one lived to adulthood.

The death of any child is a tragedy, but to lose so many is now almost unthinkable. In the Victorian period, when infectious diseases were rife, it was routine. I trained in medicine through the 1990s and never saw a case of measles, one of the most virulent; my tutors told me to learn about it from textbooks. Yet working in the emergency room recently I saw a girl with a rash, fever, conjunctivitis, swollen lymph glands—all classic symptoms of the measles virus. “Do you know if she has had her MMR [measles, mumps, and rubella] vaccine?” I asked her father. He nodded, but something made me doubt his sincerity.

“Are you sure?” I asked again.

He nodded, then broke my gaze. “Maybe she skipped that one,” he said at last.

One in twenty children with measles develops pneumonia. Only about one in a thousand develops the most serious complication, encephalitis (a viral infection of brain cells). About two in a thousand will die. Having to second-guess parents about whether a patient has been vaccinated is new: physicians are accustomed to trusting the parents of the children they see—after all, we both want what’s best for the child. But when fears about vaccine safety cause a drop in vaccination rates, cases of serious infectious disease start rising. Parents who decline to vaccinate their children sense a growing opprobrium toward their choices. They have a consequent incentive to lie or, perhaps worse, stay away from the emergency room for fear of having their parenting challenged by medical professionals.

In 2014 Eula Biss examined this crisis of confidence in her book On Immunity: An Inoculation and proposed that we think of infection control as an ecology to be kept in balance rather than a war between opposing sides.1 Writing with the perspective of a new mother who ultimately chose vaccination for her own child, Biss explored the metaphors we use to think about disease and the body. The word “inoculate” has its origin in the care of gardens and orchards, and was originally used to describe the grafting of a bud onto a tree. It’s unfortunate that vaccination has come to be seen as an unnatural and dangerous intervention, when in truth it’s through “grafting” that the natural power of the recipient’s own immune system is harnessed. The testimony of our graveyards is that before public health, clean water, antisepsis, and vaccination, it was perfectly natural that most children died.

Several recent books by doctors, scientists, and journalists have delved further into the history and science of vaccines and immunity, and the anxieties that accompany them. In Calling the Shots: Why Parents Reject Vaccines, Jennifer Reich, a sociologist who has also written about child protective services, brings meticulousness and sensitivity to this emotional issue. One parent tells Reich that she lies about having vaccinated her children to avoid disapproval from pro-vaccine neighbors: “I think it’s to the point where we need to keep quiet about our health choices if we are not within a like-minded community.” Another sees modern intensive care as a more natural intervention than vaccination, and is reassured by the safety net it provides for some illnesses. Explaining why she has chosen tetanus, but not whooping cough, vaccination for her children, she tells Reich: “Tetanus is the only one that they haven’t made modern medical advances to treat. There’s really not a good treatment for it, whereas, like, whooping cough, they can intubate you now.”

Disapproval of decisions to reject vaccines comes not just from physicians or neighbors but also from fellow parents at the school gate: 80 percent of parents in a 2014 study believed that all preschool children should stay up to date with vaccinations, while 74 percent would “consider removing their children from a care facility in which 25 percent or more children were unvaccinated.” They have grounds: Reich tells the story of Dr. Bob Sears of California, notorious for advocating unorthodox schedules of partial vaccination. In 2008 an unvaccinated boy who had picked up measles in Switzerland visited his office. The CDC later confirmed that four children, three of whom were infants below the usual vaccination age, caught measles in Sears’s waiting room. One required hospitalization, while another took a flight to Hawaii, putting all the passengers at risk. (Sears released a statement in which he explicitly confirmed his belief that vaccination can remain optional as long as enough of the wider population continue to get their shots.) Reich hears from Kira Watson, a pediatrician in Colorado, that she regularly meets parents who won’t register with her until they have checked her clinic’s vaccination rate, satisfying themselves that her waiting room is a safe place to take their kids.


Distrust of vaccination in the West goes back to its beginnings in the eighteenth century, with the English physician Edward Jenner, but Reich lays out the origins of its latest manifestation, including Reagan-era policy decisions intended to shore up public health (the National Childhood Vaccine Injury Act of 1986), distrust of corporate America, a broadening fear of the ubiquity of environmental toxins, and a declining sense of community responsibility. Some of the other factors she mentions are ones I recognize from my own clinics and consultations in Scotland: the steadily widening disparity between the age of first-time mothers at the top and the bottom of society (mothers who have chosen to delay childbearing generally have higher levels of education and are more likely to reject vaccination, considering themselves “experts on their own children”), overconfidence in the power of children to fight off infectious disease thanks to the phenomenal success of vaccination, and the rise among elite groups of “healthism”—the belief that healthy eating and exercise can protect against infectious diseases that, through virtually the whole of human history, have imperiled our lives.

The word “vaccine” means “from cows”; it was first used to describe Jenner’s method of preventing smallpox (Latin: variola) in humans by scratching a small amount of fluid from cowpox scabs into a nick in the skin. Smallpox had been killing and mutilating people since it first made the jump to us from rodents between 48,000 and 16,000 years ago; Pharaoh Ramses V is thought to have suffered from it in the twelfth century BCE, and traces have been unearthed from the Indus Valley Civilization. In the sixteenth century Europeans carried it to the Americas, and its lethality is part of the reason that only between 5 and 10 percent of Native Americans are estimated to have survived that encounter.

In China, from about 1000 CE, smallpox scabs were powdered and blown into the nose, a method of inoculation that risked full-blown smallpox. The Ottomans practiced a modified technique, called “variolation” in the West, in which material from a smallpox scab was introduced under the skin. In 1715 Lady Mary Wortley Montagu, whose husband would soon become British ambassador to Ottoman Turkey, was scarred by smallpox. Fearing for the health of her son, she had him successfully variolated and asked the British Embassy surgeon Charles Maitland to observe the procedure. Maitland communicated the technique to the British medical establishment. Across the Atlantic in Puritan Boston, the clergyman Cotton Mather (the same Mather involved in the Salem witch trials) learned of variolation from his slave Onesimus, who had been variolated as a boy among his people. In the early 1720s, threatened with recurrent epidemics of smallpox, Mather arranged with local physicians to variolate 280 Bostonians, of whom only six died of the disease. That was a significant improvement on the usual fatality rate of one in three.

In England, folk wisdom had noted that milkmaids rarely suffered from smallpox and were able to nurse victims without fear. Prior contact with the virus’s bovine variant, cowpox, appeared to grant protection. During a smallpox epidemic in the late eighteenth century, a Dorset farmer called Benjamin Jesty had the idea of variolating his family with cowpox rather than smallpox (he had had cowpox himself, and it is milder and less contagious in humans). The anti-vax movement is as old as the techniques it challenges: Mather, seen by some to be contravening divine or natural law, had a small bomb thrown through his window with a note inscribed, “COTTON MATHER, you Dog, Dam You: I’ll inoculate you with this, with a Pox to you.” Jesty’s cross-species intervention raised a new anxiety: onlookers feared that recipients risked “metamorphosis into horned beasts.”

Edward Jenner didn’t invent vaccination; he merely popularized Jesty’s technique, with the slight modification of taking cowpox samples from afflicted humans rather than from cattle. Because of his London connections to the College of Physicians and to royalty, he received huge grants (£30,000 over ten years) to disseminate it. The French, Spanish, and American governments all began to vaccinate. Jefferson had his slaves vaccinated (before taking the risk of vaccinating his family), while Jenner was decorated by Napoleon, who, after having half the French army vaccinated, called him “a great benefactor of mankind.”


Smallpox cases dwindled over the next century and a half. The last case of smallpox caught in its natural state rather than from a laboratory sample was that of a Somali hospital cook named Ali Maow Maalin, who provoked a nationwide manhunt when he went on the run with the disease in 1977. Smallpox vaccination was obligatory for all hospital workers, but Maalin had deliberately avoided it. “I was scared of being vaccinated then,” he told The Boston Globe in 2006. “It looked like the shot hurt.” Maalin caught the less virulent form of the disease, variola minor, and thanks to the prompt quarantine and vaccination of all his colleagues, neighbors, and associates (a total of 54,777 people), an outbreak was prevented. With Maalin’s recovery came remorse: he went from being a vaccine-refuser to a campaigner passionately committed to vaccination, and he joined the World Health Organization’s program to eradicate polio. “When I meet parents who refuse to give their children the polio vaccine,” he said, “I tell them my story. I tell them how important these vaccines are. I tell them not to do something foolish like me.”2

The story of smallpox—its malignity and humanity’s triumph over it—is told in Michael Kinch’s Between Hope and Fear: A History of Vaccines and Human Immunity. Kinch, an immunologist and biosciences entrepreneur, is profoundly alarmed by our collective amnesia, the “growing forgetfulness of the agonizing and terrifying ailments that have threatened man since time immemorial.” As a scientist he doesn’t hold back in discussing how offensive he finds the “advocacy of false facts by an obnoxious and loud minority [that] has overwhelmed the fact-based attempts by credible sources to expound the extraordinary health benefits of vaccination.”

In breezy and enthusiastic prose, Kinch moves from smallpox to the marvel of the human immune system, “arrays of cells tasked with detecting and eliminating harmful microorganisms within a wet, warm environment ideally suited to most germs.” The average human being is composed of about thirty trillion cells, but may carry as many as forty trillion bacterial cells on the skin and in the digestive and respiratory tracts—our “microbiome.” These microorganisms that naturally colonize our bodies, beginning moments after our birth, are known as “commensals” and help to keep out dangerous bacteria.

The modern vision of the body as host to myriad microorganisms has its origins among European scientists of the nineteenth century; Kinch’s book is a roll-call of the names still spoken of reverentially in every medical school: Koch, Virchow, Semmelweis, Pasteur. The scientific understanding of disease pioneered by these men (the systematic exclusion of women from much of scientific endeavor throughout the nineteenth century means there are few of them in Kinch’s story) has saved hundreds of millions of lives, and Kinch recounts some of the more memorable biographies.

Semmelweis ended his life in an asylum, his great insight into the importance of hand-washing before examining each patient accepted only after his death. Virchow, by contrast, was honored by the Prussian, Swedish, and British scientific establishments. He entered politics: an apocryphal story relates that in 1865 Otto von Bismarck, outraged by Virchow’s liberalism, challenged him to a duel. Virchow agreed on condition that he choose the weapons: a fresh sausage for himself, but a sausage loaded with roundworm larvae for Bismarck. “Germans and their diaspora have continued to relish sausages despite occasional outbreaks of botulism,” Kinch adds, dryly; “Arguably, the worst wurst poisoning occurred in 1793…”

Fifty years after Virchow’s supposed challenge to Bismarck, the great Canadian physician William Osler responded to the anti-vaccinationists of his time in a similar spirit:

I would like to issue a Mount Carmel-like challenge to any ten unvaccinated priests of Baal. I will take ten selected vaccinated persons, and help in the next severe epidemic, with ten selected unvaccinated persons (if available!). I should choose three members of Parliament, three anti-vaccination doctors, if they could be found, and four anti-vaccination propagandists. And I will make this promise—neither to jeer nor to jibe when they catch the disease, but to look after them as brothers; and for the four or five who are certain to die I will try to arrange the funerals with all the pomp and ceremony of an anti-vaccination demonstration.

For Kinch, human understanding of disease proceeds by gritty perseverance, flashes of insight, and a great deal of luck. Many are familiar with the discovery of penicillin, when Alexander Fleming inadvertently found his bacterial samples killed by the fungus Penicillium; similar stories are scattered throughout medical history. Kinch charts the steps through which humanity has come to understand and then defeat (through vaccination) diphtheria, cholera, rabies, and pertussis, or whooping cough, diseases whose vaccines I administer regularly at my own clinic. I’m lucky enough never to have seen the first three diseases in the clinic, but pertussis, sadly, is now making a comeback as a consequence of dropping vaccination rates.

In Japan in 1947, 20,000 children died from pertussis; by 1972, thanks to vaccination, the figure was zero. Then in the winter of 1974–1975 there were two high-profile deaths following administration of the vaccine, and vaccination rates plummeted; by the end of the 1970s the disease had resurged, killing more than forty people a year in Japan. Similar reports of adverse reactions meant that by the early 1980s, suing vaccine manufacturers had become big business in the United States, and company after company gave up production. In 1986 President Reagan signed the National Childhood Vaccine Injury Act to protect companies against litigation, and simultaneously sowed seeds of distrust among parents that the government and Big Pharma had something to hide. That distrust paved the way for the MMR debacle that a 2011 paper in the Annals of Pharmacotherapy called “perhaps…the most damaging medical hoax of the last 100 years”: in 1998 the now discredited English physician Andrew Wakefield published a paper in The Lancet describing a carefully selected series of twelve children and associating MMR vaccination with autism. The Lancet eventually retracted the paper, but the damage was done.

Kinch estimates that measles has caused 200 million deaths over the last 150 years. I can’t help wondering if some of those children on Mary West’s headstone died of it. Vaccination with MMR results in a 99.99 percent reduction in cases and a 100 percent reduction in fatalities. In Ireland alone (with a population of 4.76 million), the drop in MMR vaccination between the publication of Wakefield’s paper and the year 2000 was estimated to have brought about more than a hundred hospitalizations and three deaths.

It’s now known that Richard Barr, an English attorney, put Wakefield in contact with the twelve children in his Lancet study. None of the children was from the London area where Wakefield worked; indeed, one was flown in from the United States. Wakefield claimed “no conflict of interest,” but he had received more than £50,000 for his expert work on a lawsuit that Barr was putting together, and had filed patents for alternative vaccines. “It seems that Wakefield was not opposed to vaccines,” Kinch observes, “but rather was simply opposed to those vaccines for which he did not own the intellectual property.”3

Unvaccinated children may carry disease without symptoms but still spread it among more vulnerable people in their communities. Vaccination may reduce your child’s risk of a disease by 99 percent or more, but you’ll never know if they, personally, benefitted from it. Adverse reactions to vaccinations are rare, but it takes only a few children to suffer them for public trust to evaporate. The 1952 US polio epidemic infected 58,000 Americans, paralyzing 21,000; Kinch vividly recalls the iron lungs then in widespread use. Polio vaccines were hurriedly generated. In 1954 Cutter Laboratories in California manufactured one that contained an inadequately inactivated virus. More than 100,000 doses are thought to have been produced; 192 people were paralyzed after receiving Cutter’s vaccine, and ten died. The following year fear of vaccination soared, and polio resurged with 28,000 cases.

Some patients tell me they distrust vaccinations because they’ve seen the truth on TV. Documentaries such as WRC-TV’s DPT: Vaccine Roulette (1982) blatantly distorted the facts, frightening viewers with misinformation in hopes of a spike in ratings. Reagan’s intervention fanned the flames of conspiracy theories, and Wakefield’s MMR study led many to distrust science, as well as the vetting processes of scientific publications. In her book The Vaccine Race: Science, Politics, and the Human Costs of Defeating Disease, medical reporter Meredith Wadman explores another motivator for the anti-vaccination movement: the methods by which vaccines are produced.

Viruses do not grow outside living cells; to produce vaccines in bulk you need billions upon billions of cells in which to grow them. Late in the book, Wadman visits a factory for rubella vaccine and describes the modern process:

Behind a series of gowning rooms and air locks that ensure that outside air never makes its way in, hooded technicians in white jumpsuits and steel-toed shoes with green shoelaces that mark them for sterile rooms were overseeing scores of cylindrical half-gallon plastic bottles that were rotating slowly, their sides made hazy by the WI-38 cells growing on them, the medium inside them awash with rubella virus.

The Vaccine Race is the story not just of vaccine development but of these WI-38 cells in particular, and it’s also a biography of the scientist who developed them, Leonard Hayflick. Along the way it shows how medical ethics have improved since the 1950s, and how medical science has been progressively commercialized.

During the early days of vaccine development, kidney cells from monkeys were used to harvest viruses, because they reproduce readily and can, under the right conditions, yield ten times as much virus as comparable human cells. But viruses behave differently inside monkey cells than in human cells, and their use risks transferring monkey viruses to humans.

Hayflick’s WI-38 cells, on the other hand, were human in origin, free of viral contaminants, and proved ideal for growing many different kinds of human virus. They reproduce abundantly, and their growth medium can be adjusted to weaken some viruses or improve the yields of others. What was and remains controversial is that every one of the trillions of WI-38 cells that have been used worldwide since the early 1960s is derived from a few grams of lung tissue taken from the body of a single aborted fetus in Sweden. “WI” refers to the Wistar Institute in Pennsylvania where Hayflick worked, and “38” refers to the fetus and the organ (in this case lung) in his experimental series. Until Hayflick demonstrated the safety and versatility of WI-38 cells, the only human cell line in widespread laboratory use was HeLa, derived from the cancerous cervix of an American woman, Henrietta Lacks.

In the early 1960s abortion was illegal in every US state; in Pennsylvania it wasn’t permissible even in cases in which a pregnant woman’s life was at stake. Hayflick worked with Sven Gard, a Swedish virologist whose contacts at the Karolinska Institute in Stockholm enabled fetal tissue to be imported into the United States for lab use. Abortion was partially legalized in Sweden in 1938, and the comprehensive medical records of its welfare state gave Hayflick access to detailed information about the health and genetics of each fetus.

In 1972 a pamphlet titled “Women, the Bible, and Abortion,” published by Dr. Forest Stevenson Jr., linked termination of pregnancy to communism and Hitler, and slanderously suggested that Hayflick’s research involved slaughtering babies after injecting them with viruses. Hayflick threatened to sue and Stevenson retracted the statement, but the lie had begun to spread. In 2003 the Vatican was asked by an anti-vaccine lobby group to reflect on the morality of vaccinating with WI-38 cells; two years later, the Pontifical Academy for Life ruled that their use was lawful as long as there were no alternatives available, “in order to avoid a serious risk not only for one’s own children but also…for the health conditions of the population as a whole—especially for pregnant women.” The pharmaceutical company Merck initially suggested that it would continue production of less effective, vastly more expensive single vaccines for use by those with moral objections to WI-38, but later reneged. In 2015, following an outbreak of measles at Disneyland, the lobby group that had petitioned the Vatican issued a press release titled “Blame Merck—Not the Parents!”

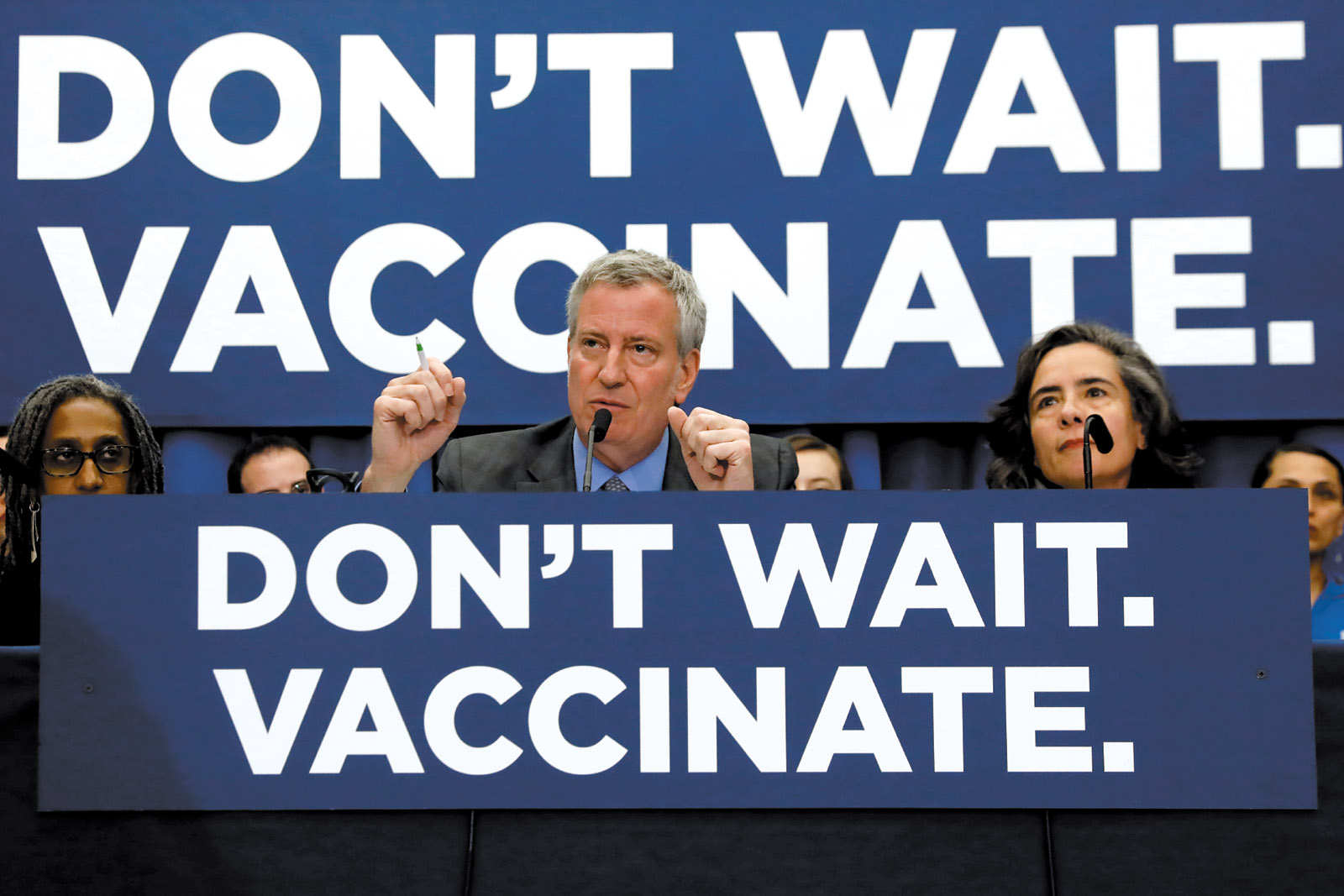

Religious objections to vaccination extend beyond those of Roman Catholics. In a recent misinformation scandal, pamphlets inaccurately claiming that vaccines are not kosher and contain “monkey, rat and pig DNA as well as cow-serum blood” were targeted at ultra-Orthodox Jewish communities, fueling measles outbreaks in New York City and Rockland County. In April, Mayor Bill de Blasio declared a public health emergency and ordered unvaccinated people in parts of Williamsburg, Brooklyn, to receive the measles vaccine or face fines.

The Vaccine Race is exhaustive in its account of Hayflick’s professional life and his many conflicts with government authorities (the US Division of Biologic Standards remained stubbornly wedded to the use of animal cells long after the superiority of Hayflick’s human-derived WI-38 cells had been demonstrated). Wadman makes a glancing acknowledgment that another fetal human cell line, MRC-5, was also fundamental to the development of many of our most commonly used vaccines, but she limits the scope of her book to Hayflick’s work. Controversially, and most unusually for his time, Hayflick commercialized his laboratory techniques, even founding a company to profit from selling the WI-38 cells that had been donated for the purposes of research. The Vaccine Race also sheds light on the systematic medical experimentation, without consent, that was routinely conducted on orphans, prisoners, people of color, and children with learning difficulties through the 1950s and 1960s—a practice that slowed only after the publication in 1966 of Henry Beecher’s damning “Ethics and Clinical Research” in the New England Journal of Medicine.

Anyone suspicious about the manner in which vaccines are produced is unlikely to be reassured by Wadman’s account. Kinch’s Between Hope and Fear aims for a much broader readership, but he too may struggle to win over vaccine-skeptics: though he claims scientific impartiality, as a biosciences entrepreneur whose research has been partly funded by, and conducted in association with, Big Pharma, he is vulnerable to accusations of bias. But he’s confident the facts will speak for themselves:

Modern-day vaccine deniers no longer have the convenience of claiming ignorance…. The facts show that vaccines save the lives of countless millions worldwide each year. Denial of this fact exceeds the boundaries of common sense and would entail malpractice if a decision not to vaccinate were made by a physician. However, it is not. Such decisions to avoid vaccines are made by parents—well-intentioned, perhaps, but exceedingly selfish.

It is Reich’s book that may prove the most convincing to anti-vaxxers. She takes a gentler approach than Kinch, pointing out as a sociologist that it’s generally counterproductive to portray vaccine-refusing parents as “foolish or ignorant at best, and sometimes even delusional or selfish.” She had all her own children vaccinated, and her reason for doing so is admirably succinct: “I trust that vaccines are mostly safe and I accept that we can each absorb minimal risk to protect those in our community who are most vulnerable.” Vaccines, she proposes, should be promoted less as medical interventions than as public health measures, comparable to safe drinking water, food inspection legislation, fire services, or air quality monitoring, “all of which would be difficult for an individual to accomplish alone on his or her own behalf.” She arranges her conclusions under headings that could stand as recommendations: “Stop Marketing Vaccines as Only for Individual Benefit”; “Address Profit Incentives”; “Earn Trust in Regulators”; and crucially, “Eradicate the Culture of Mother Blaming.” She asks that we recognize more broadly how measures for the public good often require the individual to give up some personal freedom to benefit the community, as Eula Biss does in On Immunity. Like Biss, Reich recognizes that vaccination is a social act as much as a personal one, and she has reassured herself that the materials used in vaccines are often more “natural” in origin than the drugs used to treat infectious disease.

In discussions with parents in my own clinic, I try to emphasize vaccination’s well-documented safety, and to portray vaccines as tutors that teach each child’s body how to respond to dangerous infections. It’s in nearly all ways a more sensible means of controlling infection than dosing a patient with antibiotics after an infection has occurred; after all, it relies on the child’s immune system to engage and actively respond. Every child who plays in the dirt is self-inoculating against myriad organisms—to graze a knee is to introduce immeasurably more foreign material into the body than any vaccine does.

A century or so ago, childhood was a perilous phase of life. After the MMR misinformation scandal, it looked as if it was becoming so again. In 2003–2004 vaccination for MMR in some parts of the UK dropped beneath 80 percent, far below the threshold for herd immunity: the proportion of any population that must be vaccinated in order for those who haven’t been vaccinated to remain protected. The WHO target is 95 percent. Uptake rates recovered to a peak of 92.7 percent in 2013–2014, but last year’s figures showed the fourth consecutive year of declining MMR vaccination in England (in Scotland, by contrast, uptake has remained around 95 percent for the last ten years). In the US, immunization rates for 2017, the most recent figures available, show an enormous regional variation, from 85.8 percent in Missouri to 98.3 percent in Massachusetts, but the general trend, for MMR at least, is positive.

Recent measles outbreaks have prompted the legislatures of several states, including Maine and New York, to introduce public health bills that limit parents’ ability to opt out of vaccination (Washington state passed one such measure in April). But this year has also seen a record number of bills proposed to expand exemptions. Reich’s conclusion that “infectious disease cannot remain a private concern” could stand for all three of these books; for everyone’s health and safety, let’s hope that message keeps getting through.

1. Reviewed in these pages by Jerome Groopman, March 5, 2015.

2. In 1978 a British medical photographer, Janet Parker, became the last person to die of smallpox. She contracted it through the air ducts of a microbiology lab with inadequate infection control, which was situated below her darkroom. The man responsible for the lab, Henry Bedson, committed suicide while at home under quarantine.

3. Wakefield was struck off the medical register in the UK in 2010 but has continued his campaign against vaccination in the US. He was a guest at one of Trump’s inaugural balls. See Sarah Boseley, “How Disgraced Anti-Vaxxer Andrew Wakefield Was Embraced by Trump’s America,” The Guardian, July 18, 2018.