Google plays such a large part in so many of our “digital lives” that it can be startling to learn how much of the company’s revenue comes from a single source. Almost everyone online relies on one Google service or another; personally, I make semiregular use of Google Search, Gmail, Google Chat, Google Voice, Google Maps, Google Documents, Google Calendar, Google Buzz, Google Earth, Google Chrome, Google Reader, Google News, YouTube, Blogspot, Google Profiles, Google Alerts, Google Translate, Google Book Search, Google Groups, Google Analytics, and Google 411.

Yet few of these services support themselves (YouTube alone has lost hundreds of millions of dollars per year). In 2008 the advertising on Google’s search engine was responsible for 98 percent of the company’s $22 billion in revenue, and while Google refuses to provide more recent percentages, the company’s 2009 revenue of $23.6 billion suggests that little has changed.

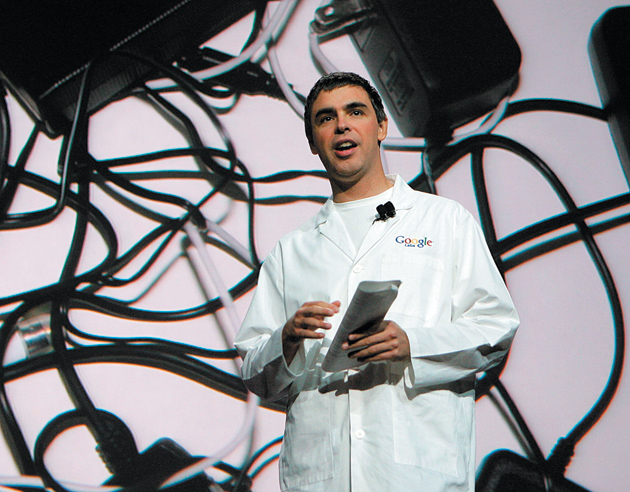

The reason Google’s search engine remains its single largest source of revenue—and why that revenue exceeds that of any other website—can most easily be understood by studying the company’s history. In 1998, when the Stanford graduate students Sergey Brin and Larry Page launched Google, the existing search engines were so inadequate that only one was capable of finding itself when queried with its own name; a search for “cars” on Lycos, one of the better search engines, returned more pornography sites than sites about cars.

Google’s vast improvement on other search engines is usually attributed to a new algorithm, called PageRank, that made use of the links between sites to more accurately determine relevancy. In contrast to other search engines, which ranked results according to the number of times a searched-for word was used, Google ranked its results based on the number of links a site received, a method that revealed the “wisdom of crowds.” The pages to which many people link were, by Google’s model, listed higher than pages that were less popular, and if a particularly popular site linked to a page, that link would be given greater weight in determining relevancy. But Google’s success was not due primarily to its technical ingenuity; other search engines, including Lycos, used a similar ranking technology.
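For readers curious how counting links can become a ranking, here is a minimal sketch in Python of the link-weighting idea just described: a simplified textbook version of PageRank, computed by repeatedly passing scores along links (power iteration). It is not Google’s production system; the damping factor of 0.85, the iteration count, and the toy link graph are assumptions chosen only for illustration.

```python
def pagerank(links, damping=0.85, iterations=50):
    """Simplified PageRank by power iteration (illustrative, not Google's code).

    links maps each page to the list of pages it links to. Each page passes
    its current score, divided evenly, to the pages it links to, so a link
    from a highly ranked page counts for more than a link from an obscure one.
    """
    pages = set(links)
    for targets in links.values():
        pages.update(targets)
    n = len(pages)
    rank = {p: 1.0 / n for p in pages}  # start with equal scores
    for _ in range(iterations):
        # Every page receives a small baseline share (the "random jump").
        new_rank = {p: (1.0 - damping) / n for p in pages}
        for page in pages:
            targets = links.get(page, [])
            if targets:
                share = damping * rank[page] / len(targets)
                for t in targets:
                    new_rank[t] += share
            else:
                # A page with no outgoing links spreads its score over all pages.
                for t in pages:
                    new_rank[t] += damping * rank[page] / n
        rank = new_rank
    return rank


# Hypothetical toy web: three pages link to "cars-guide", one also links to "spam".
web = {
    "a": ["cars-guide"],
    "b": ["cars-guide"],
    "c": ["cars-guide", "spam"],
    "cars-guide": ["a"],
}
scores = pagerank(web)
for page, score in sorted(scores.items(), key=lambda kv: -kv[1]):
    print(f"{page}: {score:.3f}")
```

In this sketch a page’s score depends both on how many pages link to it and on how highly those linking pages rank themselves; page "a" outranks "b" only because the popular "cars-guide" links to it.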

Google’s advantage over Lycos only became apparent when Page and Brin, still intent on pursuing their Ph.D.s, tried to sell their technology to another search engine, Yahoo. As Ken Auletta writes in Googled:

[The Yahoo founders] were impressed with [Google’s] search engine. Very impressed, actually; their concern was that it was too good…. The more relevant the results of a search were, the fewer [pages] users would experience before leaving Yahoo. Instead of ten pages, they might see just a couple, and that would deflate the number of page views Yahoo sold advertisers.

In the early years of the Web, a search engine was considered only one of many attractions on sites like Yahoo and Lycos, which were attempting to become, in the language of the time, a “portal,” or a site that served as an entry point to the Web and provided links to various kinds of content. Most of this content was organized and displayed on different Web pages that were part of the site, thus leading users to remain on the site and look at page after page. As with newspapers, an advertiser might pay a premium to guarantee that an ad would appear on a site’s front or “home” page. But on the Web, aside from the home page, no equivalent of a newspaper’s “A” section exists; websites, unlike print newspapers, do not proceed in a linear fashion.

Hence the emphasis on “page views.” Instead of promising an ad in a newspaper’s “A” section, sites like Yahoo and Lycos sold advertising based on how many times each page on their site was viewed (a statistic easily tracked online). This “page view” approach has since been adopted by newspapers themselves in their online versions: the ads that readers see (for example, ads for Moviefone and the Red Cross in a recent New York Times story about Haiti’s upcoming election) are most often sold at rates based on the number of times they are viewed, rather than on where they appear on a given site.

Page and Brin decided to continue improving Google’s search algorithms, while disdaining the efforts of Yahoo, Lycos, and other portals to maximize the number of pages—and hence ads—that visitors might see. More than any innovation, this decision allowed Google to become the best search engine available. But it also left Google with almost no source of revenue, since users did not see many different pages and the site consequently could not compete in selling ads based on page views.


It wasn’t until Google grew desperate for funding during the dot-com bust of the early 2000s that Page, Brin, and their colleagues began to see that the technical advantage they had gained over other search engines might translate into an economic advantage as well. Aside from page views, one of the few easily measured statistics on the early Web was “click-throughs”: the number of times visitors to a site found a displayed ad enticing enough to click on it and were taken to the advertiser’s own website, where the product or service in question might be purchased or used. Most websites, including those of other search engines, found that they could earn more by charging small amounts each time an ad was seen (page views) than by charging a larger amount for the far less frequent instances when a visitor clicked on an ad (click-throughs) and then visited the advertiser’s own website.

Google’s executives realized that ads on search engines reach users at a singularly receptive time: unlike readers browsing through articles on a news website, users of search engines are often looking for something very specific. A user who asks a search engine, for instance, “Where can I find the best car insurance?” would be a more promising potential customer than a visitor to a news website, because by searching for car insurance a user signals that he or she is, at that moment, in the market for car insurance. A car insurance ad programmed to appear next to the results of such a search would allow the advertiser to target its most desirable audience.

This approach, a form of what’s known as a “cost-per-click” advertising system, charges advertisers for each time a user clicks on an ad that is displayed next to related search engine results. To implement it, Google developed a program to link specific ads to millions of different search terms that prospective customers might use (from “car insurance” to “French horn” to “cat grooming in New York”), as well as a program to ensure that the ads sold through this system would be priced fairly (the program uses a simple bidding system, vetted by economists).
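The essay does not spell out how the bidding works, so the following is only a hedged Python sketch of one widely discussed form of cost-per-click auction, a generalized second-price auction. Its assumptions are labeled: ads are ranked by each advertiser’s maximum bid multiplied by an estimated click-through rate, each winning advertiser pays per click just enough to stay ahead of the ad ranked below it, and the advertiser names, bids, rates, one-cent increment, and reserve price are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Bid:
    advertiser: str  # hypothetical advertiser name
    max_cpc: float   # the most the advertiser will pay per click, in dollars
    est_ctr: float   # assumed estimate of the share of viewers who click (must be > 0)


def run_auction(bids, slots, increment=0.01, reserve=0.01):
    """Simplified generalized second-price auction for one search query.

    Ads are ranked by max_cpc * est_ctr (expected payment per display).
    Each winning ad pays, per click, the smallest amount that would still
    keep it ranked above the next ad, capped at its own maximum bid.
    Displays that draw no click cost the advertiser nothing.
    """
    ranked = sorted(bids, key=lambda b: b.max_cpc * b.est_ctr, reverse=True)
    results = []
    for i, bid in enumerate(ranked[:slots]):
        if i + 1 < len(ranked):
            below = ranked[i + 1]
            price = below.max_cpc * below.est_ctr / bid.est_ctr + increment
        else:
            price = reserve  # nobody ranked below: pay the (hypothetical) minimum
        results.append((bid.advertiser, round(min(price, bid.max_cpc), 2)))
    return results


# Hypothetical bids on the query "car insurance", with two ad slots.
bids = [
    Bid("insurer_a", max_cpc=2.00, est_ctr=0.05),
    Bid("insurer_b", max_cpc=3.00, est_ctr=0.02),
    Bid("insurer_c", max_cpc=1.00, est_ctr=0.04),
]
for advertiser, price in run_auction(bids, slots=2):
    print(f"{advertiser} pays ${price:.2f} per click")
```

In this toy example the second-placed advertiser pays more per click than the first, because its ad is expected to draw fewer clicks. Under a scheme like this, an ad that users rarely click earns the search engine little, which gives it a reason to show only ads that match the query.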

You can see how this looks today: a user searching for “pet food,” for example, is greeted not only by a ranked list of sites containing information about pet food, but also by three “sponsored” links at the very top of the page, as well as a column of pet food ads in the right margin. These “sponsored” links and ads in the margin are paid for by online pet food stores and related ventures.

Back in the early 2000s, though, another company, Overture, which was far more focused than Google on making money online, had already developed such a system. Google copied much of the system from Overture in 2001; Overture sued Google in 2002; and Overture was itself bought by Yahoo in 2003. Yahoo settled the lawsuit against Google out of court for $275 million in 2004, and the system, modified over time, still provides the vast majority of Google’s billions of dollars in revenues.

Much of this story has been told before. Ken Auletta, like other reporters, emphasizes the luck of Google’s early success; unlike other reporters, he never forgets that luck as the company’s profits begin to pile up. Had Google’s revenues derived from a hundred diverse sources, one could plausibly credit the company, as some business writers do, with a “management breakthrough.” Auletta instead quotes Steve Ballmer, the CEO of Microsoft, who famously called Google “a one-trick pony” in 2007. “They have one product that makes all their money,” Ballmer explained—referring to the sale of search-based ads—“and it hasn’t changed in five years.”

But while Auletta approaches his subject more judiciously than other reporters, he still tends to withhold judgment. A more critical approach to Google comes from Nicholas Carr, the technology-expert-turned-skeptic who recently expanded his widely discussed 2008 article in The Atlantic, “Is Google Making Us Stupid?,” into a full-length book, The Shallows.

Google, Carr suggests, is the new home of Taylorism, the management philosophy of “perfect efficiency” developed by Frederick Winslow Taylor for factory production lines toward the end of the nineteenth century. It is an unexpectedly easy argument to make. Eric Schmidt, the CEO of Google, has proudly said that the company is “founded around the science of measurement,” and Google executive Marissa Mayer has argued that “because you can measure so precisely [online], you can actually find small differences and mathematically learn which one is right.” This “scientific” emphasis leads Carr to conclude that the Internet, partly because of Google’s influence, has been built to process information efficiently, rather than to encourage deep understanding.


Carr laments the hyperlinks that dominate much of what we read online, arguing that instead of adding context, they turn the Internet into an “interruption system, a machine geared for dividing attention.” He cites studies of how links affect the brain, though the results of these experiments remain ambiguous; more persuasive evidence comes from the many bloggers who, partly as a result of Carr’s writing, have begun to admit to an inability to read entire books or even magazine articles.

For Carr, then, the Web, ostensibly built to help us process information, leads to confusion and distraction among the people it purports to serve, because, in companies’ drive to increase the efficiency with which we go through pages (and so the number of ads seen and the total profits made), the Web presents us with more information than we can process, let alone understand.

Carr’s analysis is often suggestive, but he tends to ignore much of how Google approaches the Web. He argues that Google considers information a commodity that should be “mined and processed” and dispensed in highly efficient “snippets” to searchers. But while Google has done everything it can to “mine” more information, the company always tries to make it possible for searchers to view entire texts. Google does, it is true, show only small “snippets” from books when forced by copyright law to limit the text it can provide. But the company has spent millions of dollars in court fighting to allow users to see more of these books.

Carr also pays surprisingly little attention to the specific economic pressures that Auletta describes. He claims that “it’s in Google’s economic interest to make sure we click as often as possible.” But in referring to the quantity of clicks, Carr gives the impression that Google’s approach to advertising is much like the page-view model of its competitors. For his argument to be correct, Google would want users to be clicking indiscriminately, seeing as many ads on as many different pages as possible. In fact, with Google’s search engine advertising, the value of the ads comes less from their quantity (the number of times the ads appear, for which the company receives nothing) than from their quality (the number of times the ads match what users are looking for and attract purposeful clicks, for which Google receives fees).

This is why Google refuses to show ads when none match a user’s query: the company wants to provide advertisers only with clicks of the highest quality, from users who are searching for what is advertised. On other sites, such as YouTube, where Google must rely on the page-view model of advertising, the company does have an incentive to increase the raw number of ads a viewer sees. But with search engine advertising, where the company makes the vast majority of its profits, and where its decisions may have the greatest influence on the development of the rest of the Web, Google’s interest is the opposite of what Carr suggests. The company’s success, both as a provider of information and as a seller of advertising, has depended on finding ways to produce results of such high quality that users need not worry about clicking unnecessarily.

Carr is of course correct about many parts of the Web: the most successful news sites, like The Huffington Post, Politico, and Gawker, while supposedly attempting to satisfy the desire for the latest news, often fill their front pages with spurious brief stories about, say, Hollywood celebrities, meant to produce ever more page views; and the social networks, while claiming to “make the world a more open place,” encourage users to check in every minute partly in order to raise total advertising revenues. (Each time a user logs into MySpace, for example, it counts as a separate page view, thus allowing MySpace to charge advertisers more.) Google, because of its size and its different advertising model, has had other challenges. The most widely discussed may be the technical principle known as “net neutrality.”

The Internet, as originally conceived, was supposed to be noncommercial, and therefore treated all traffic in a “neutral” way, giving the same priority to every piece of data that passed through the network. As the Internet has developed, this principle of neutrality, though never codified in law, has largely been retained: whether Internet content comes from a long-established video rental company like Blockbuster or from a relatively recent start-up like Netflix, the pages, images, and videos that are sent through the network must all be passed along at equal speed.

More recently, companies that provide Internet services, such as AT&T, Verizon, and Comcast, have begun to see that they could make significant profits by providing quicker service to content providers for a fee—adding a “fast lane,” as it were, at the edge of the “information superhighway.” For example, if YouTube wanted to gain an advantage over rival video site Vimeo, it could pay the various Internet service providers to give priority to its traffic, so that videos on YouTube would load faster than those on Vimeo. Tim Wu, a professor at Columbia Law School, suggests that had the Internet not been neutral back in the 1990s, “Barnes and Noble would have destroyed Amazon, [and] Microsoft Search would have beaten out Google.”

For years Google was a staunch supporter of net neutrality, even as net neutrality’s demands began to run counter to its interests. But on August 9, Google together with Verizon announced a “compromise.” Google executives claimed that they would continue to support net neutrality on traditional cable and telephone services, but the compromise they proposed left open several loopholes.

More importantly, the iPhone, the iPad, and the whole gamut of mobile devices that run Google’s own Android operating system have led many users to access the Internet through wireless devices rather than through cable or phone lines. It is not hard to imagine a future in which many users give up their home Internet connections (much as many have abandoned their “land line” telephones) and gain online access exclusively through wireless devices. But Google, in its joint statement with Verizon, dropped its support for net neutrality for wireless devices. Instead of insisting that the rapid expansion of wireless Internet access should make net neutrality all the more essential, Google executives claimed, in corporate-speak, that the “nascent nature of the wireless broadband marketplace” would make the application of the principle of net neutrality premature.

Potentially as important as Google’s acquiescence on net neutrality, however, were the disclosures by The Wall Street Journal on August 10 about the company’s strategies for the use of private information. According to the Journal:

Last September, [Google] launched its new ad exchange, which lets advertisers target individual people—consumers in the market for shoes, for instance—and buy access to them in real time as they surf the Web…. The further step…would be for Google to become a clearinghouse for everyone’s data, too. That idea…is still being considered, people familiar with the talks say. That would put Google—already one of the biggest repositories of consumer data anywhere—at the center of the trade in other people’s data.

Why would Google consider constructing such a “data clearinghouse”? Given a specific query, after all, Google’s search engine already has a very good sense of what users are looking for; and while Google could sell the data it gathers to other sites, the company would lose much of the trust of its users. But the growth of the search engine’s revenue has been slowing, and Page and Brin know that if Google can build a data clearinghouse and become a primary distributor of more precisely targeted ads (showing them not on the search engine but on YouTube, and selling them to other sites), then it could lay claim to another source of revenue.

Google has for years run a sideline business distributing ads to other sites, and the company made a major investment in the field by purchasing the leading ad distributor, DoubleClick, for $3.1 billion in 2007. By making this acquisition, Google took a step toward overcoming the distinction, which had defined the early years of the Web, between ads sold by the page view and ads sold by the click. As Eric Schmidt admits, Google knows “roughly who you are, roughly what you care about, roughly who your friends are.” The combination of DoubleClick’s ad distribution service with the data that Google has gathered on its users allows Google to sell the page view–style ads that appear almost everywhere online, and to target the ads so precisely that they can be sold on a cost-per-click basis.

Many companies, including Facebook, have attempted to create such targeted ads by using the private information they have gathered on their users. The data clearinghouse being considered by Google would make these ads far more precise by bringing together all the private information that companies have gathered on users in one place. As a result, many websites would place less emphasis on maximizing the number of page views, and more on obtaining and using personal information about their users.

These personally targeted ads, whether created by Google or some other company, will be more intrusive than anything we have seen before. Marketers have long known more about individuals than many of us would like, but the use of this information has largely been limited to techniques that could be directed to a single individual with the technology of the past—telemarketing and junk mail. The spread of wireless technology will allow marketers to do much more with this information. As magazines move to digital devices like the iPad and the Kindle, for instance, marketers will be able to personalize the ads that appear based not just on each person’s demographic information but also, with the help of the devices’ ability to track location, the places where they happen to be reading at the moment.

In addition, these personalized ads will become more pervasive, allowing advertisers to coordinate campaigns across a single user’s computer, e-reader, and cell phone, as well as the many other devices that are now being built with wireless connections, such as radios, TVs, watches, and cars. With the help of a highly efficient data clearinghouse, marketers will be able to update these campaigns instantaneously, based on such factors as the sites that we visit, the searches we make, and the places we go.

The ability to target a tightly knit group, such as a circle of friends or a family, has been of particular interest to marketers. Irwin Gotlieb, the CEO of GroupM, the largest advertising agency in the world, relates one example to Auletta: “Take disposable diapers. Should you just market to pregnant women? I would argue that maybe the grandmother has significant influence.” Thus if a daughter-in-law becomes pregnant and searches on Google for baby blogs, or looks at strollers on Amazon, the grandmother-to-be—whose relationship to her daughter-in-law could be discovered through Facebook, or perhaps through the social networking service Google is reported to be working on—may begin to notice a remarkable increase in diaper ads not only on the websites she visits but also, as more and more devices become tied together through wireless connections, over her radio, on her television, possibly on her toaster, and certainly on her cell phone, which, following another of Gotlieb’s suggestions, might start flashing with coupons for diapers when, through the phone’s location features, a marketer is made aware that she has walked into a supermarket or drugstore. It’s not hard to imagine a future in which an ill-informed grandmother-to-be might suspect that there will be a new addition to the family, simply by observing changes among the ads she’s served up hour by hour and day by day.

If we can imagine that future, we can also picture a more disturbing variation: perhaps a depressive son begins reading blog posts about suicidal thoughts, and shortly thereafter his parents begin to notice a rise in advertisements for psychotropic drugs and crisis prevention hotlines. Would that be wrong? Perhaps not, but as advertising becomes more personalized, pervasive, and instantaneously updatable, it seems likely that more and more users will want to opt out of a system that, though perhaps seldom deeply intrusive, will continually be pushing the boundaries of what we find acceptable. As The Wall Street Journal recently revealed, many companies, including Facebook, already provide advertisers with information that even their current minimal privacy policies are supposed to protect.

Sergey Brin has often complained about those who fear that Google might start acting as “Big Brother”; he emphasizes that Google allows users to opt out of data tracking, and that the company has done more than any other site to protect its users from government intrusions, especially in countries like China.1 But even small violations of privacy can trouble users, and if too many want to opt out of Google’s data tracking, the company, given the large premium that targeted ads command, will have every incentive to stop giving users the option not to participate, at least for free.

Google executives, according to the documents disclosed by the Journal, have already half-seriously considered allowing users to pay Google the amount that advertisers would otherwise offer the company to reach them, in exchange for receiving from Google a spotless, ad-free, premium Internet. The next obvious step would be to provide well-off users with the ability to opt out of all data tracking, and to offer a premium Internet with greater privacy, at a price.

It is at this point that the problems of net neutrality and of privacy might appear to come together. After all, wouldn’t such a two-tiered Internet, with privacy available only to those who can afford it, be the opposite of a “neutral Internet”? By defending net neutrality, wouldn’t we also defend privacy? The answer, unfortunately, is no, and the question goes some way to outlining the limits of net neutrality as a way of protecting our values online.

Net neutrality doesn’t have much meaning beyond the world of commerce. It is a way of using regulation to ensure a free and open market for competing sites; and a free-market Internet, like a free-market culture, can easily lead to a system in which people can pay to join “gated communities” that provide extra privacy protections. The advocates of net neutrality should be thanked for fighting so hard for an abstract, important principle, long before the consequences of its dissolution become apparent. Yet their success emphasizes the comparative neglect of privacy, an equally abstract and easily eroded principle.

Google, unlike Facebook, currently allows users to opt out of much of its data tracking by adjusting the options on their user profiles. The easiest way to protect privacy online might be a regulation that required all sites to provide this feature. Such an option, in the form of a “Do Not Track” list—modeled on the highly successful “Do Not Call” list for telemarketers—is currently being considered by the Federal Trade Commission.

Sites like Google, however, track information about their users not just to target advertisements, but to provide better services. An example would be a query about “Yankees”: if Google knows from past searches that a user is a Revolutionary War buff, the search engine can emphasize historical sites rather than baseball sites in the results it provides. This apparently small improvement, when applied across the entire search engine, can amount to the difference between going to a small library where the staff knows one’s interests and going to the main branch where one tends to remain a stranger. We have always traded a bit of our privacy in order to receive better service; if users reject data tracking to protect their privacy, it could be argued, they would have to give up other service improvements as well.

Google’s executives habitually speak of privacy in terms of these kinds of trade-offs, but the trade is more complicated than it may at first appear: websites certainly do need private information to provide high-level “functionality”—great search results, excellent e-mail services, useful social networks—but the private information collected for user services does not therefore have to be made available for commercial purposes such as advertising.

Fortunately, Google currently lets users opt out of targeted advertising, while still allowing data tracking for improved service to users. But there’s no guarantee that Google will continue to provide this option, and even if you do opt out of Google’s targeted advertising, the company may still use your private information for other commercial purposes. The most important step that regulators could take would be to permanently decouple the private information that sites need for personalized services—for example, helping you find a restaurant based on your current location—from the private information that sites may use for commercial purposes, such as informing advertisers of that location.

A “Do Not Track” list would likely be too simplistic an approach. What I would propose instead is something like a “Chinese Wall,” akin to the division maintained between the editorial and advertising departments of newspapers, or to the separation once mandated between commercial and investment banking (though hopefully more effective than both). The result would be a firm distinction between the data that companies must gather to provide helpful services and the personal data that companies may exploit for commercial purposes such as advertising. This solution would leave open the question of whether, in their zeal to provide better services, companies may learn a dangerously large amount about individuals, information that would be vulnerable to both theft and subpoena. But with such a clear division in place, Internet users would at least be able to opt out of data tracking for commercial purposes without degrading the level of service that they receive.

Google officials, in their discussions with the Federal Trade Commission last July, warned that even the limited policies being considered could “lock in a specific privacy model that may quickly become obsolete or insufficient due to the speed with which Internet services evolve.” While Google is certainly right that overly onerous regulations could limit innovation, it is hard to imagine in what circumstances a company could justify not allowing its users to opt out of having their personal information used for commercial purposes, should they feel that the tracking intrudes on their privacy. The speed with which the Internet evolves is precisely what makes simple but durable regulations necessary. And while a regulation such as a “Chinese Wall” (or anything else effective enough to maintain privacy) would make it harder for sites to profit online, it might also go some way toward protecting another value, privacy, that if left to profit-maximizing corporations could easily be eroded away.

—November 11, 2010

This issue: December 9, 2010