1.

In May 2018, a new data and privacy law will take effect in the European Union. The product of many years of negotiations, the General Data Protection Regulation is designed to give individuals the right to control their own information. The GDPR enshrines a “right to erasure,” also known as the “right to be forgotten,” as well as the right to transfer one’s personal data among social media companies, cloud storage providers, and others.

The European regulation also creates new protections against algorithms, including the “right to an explanation” of decisions made through automated processing. So when a European credit card issuer denies an application, the applicant will be able to learn the reason for the decision and challenge it. Customers can also invoke a right to human intervention. Companies found in violation are subject to fines rising into the billions of dollars.

Regulation has been moving in the opposite direction in the United States, where no federal legislation protects personal data. The American approach is largely the honor system, supplemented by laws that predate the Internet, such as the Fair Credit Reporting Act of 1970. In contrast to Europe’s Data Protection Authorities, the US Federal Trade Commission has only minimal authority to assess civil penalties against companies for privacy violations or data breaches. The Federal Communications Commission (FCC) recently repealed its net neutrality rules, which were among the few protections relating to digital technology.

These divergent approaches, one regulatory, the other deregulatory, follow the same pattern as antitrust enforcement, which faded in Washington and began flourishing in Brussels during the George W. Bush administration. But there is a convincing case that when it comes to overseeing the use and abuse of algorithms, neither the European nor the American approach has much to offer. Automated decision-making has revolutionized many sectors of the economy and it brings real gains to society. It also threatens privacy, autonomy, democratic practice, and ideals of social equality in ways we are only beginning to appreciate.

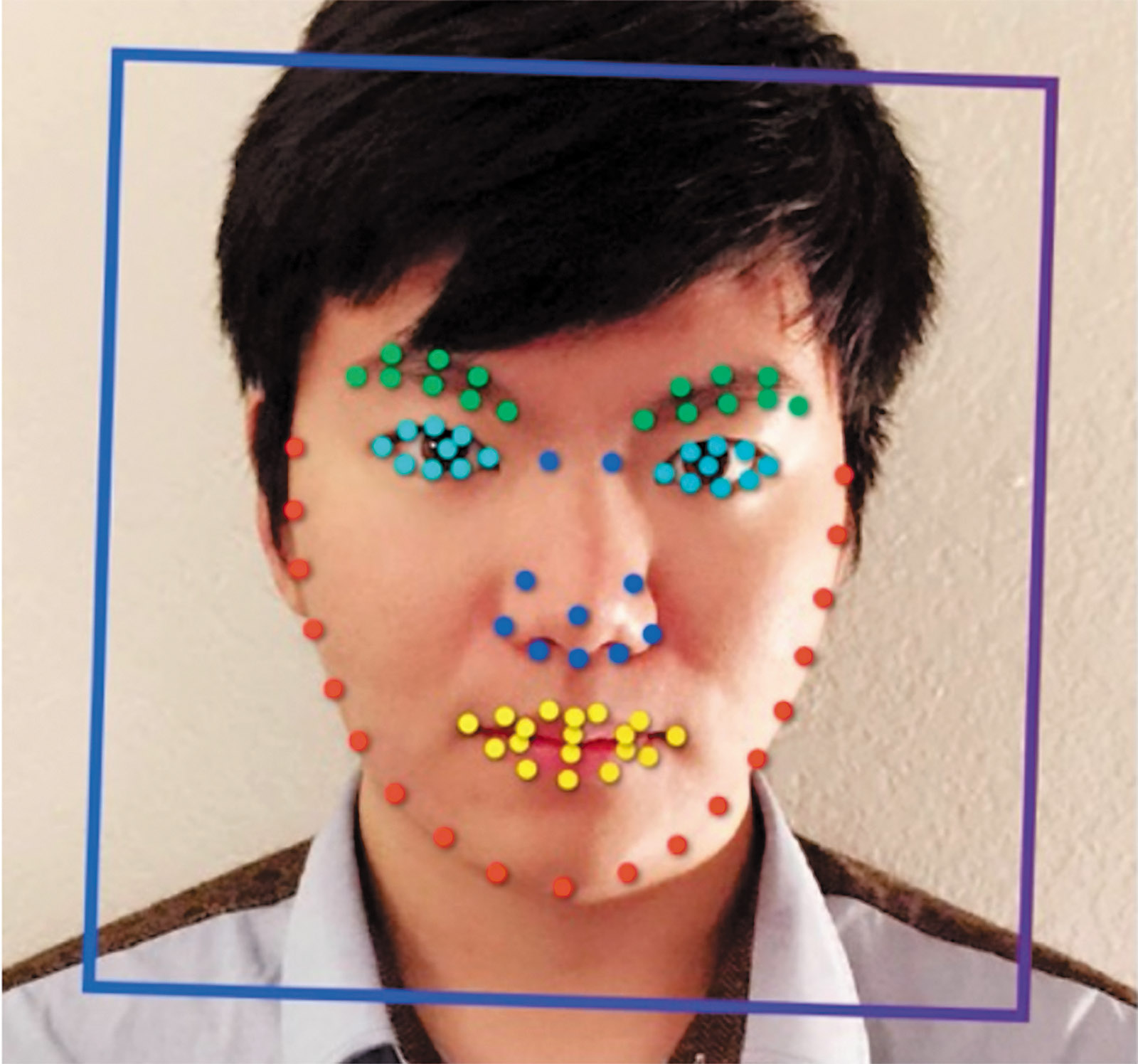

At the simplest level, an algorithm is a sequence of steps for solving a problem. The instructions for using a coffeemaker are an algorithm for converting inputs (grounds, filter, water) into an output (coffee). When people say they’re worried about the power of algorithms, however, they’re talking about the application of sophisticated, often opaque, software programs to enormous data sets. These programs employ advanced statistical methods and machine-learning techniques to pick out patterns and correlations, which they use to make predictions. The most advanced among them, including a subclass of machine-learning algorithms called “deep neural networks,” can infer complex, nonlinear relationships that they weren’t specifically programmed to find.

Predictive algorithms are increasingly central to our lives. They determine everything from what ads we see on the Internet, to whether we are flagged for increased security screening at the airport, to our medical diagnoses and credit scores. They lie behind two of the most powerful products of the digital information age: Google Search and Facebook’s Newsfeed. In many respects, machine-learning algorithms are a boon to humanity; they can map epidemics, reduce energy consumption, perform speech recognition, and predict what shows you might like on Netflix. In other respects, they are troubling. Facebook uses AI algorithms to discern the mental and emotional states of its users. While Mark Zuckerberg emphasizes the application of this technique to suicide prevention, opportunities for optimizing advertising may provide the stronger commercial incentive.

In many cases, even the developers of algorithms that employ deep learning techniques cannot fully explain how they produce their results. The German startup SearchInk has programmed a handwriting recognition algorithm that can predict with 80 percent accuracy whether a sample was penned by a man or woman. The data scientists who invented it do not know precisely how it does this. The same is true of the much-criticized “gay faces” algorithm, which can, according to its Stanford University creators, distinguish the faces of homosexual and heterosexual men with 81 percent accuracy. They have only a hypothesis about what correlations the algorithm might be finding in photos (narrower jaws and longer noses, possibly).

Machine learning of the kind used by the gay faces algorithm is at the center of several appalling episodes. In 2015, the Google Photos app labeled pictures of black people as gorillas. In another instance, researchers found that kitchen objects were associated with women in Microsoft and Facebook image libraries, while sporting equipment predicted maleness. Even a man standing at a stove was labeled a woman. In yet another case, Google searches for black-sounding first names like Trevon, Lakisha, and Darnell were 25 percent more likely to return arrest-related advertisements—including for websites that allow you to check a person’s criminal record—than those for white-sounding names. Like dogs that bark at black people, machine-learning algorithms lack the conscious intention to be racist, but seem somehow to absorb the bias around them. They often behave in ways that reflect patterns and prejudices deeply embedded in history and society.

Advertisement

The encoding of racial and gender discrimination via software design reliably inspires an outcry. In trying to fight biased algorithms, however, we run into two related problems. The first is their impenetrability. Algorithms have the legal protection of trade secrets, and at Google and Facebook they are as closely guarded as the fabled recipe for Coca-Cola. But even making algorithms transparent would not make them intelligible, as Frank Pasquale argues in his influential book The Black Box Society: The Secret Algorithms That Control Money and Information (2015). If the creators of complex machine-learning systems cannot explain how they produce their results, access to the source code will at best give experts a limited ability to discover and expose inherent flaws. Meaningless transparency threatens to lead to the same dead end as meaningless consent, whereby end-user license agreements (EULAs) filled with legal jargon fail to encourage caution or understanding.

The second problem is diffused responsibility. Like the racist dog, algorithms have been programmed with inputs that reflect the assumptions of a majority-white society. Thus they may amplify biases built into historical data, even when programmers attempt to explicitly exclude prejudicial variables like race. If machines are learning on their own, human accountability becomes trickier to ascribe.

We encounter this evasion of responsibility nearly every time an algorithmic technology comes under fire. In 2016, a ProPublica investigation revealed that Facebook’s advertising portal was allowing landlords to prevent African-Americans, Latinos, and other “ethnic affinity” groups from seeing their ads, in apparent violation of the Fair Housing Act and other laws. Facebook blamed advertisers for misusing its algorithm and proposed a better machine-learning algorithm as a solution. The predictable tendency at technology companies is to classify moral failings as technical issues and reject the need for direct human oversight. Facebook’s new tool was supposed to flag attempts to place discriminatory ads and reject them. But when the same journalists checked again a year later, Facebook was still approving the same kinds of biased ads; it remained a simple matter to offer rental housing while excluding such groups as African-Americans, mothers of high school students, Spanish speakers, and people interested in wheelchair ramps.1

2.

The hopeful assumption that software would be immune to the prejudices of human decision-makers has swiftly given way to the troubling realization that ostensibly neutral technologies can reflect and entrench preexisting biases. Two new books explore aspects of this insidious potential. In Algorithms of Oppression, Safiya Umoja Noble, who teaches at the University of Southern California’s Annenberg School of Communication, proposes that “marginalized people are exponentially harmed by Google.”

This is an interesting hypothesis, but Noble does not support it. Instead, she indicts Google with anti-imperialist rhetoric. The failed Google Glass project epitomizes the company’s “neocolonial trajectories.” Internet porn, available via Google, is “an expansion of neoliberal capitalist interests.” Google’s search dominance is a form of “cultural imperialism” that “only further entrenches the problematic identities in the media for women of color.”

Noble exemplifies the troubling academic tendency to view “free speech” and “free expression,” which she frames in quotation marks, as tools of oppression. Her preferred solution, which she doesn’t explore at any level of practical detail, is to censor offensive websites or, as she puts it, “suspend the circulation of racist and sexist material.” It’s hard to imagine an answer to the problem of algorithmic injustice that could be further off base. It might be technically if not legally possible to block politically sensitive terms from Internet searches and to demand that search engines filter their results accordingly. China does this with the help of a vast army of human censors. But even if throwing out the First Amendment doesn’t appall you, it wouldn’t actually address the problem of implicit bias. The point about algorithms is that they can encode and perpetuate discrimination unintentionally without any conscious expression ever taking place.

Noble bases her critique of Google primarily on a single outrageous example. As recently as 2011, if you searched for “black girls,” the first several results were for pornography. (The same was true for “Asian girls” and “Latina girls” but not to the same extent for “white girls.”) This is a genuine algorithmic horror story, but Noble fails to appreciate Google’s unexpected response: it chose to replace the pornography with socially constructive results. If you search today for “black girls,” the first return is for a nonprofit called Black Girls Code that encourages African-American girls to pursue careers in software engineering. Pornography is blocked, even on the later pages (though it remains easy enough to find by substituting other search terms).

Advertisement

By overriding the “organic” results, Google acknowledged the moral failure of its primary product. Producing a decent result, it recognized, required exactly what Facebook has resisted providing for its advertising portal: an interposition of human decency. When it was a less mature company, Google rejected this kind of solution too. In 2004, it refused demands to intervene in its results when the search for “Jew” produced as its top return an anti-Semitic site called Jewwatch.com. Today, Google routinely downgrades what it calls offensive and misleading search results and autocomplete suggestions, employing an army of search-quality raters to apply its guidelines.2 This solution points in a more promising direction: supervision by sensate human beings rather than categorical suppression. It’s not clear whether it might satisfy Noble, who isn’t much interested in distinctions between Google’s past and present practices.

Virginia Eubanks’s Automating Inequality, which turns from the private sector to the public sector, gets much closer to the heart of the problem. Its argument is that the use of automated decision-making in social service programs creates a “digital poorhouse” that perpetuates the kinds of negative moral judgments that have always been attached to poverty in America. Eubanks, a political scientist at SUNY Albany, reports on three programs that epitomize this dubious innovation: a welfare reform effort in Indiana, an algorithm to distribute scarce subsidized apartments to homeless people in Los Angeles, and another designed to reduce the risk of child endangerment in Allegheny County, Pennsylvania.

Former Indiana governor Mitch Daniels’s disastrous effort to privatize and automate the process for determining welfare eligibility in Indiana provides one kind of support for her thesis. Running for office in 2004, Daniels blamed the state’s Family and Social Services Administration for encouraging welfare dependency. After IBM, in concert with a politically connected local firm, won the billion-dollar contract to automate the system, Daniels’s mandate to “reduce ineligible cases” and increase the speed of eligibility determinations took precedence over helping the poor.

The new system was clearly worse in a variety of ways. An enormous backlog developed, and error rates skyrocketed. The newly digitized system lost its human face; caseworkers no longer had the final say in determining eligibility. Recipients who couldn’t get through to the call center received “failure to cooperate” notices. In the words of one state employee, “The rules became brittle. If [applicants] didn’t send something in, one of thirty documents, you simply closed the case for failure to comply…. You couldn’t go out of your way to help somebody.” Desperately ill children were denied Medicaid coverage.

Between 2006 and 2008, Eubanks writes, Indiana denied more than a million applications for benefits, an increase of more than 50 percent, with a strongly disparate impact on black beneficiaries. In 2000, African-Americans made up 46.5 percent of the state’s recipients of TANF, the main federally supported welfare program. A decade later, they made up 32.1 percent. Things got so bad that Daniels eventually had to acknowledge that the experiment had failed and cancel the contract with IBM.

3.

To paraphrase Amos Tversky, the Indiana experiment may have less to say about artificial intelligence than about natural stupidity. The project didn’t deploy any sophisticated technology; it merely provided technological cover for an effort to push people off welfare. By contrast, the Allegheny Family Screening Tool (AFST)—an algorithm designed to predict neglect and abuse of children in the county that includes Pittsburgh—is a cutting-edge machine-learning algorithm developed by a team of economists at the Auckland University of Technology. Taking in such variables as a parent’s welfare status, mental health, and criminal justice record, the AFST produces a score that is meant to predict a child’s risk of endangerment.

Public resistance prevented the launch of an observational experiment with the same algorithm in New Zealand. But in Allegheny County, a well-liked and data-minded director of the Department of Human Services saw it as a way to maximize the efficiency of diminishing resources provided by the Pennsylvania assembly for child welfare programs. The algorithm was deployed there in 2016.

Eubanks, who spent time at the call center where reports are processed, explains how the risk assessment works. When a call comes in to the child neglect and abuse hotline, the algorithm mines stored data and factors in other variables to rate the risk of harm on a scale of zero to twenty. She observes that its predictions often defy common sense: “A 14-year-old living in a cold and dirty house gets a risk score almost three times as high as a 6-year-old whose mother suspects he may have been abused and who may now be homeless.” And indeed, the algorithm is often wrong, predicting high levels of neglect and abuse that are not substantiated in follow-up investigations by caseworkers, and failing to predict much neglect and abuse that is found in subsequent months. In theory, human screeners are supposed to use the AFST as support for their decisions, not as the decision maker. “And yet, in practice, the algorithm seems to be training the intake workers,” she writes. Humans tend to defer to high scores produced by conceptually flawed software.

Among the AFST’s flaws is that it predicts harm to black and biracial children far more often than to white ones. How does racial bias come through in the algorithm? To a large extent, the AFST is simply mirroring unfairness built into the old human system. Forty-eight percent of the children in foster care in Allegheny County are African-American, even though only 18 percent of the total population of children are African-American. By using call referrals as its chief “outcome variable,” the algorithm perpetuates this disparity. People call the hotline more often about black and biracial families than about white ones. This reflects in part an urban/rural racial divide. Since anonymous complaints by neighbors lead to a higher score, the system accentuates a preexisting bias toward noticing urban families and ignoring rural ones.

Additional unfairness comes from the data warehouse, which stores extensive information about people who use a range of social services, but none for families that don’t. “The professional middle class will not stand for such intrusive data gathering,” Eubanks writes. Poor people learn two contradictory lessons from being assigned AFST scores. One is the need to act deferentially around caseworkers for fear of having their children taken away and placed in foster care. The other is to try to avoid accessing social services in the first place, since doing so brings more suspicion and surveillance.

Eubanks talks to some of the apparent victims of this system: working-class parents doing their best who are constantly surveilled, investigated, and supervised by state authorities, often as a result of what are apparently vendetta calls to the hotline from landlords, ex-spouses, or neighbors disturbed by noisy parties. “Ordinary behaviors that might raise no eyebrows before a high AFST score become confirmation for the decision to screen them in for investigation,” she writes. “A parent is now more likely to be re-referred to a hotline because the neighbors saw child protective services at her door last week.” What emerges is a vicious cycle in which the self-validating algorithm produces the behavior it predicts, and predicts the behavior it produces.

This kind of feedback loop helps to explain the “racist dog” phenomenon of ostensibly race-neutral criminal justice algorithms. If a correlation of dark skin and criminality is reflected in data based on patterns of racial profiling, then processing historical data will predict that blacks will commit more crimes, even if neither race nor a proxy for race is encoded as an input variable. The prediction brings more supervision, which supports the prediction. This seems to be what is going on with the Correctional Offender Management Profiling for Alternative Sanctions tool (COMPAS). ProPublica studied this risk-assessment algorithm, which judges around the country use to help make decisions about bail, sentencing, and parole, as part of its invaluable “Machine Bias” series.3

COMPAS produces nearly twice the rate of false positives for blacks that it does for whites. In other words, it is much more likely to inaccurately predict that an African-American defendant will commit a subsequent violent offense than it is for a white defendant. Being unemployed or having a parent who went to prison raises a prisoner’s score, which can bring higher bail, a longer prison sentence, or denial of parole. Correlations reflected in historical data become invisibly entrenched in policy without programmers having ill intentions. Quantified information naturally points backward. As Cathy O’Neil puts it in Weapons of Math Destruction: “Big Data processes codify the past. They do not invent the future.”4

In theory, the EU’s “right to an explanation” provides a way to at least see and understand this kind of embedded discrimination. If we had something resembling the General Data Protection Regulation in the United States, a proprietary algorithm like COMPAS could not hide behind commercial secrecy. Defendants could theoretically sue to understand how they were scored.

But secrecy isn’t the main issue. The AFST is a fully transparent algorithm that has been subject to extensive discussion in both New Zealand and the United States. While this means that flaws can be discovered, as they have been by Eubanks, the resulting knowledge is probably too esoteric and technical to be of much use. Public policy that hinges on understanding the distinctions among outcome variables, prediction variables, training data, and validation data seems certain to become the domain of technocrats. An explanation is not what’s wanted. What’s wanted is for the harm not to have occurred in the first place, and not to continue in the future.

Following O’Neil, Eubanks proposes a Hippocratic oath for data scientists, whereby they would vow to respect all people and to not compound patterns of discrimination. Whether this would have much effect or become yet another form of meaningless consent is open to debate. But she is correct that the answer must come in the form of ethical compunction rather than more data and better mining of it. Systems similar to the AFST and COMPAS continue to be implemented in jurisdictions across the country. Algorithms are developing their capabilities to regulate humans faster than humans are figuring out how to regulate algorithms.

-

1

See Julia Angwin and Terry Parris Jr., “Facebook Lets Advertisers Exclude Users by Race,” ProPublica, October 28, 2016; and Julia Angwin, Ariana Tobin, and Madeleine Varner, “Facebook (Still) Letting Housing Advertisers Exclude Users by Race,” ProPublica, November 21, 2017. ↩

-

2

Adrianne Jeffries, “Google Finally Realized That Racist Search Results Are a Problem,” The Outline, April 25, 2017. ↩

-

3

Julia Angwin, Jeff Larson, Surya Mattu, and Lauren Kirchner, “Machine Bias,” ProPublica, May 23, 2016. ↩

-

4

Weapons of Math Destruction: How Big Data Increases Inequality and Threatens Democracy (Crown, 2016), p. 204. ↩