Apparently, the age of the old-fashioned spook is in decline. What is emerging instead is an obscure world of mysterious boutique companies specializing in data analysis and online influence that contract with government agencies. As they say about hedge funds, if the general public has heard their names that’s probably not a good sign. But there is now one data analysis company that anyone who pays attention to the US and UK press has heard of: Cambridge Analytica. Representatives have boasted that their list of past and current clients includes the British Ministry of Defense, the US Department of Defense, the US Department of State, the CIA, the Defense Intelligence Agency, and NATO. Nevertheless, they became recognized for just one influence campaign: the one that helped Donald Trump get elected president of the United States. The kind of help the company offered has since been the subject of much unwelcome legal and journalistic scrutiny.

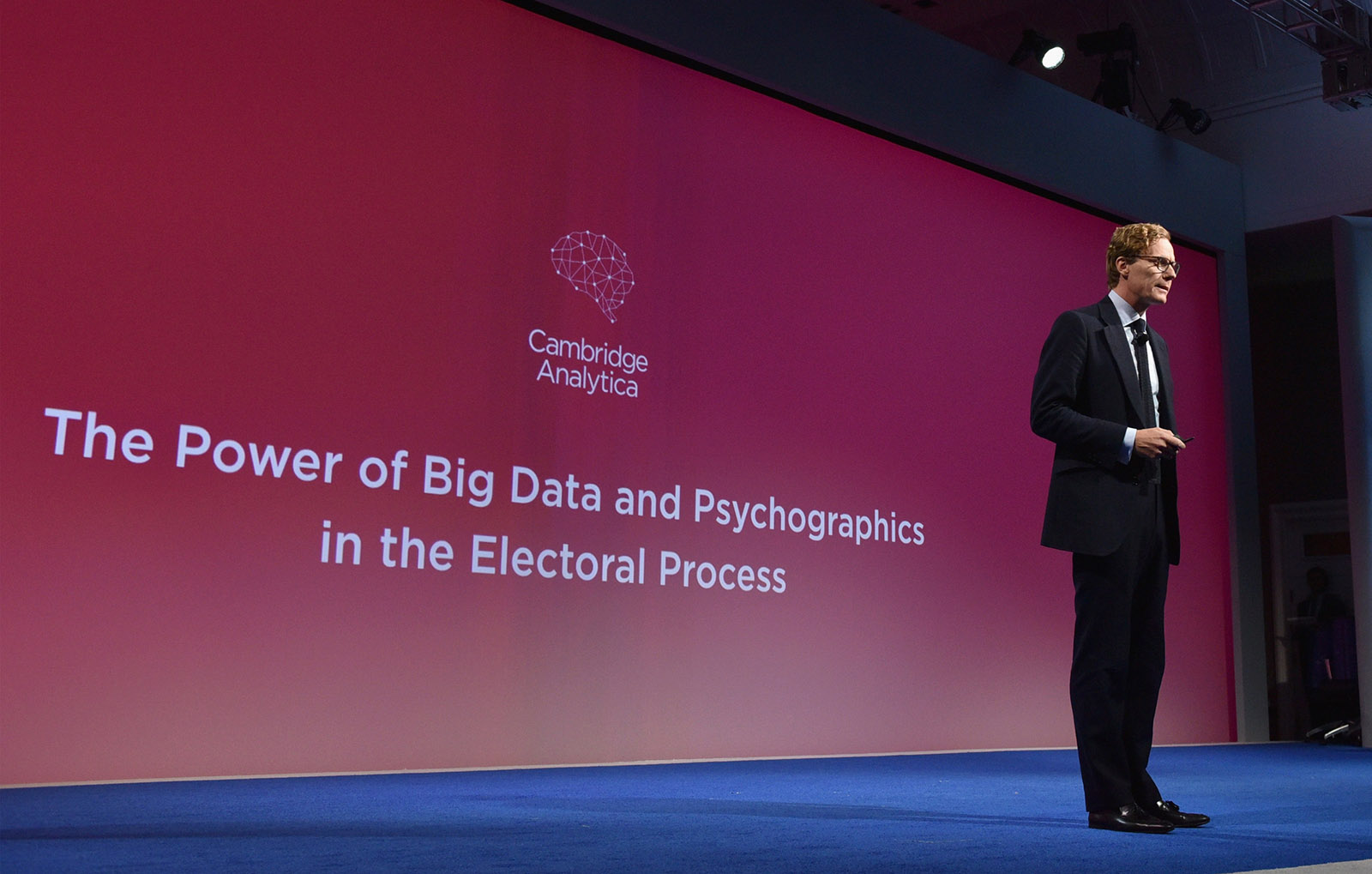

Carole Cadwalladr’s recent exposé of the inner workings of Cambridge Analytica shows that the company, along with its partner, SCL Group, should rightly be seen as a cautionary tale about the part private companies play in developing and deploying government-funded behavioral technologies. Her source, former employee Christopher Wylie, has described the development of influence techniques for psychological warfare by SCL Defense, the refinement of similar techniques by SCL Elections through their use across the developing world (for example, a “rumor campaign” deployed to spread fear during the 2007 election in Nigeria), and the purchase of this cyber-arsenal by Robert Mercer, the American billionaire who funded Cambridge Analytica, and who, with the help of Wylie, Trump campaign manager Steve Bannon, and the company’s chief executive Alexander Nix, deployed it on the American electorate in 2016.

But the revelations should also prompt us to ask deeper questions about the kind of behavioral science research that enables both governments and private companies to assume these powers. Two young psychologists are central to the Cambridge Analytica story. One is Michal Kosinski, who devised an app with a Cambridge University colleague, David Stillwell, that measures personality traits by analyzing Facebook “likes.” It was then used in collaboration with the World Well-Being Project, a group at the University of Pennsylvania’s Positive Psychology Center that specializes in the use of big data to measure health and happiness in order to improve well-being. The other is Aleksandr Kogan, who also works in the field of positive psychology and has written papers on happiness, kindness, and love (according to his résumé, an early paper was called “Down the Rabbit Hole: A Unified Theory of Love”). He ran the Prosociality and Well-being Laboratory, under the auspices of Cambridge University’s Well-Being Institute.

Despite its prominence in research on well-being, Kosinski’s work, Cadwalladr points out, drew a great deal of interest from British and American intelligence agencies and defense contractors, including overtures from the private company running an intelligence project nicknamed “Operation KitKat” because a correlation had been found between anti-Israeli sentiments and liking Nikes and KitKats. Several of Kosinski’s co-authored papers list the US government’s Defense Advanced Research Projects Agency, or DARPA, as a funding source. His résumé boasts of meetings with senior figures at two of the world’s largest defense contractors, Boeing and Microsoft, both companies that have sponsored his research. He ran a workshop on digital footprints and psychological assessment for the Singaporean Ministry of Defense.

For his part, Aleksandr Kogan established a company, Global Science Research, that contracted with SCL, using Facebook data to map personality traits for its work in elections (Kosinski claims that Kogan essentially reverse-engineered the app that he and Stillwell had developed). Kogan’s app harvested data on Facebook users who agreed to take a personality test for the purposes of academic research (though it was, in fact, to be used by SCL for non-academic ends). But according to Wylie, the app also collected data on their entire—and nonconsenting—network of friends. Once Cambridge Analytica and SCL had won contracts with the State Department and were pitching to the Pentagon, Wylie became alarmed that this illegally-obtained data had ended up at the heart of government, along with the contractors who might abuse it.

This apparently bizarre intersection of research on topics like love and kindness with defense and intelligence interests is not, in fact, particularly unusual. It is typical of the kind of dual-use research that has shaped the field of social psychology in the US since World War II. Much of the classic, foundational research on personality, conformity, obedience, group polarization, and other such determinants of social dynamics—while ostensibly civilian—was funded during the cold war by the military and the CIA. The cold war was an ideological battle, so, naturally, research on techniques for controlling belief was considered a national security priority. This psychological research laid the groundwork for propaganda wars and for experiments in individual “mind control.” The pioneering figures from this era—for example, Gordon Allport on personality and Solomon Asch on belief conformity—are still cited in NATO psy-ops literature to this day.

The recent revival of this cold war approach has taken place in the setting of the war on terror, which began in 1998 with Bill Clinton’s Presidential Decision Directive 62, making terrorism America’s national security priority. Martin Seligman, the psychologist who has bridged the military and civilian worlds more successfully than any other with his work on helplessness and resilience, was at the forefront of the new dual-use initiative. His research began as a part of a cold war program of electroshock experiments in the 1960s. He subjected dogs to electric shocks, rendering them passive to the point that they no longer even tried to avoid the pain, a state he called “learned helplessness.” This concept then became the basis of a theory of depression, along with associated ideas about how to foster psychological resilience.

In 1998, Seligman founded the positive psychology movement, dedicated to the study of psychological traits and habits that foster authentic happiness and well-being, spawning an enormous industry of popular self-help books. At the same time, his work attracted interest and funding from the military as a central part of its soldier-resilience initiative. Seligman had previously worked with the CIA and even before September 11, 2001, his new movement was in tune with America’s shifting national security priorities, hosting in its inaugural year a conference in Northern Ireland on “ethno-political conflict.”

But it was after the September 11 attacks that terrorism became Seligman’s absolute priority. In 2003, he said that the war with jihadis must take precedence over all other academic research, saying of his colleagues: “If we lose the war, the laudable, but pet projects they endorse, will not be issues… If we win this war, we can go on to pursue the normal goals of science.” Money poured into the discipline for these purposes. The Department of Homeland Security established Centers of Excellence in universities for interdisciplinary research into the social and psychological roots of terrorism. Elsewhere, scholars worked more obliquely on relevant behavioral technologies.

Some of the psychological projects cultivated under the banner of the war on terror will be familiar to many readers. Psychologists such as Jonathan Haidt and Steven Pinker, and their colleagues in other disciplines (most prominently, the Harvard Law professor Cass Sunstein) rehabilitated the cold war research on “group polarization” as a way of understanding not, this time, the radicalism that feeds “totalitarianism,” but the equally amorphous notion of “extremism.” They sought to combat extremism domestically by promoting “viewpoint diversity” both on campus (through organizations such as the Heterodox Academy, run by Haidt and funded by libertarian billionaire Paul Singer) and online, suggesting ways in which websites might employ techniques from social psychology to combat phenomena such as “confirmation bias.” Their notion of “appropriate heterogeneity” (Sunstein) in moral and political views remains controversial.

Seligman himself saw the potential for using the Internet to bring his research on personality together with new ways of gathering data. This project began shortly after the September 11 attacks, with a paper on “Character Strengths Before and After September 11,” which focused on variations in traits such as trust, love, teamwork, and leadership. It ultimately evolved into the innovative World Well-Being Project at Penn. Seligman also fostered links with Cambridge University, where he is on the board of the Well-Being Institute that employs the same kind of psychometric techniques. The aim of these programs is not simply to analyze our subjective states of mind but to discover means by which we can be “nudged” in the direction of our true well-being as positive psychologists understand it, which includes attributes like resilience and optimism. Seligman’s projects are almost all funded by the Templeton Foundation and may have been employed for entirely civilian purposes. But in bringing together the personality research and the behavioral technologies that social psychologists had for decades been refining with the new tool of big data (via the astonishing resources provided by social media), they have created an important template for what is now the cutting-edge work of America’s intelligence community.

In 2008, then Secretary of Defense Robert Gates commissioned the Minerva Initiative, funded by the DoD, which brought researchers in the social sciences together to study culture and terrorism, and specifically supported initiatives involving the analysis of social media. One of the Cornell scientists involved also participated in the famous and controversial Facebook study of emotional contagion. Less well known is the Open Source Indicators program at the Intelligence Advanced Research Projects Activity, or IARPA (a body under the Director of National Intelligence), which has aimed to analyze social media in order to predict social unrest and political crises.

In a 2014 interview, Lt. Gen. Michael Flynn, speaking then as head of the Defense Intelligence Agency, said that such open-source data initiatives, and in particular the study of social media such as Facebook, had entirely transformed intelligence-gathering. He reported that traditional signals intelligence and human intelligence were increasingly being replaced by this open-source work and that the way in which intelligence agents are trained had been modified to accommodate the shift. A growing portion of the military’s $50 billion budget would be spent on this data analytics work, he claimed, creating a “gold rush” for contractors. A few weeks after this interview, Flynn left the DIA to establish the Flynn Intel Group Inc. He later acted as a consultant to the SCL Group.

Carole Cadwalladr reported in The Observer last year that it was Sophie Schmidt, daughter of Alphabet founder Eric Schmidt, who made SCL aware of this gold rush, telling Alexander Nix, then head of SCL Elections, that the company should emulate Palantir, the company set up by Peter Thiel and funded with CIA venture capital that has now won important national security contracts. Schmidt threatened to sue Cadwalladr for reporting this information. But Nix recently admitted before a parliamentary select committee in London that Schmidt had interned for Cambridge Analytica, though he denied that she had introduced him to Peter Thiel. Aleksandr Kogan and Christopher Wylie allowed Cambridge Analytica to evolve into an extremely competitive operator in this arena.

It was by no means inevitable that dual-use research at the intersection of psychology and data science would be employed along with illegally-obtained caches of data to manipulate elections. But dual-use research in psychology does seem to present a specific set of dangers. Many areas of scientific research have benefited from dual-use initiatives. The National Cancer Institute began its life in the early 1970s as part of a coordinated program examining the effects of tumor agents developed as bio-weapons at Fort Detrick. The National Institute of Allergy and Infectious Diseases, similarly, researched the effects of militarily manufactured hazardous viruses. This was the foundation of a biotechnology industry that has become a paradigm case of dual use and has led, in spite of its more sinister side, to invaluable medical breakthroughs. But the development of behavioral technologies intended for military-grade persuasion in cyber-operations is rooted in a specific perspective on human beings, one that is at odds with the way they should be viewed in democratic societies.

I’ve written previously about the way in which a great deal of contemporary behavioral science aims to exploit our irrationalities rather than overcome them. A science that is oriented toward the development of behavioral technologies is bound to view us narrowly as manipulable subjects rather than rational agents. If these technologies are becoming the core of America’s military and intelligence cyber-operations, it looks as though we will have to work harder to keep these trends from affecting the everyday life of our democratic society. That will mean paying closer attention to the military and civilian boundaries being crossed by the private companies that undertake such cyber-operations.

In the academic world, it should entail a refusal to apply the perspective of propaganda research more generally to social problems. From social media we should demand, at a minimum, much greater protection of our data. Over time, we might also see a lower tolerance for platforms whose business model relies on the collection and commercial exploitation of that data. As for politics, rather than elected officials’ perfecting technologies that give them access to personal information about the electorate, their focus should be on informing voters about their policies and actions, and making themselves accountable.