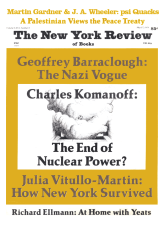

The case for nuclear power has always rested on two claims: that reactors were reasonably safe and that they were indispensable as a source of energy. Now the accident at Three Mile Island has shaken the first claim, and we will soon have to face the flaws in the second. The result should be the abandonment of nuclear power and the emergence of a more rational energy policy, based on measures to improve the efficiency with which energy from fossil fuels is used.

The dangers of nuclear power have been greatly underestimated, while its potential to replace oil as the world’s primary energy source has been vastly exaggerated. Rather than being indispensable, nuclear power can make, at best, only a modest and easily replaceable contribution to future energy requirements.

Nuclear power plants currently generate 13 percent of the electricity produced in the United States, and slightly smaller percentages in Western Europe and Japan. (Countries such as Sweden and Switzerland, which depend on nuclear reactors for 20 percent of their electricity, are exceptions.) Since electricity accounts for only 30 percent of the total energy supply, nuclear power provides less than 4 percent of the overall energy of the industrial countries. More surprisingly, as the economist Vince Taylor has shown in a report to the US Arms Control and Disarmament Agency, nuclear power will provide, at most, only a 10 to 15 percent share of the energy supply of the advanced countries by the year 2000.[1]
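The "less than 4 percent" figure is one share multiplied by another. Here is a minimal Python sketch of that step, using the US percentages quoted above. The function name is an illustrative choice and does not come from the source.

```python
def share_of_total_energy(nuclear_share_of_electricity: float,
                          electricity_share_of_energy: float) -> float:
    """Return nuclear power's share of total energy supply (as a fraction)."""
    return nuclear_share_of_electricity * electricity_share_of_energy


# US figures from the text: nuclear plants supply 13 percent of electricity,
# and electricity accounts for 30 percent of total energy supply.
us_share = share_of_total_energy(0.13, 0.30)
print(f"Nuclear share of US energy supply: {us_share:.1%}")
```

The result, 3.9 percent, is the source of the "less than 4 percent" in the text.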

Taylor’s argument begins with the fact that nuclear power provides only electricity—an expensive form of energy which absorbs only 10 percent of the oil used in the US and other advanced countries. The great hopes for nuclear energy were based on the possibility that electricity could be substituted for oil in processes where electric power had not been heavily used. In fact, little such substitution has occurred. The petrochemical and transport industries, including automobiles, now use 50 percent of available oil. There is no foreseeable technical possibility of electrifying large proportions of these industries. The remaining 40 percent of oil is used for heating and for industrial energy. In both cases, major electrification is ruled out by nuclear power’s high cost.

What are the relative costs of energy from nuclear power? They are rarely compared to the costs of burning oil in engines or furnaces, yet such a comparison is central if we are to weigh the prospects of substituting nuclear power for oil. If we carefully estimate the different kinds of costs involved in producing kilowatts at nuclear plants and in obtaining barrels of oil, we find that the cost of energy from a nuclear plant built today can be calculated at $100 for the “heat equivalent of a barrel of oil.” This figure reflects fuel, maintenance, and—most important—the cost of constructing the nuclear plant itself. It is over four times the cost of heat from oil at OPEC prices.

The heat in electricity, of course, can be used more efficiently than heat from oil and gas: as much as twice as efficiently. For example, electric furnaces produce about 50 percent more glass, per unit of applied heat, than gas-burning furnaces. When we allow for this, nuclear electricity still remains two to three times as expensive as oil. As a result, the market for nuclear electricity has slowly but inexorably been drying up. Notwithstanding plans to build hundreds of reactors, the projected demand for nuclear power, and with it the financial backing for its expansion, has not materialized.
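The step from "over four times" to "two to three times" can be made explicit. The sketch below takes an oil price of $24 per barrel purely as an illustrative assumption, since the text says only that $100 is over four times the OPEC-price cost of heat from oil. It credits electricity with an efficiency advantage between 1.5 (the glass-furnace example) and 2 (the upper bound quoted above).

```python
NUCLEAR_COST_PER_BARREL_EQUIV = 100.0  # dollars, from the text
ASSUMED_OIL_PRICE = 24.0  # dollars per barrel; illustrative assumption only


def effective_cost_ratio(nuclear_cost: float, oil_cost: float,
                         efficiency_advantage: float) -> float:
    """Cost of nuclear heat relative to oil heat, after crediting electricity
    with being `efficiency_advantage` times as useful per unit of heat."""
    raw_ratio = nuclear_cost / oil_cost
    return raw_ratio / efficiency_advantage


for advantage in (1.5, 2.0):
    ratio = effective_cost_ratio(NUCLEAR_COST_PER_BARREL_EQUIV,
                                 ASSUMED_OIL_PRICE, advantage)
    print(f"efficiency advantage {advantage}: nuclear costs {ratio:.2f}x oil")
```

Dividing a raw ratio of just over four by an advantage of 1.5 to 2 leaves nuclear heat at roughly two to three times the cost of oil, as the text states.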

It wasn’t always this way. At the start of 1972, the first plants purchased by the electric utilities on a commercial basis were entering service at a cost of $225 million for a standard thousand-megawatt generator. This cost converts to the equivalent of about $25 per barrel of oil. Costs were expected to fall as more plants were built. The tripling of prices for crude oil and coal in the wake of the 1973 Arab oil embargo was expected to put nuclear power in a commanding market position. Not only was it apparently cheaper than coal as a source of electricity, but nuclear electricity seemed competitive with oil both for heating and for industrial purposes. Nuclear electricity cost only twice as much as oil per British thermal unit (i.e., per unit of delivered heat), and since this heat was available in a more efficient form, it was close to oil in energy value. Moreover, nuclear electricity was expected to decline in cost while oil rose. Few doubted the Atomic Energy Commission’s 1974 projection that 1,000 reactors of a thousand megawatts each would be built by the end of the century, enough to produce roughly the equivalent of the entire US energy supply in 1972.

Instead, nuclear costs have soared as the industry expanded, primarily for two reasons: safety systems had to be added to meet rising public concerns, and improvements in design were needed to correct defects that emerged as operating experience increased. By the end of 1977, a thousand-megawatt reactor cost $900 million, four times the cost of a 1972 plant. The rate of increase was an astounding 26 percent per year. This was triple the rate of general US inflation and half again as great as the increase in construction costs of power generators using coal, notwithstanding new emission controls. It was about the same rate of increase as that for the price of oil, despite the actions of the OPEC cartel. Nuclear power had thus lost its edge over coal in the electric power market, and had lost the opportunity to make inroads on the larger market occupied by oil and gas.
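The 26 percent figure is the compound annual rate that quadruples a cost over the roughly six years from the start of 1972 to the end of 1977. Here is a short sketch of that calculation. Treating the interval as exactly six years is an assumption.

```python
def annual_growth_rate(start_cost: float, end_cost: float, years: float) -> float:
    """Compound annual rate that turns start_cost into end_cost over `years`."""
    return (end_cost / start_cost) ** (1.0 / years) - 1.0


# Thousand-megawatt reactor: $225 million (start of 1972) to
# $900 million (end of 1977), taken here as six years.
rate = annual_growth_rate(225e6, 900e6, 6)
print(f"Implied escalation: {rate:.1%} per year")
```

A fourfold increase over six years works out to the sixth root of 4, about 1.26, hence 26 percent a year.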

Advertisement

To be sure, these figures represent the findings of the author’s own cost research. They have not yet been digested by the power industry, which still claims that nuclear reactors are the least costly source of electricity.[2] But the impact of steeply rising costs and concomitant delays in licensing and constructing reactors has not been lost on nuclear advocates. Before the breakdown at Harrisburg, they were stridently demanding that Congress and the regulatory authorities “stabilize” reactor design standards as a solution to rising costs. Now the Harrisburg accident has turned the prospect of a reactor disaster from an infinitesimally remote possibility into a real one. This guarantees that safety requirements will be stiffened so that costs will continue to rise sharply, offsetting probable increases in the cost of oil. The events at Harrisburg have pushed nuclear power beyond the brink of economic acceptability.

Moreover, the accident has undermined confidence in the nuclear industry. As the laxity of safety regulations at Three Mile Island becomes widely known, it may undermine confidence in the Nuclear Regulatory Commission as well. The Commission had composed a supposedly exhaustive list of possible “initiating chains” for nuclear accidents and concluded that, in view of its precautions, US reactors were statistically safe. The sequence of events which caused the Harrisburg accident was not among these “chains.” Henceforth every major decision about nuclear power, especially any concerned with the disposal of radioactive wastes, will require so much public scrutiny that delays and costs will become intolerable. Well before the Harrisburg events, seven state legislatures had already passed laws to stop, or control, the storage and transport of nuclear wastes. We can now expect that pressure for more stringent legislation will mount.

Of course, for many people the prospect of permanent oil shortages causing economic stagnation is worse than unsafe and expensive nuclear power. And, in fact, nuclear power will be justified in the months ahead by references to the world’s limited store of easily extractable oil. The median estimate of the total quantity of world oil that remains to be exploited is one-and-a-half to two trillion barrels. This would be enough for a one-hundred-year supply at the current rate of consumption. It will not suffice if world needs grow at several percent per year—the rate of energy growth generally considered necessary to support healthy economic growth. At 3 percent annual energy growth, for example, this supply would be exhausted in fifty years. And, well before then, physical limits on the potential rate of discovery and extraction would force the level of oil output below demand.

The possibility that potential oil resources may be greater than is now anticipated does not significantly alter this pessimistic picture. Aside from the doubtful wisdom of tying the world’s economic prospects to unexpected discoveries, even a doubling of total oil resources would add only another twenty years of supply, assuming 3 percent annual growth in consumption. Oil is simply incapable of sustaining world energy growth for more than a few decades.
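Both lifetimes can be checked with the standard formula for how long a fixed resource lasts when consumption grows exponentially. If the reserve would last T0 years at today's rate, at a growth rate r it lasts ln(1 + r·T0)/r years. The sketch below applies this to the figures above. The formula is a textbook approximation (continuous compounding) supplied here for illustration; it is not the author's stated method.

```python
import math


def exhaustion_time(static_years: float, growth_rate: float) -> float:
    """Years until a resource is used up when consumption grows at
    `growth_rate` per year (continuous compounding).

    static_years is how long the resource would last at the current,
    constant rate of consumption.
    """
    if growth_rate == 0:
        return static_years
    return math.log(1 + growth_rate * static_years) / growth_rate


base = exhaustion_time(100, 0.03)     # the text's 100-year supply
doubled = exhaustion_time(200, 0.03)  # total resources doubled
print(f"Supply at 3% growth: {base:.0f} years")
print(f"With resources doubled: {doubled:.0f} years (+{doubled - base:.0f})")
```

This gives about 46 years and about 19 additional years for a doubled resource base. Both are consistent with the text's round figures of fifty and twenty. Because exponential growth compounds, doubling the resource buys far less than doubling the lifetime.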

Nor are coal or natural gas, the other fossil fuels, likely to take up much of the slack. Natural gas is less abundant than oil. Moreover, continued growth of gas consumption would require that it be transported in liquefied form (a matter of growing controversy) and would maintain the present, undesirable level of dependence on imports from the Middle East.

The world’s recoverable coal reserves are several times those of oil and will now be increasingly exploited in the US. But environmental and cost constraints analogous to those impeding nuclear power will probably prohibit developing coal on the scale necessary to increase energy growth significantly. During the past decade, efforts to reduce injuries and deaths in US mines, and to contain pollution from coal burning, have caused the cost of coal-fired electricity to increase at twice the general inflation rate. Any increase in the rate of expansion of the coal industry would probably accelerate this trend.

Moreover, hopes of manufacturing large quantities of synthetic oil and gas from coal or shale appear doomed by both inherent inefficiencies and environmental effects. The costs of dealing with both would be staggering. According to a study by energy specialists at the Massachusetts Institute of Technology,[3] the production of synthetic fuels equivalent to only one-tenth of the current US energy supply would require mining the equivalent of the entire US coal output in 1975. Processing this coal would require forty-two gasification facilities and six oil shale plants, costing over $1 billion each, as well as forty-seven liquefaction facilities, each costing one-half billion dollars. Oil and gas from such facilities would be several times as costly as OPEC oil and gas.
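Adding up the facilities listed gives a lower bound on the capital bill, because the gasification and shale plants are each said to cost over $1 billion. Here is a quick sketch using only the counts and unit costs quoted above.

```python
# (number of plants, cost per plant in billions of dollars), as quoted in the text.
# Gasification and oil shale costs are lower bounds ("over $1 billion each").
facilities = {
    "gasification": (42, 1.0),
    "oil shale": (6, 1.0),
    "liquefaction": (47, 0.5),
}

total = sum(count * unit_cost for count, unit_cost in facilities.values())
print(f"Total capital cost: at least ${total:.1f} billion")
```

The minimum comes to $71.5 billion for synthetic fuels amounting to only a tenth of current US energy supply.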

Advertisement

Furthermore, since no commercial-size synthetic fuel plants are operating as yet, little attention has been devoted to the environmental effects of these technologies. If we follow the known pattern for energy projects with potentially large effects on the environment, we can estimate that the construction costs currently cited for commercial synthetic fuel plants could easily double or triple, ensuring that such fuels would remain uneconomical.

Similar considerations apply to nuclear fusion and solar space satellites. Even if the considerable scientific and engineering problems can be solved, high costs will consign these “exotic” high-technology schemes to a minuscule share of energy supply.

Aside from nuclear power, only solar energy—which includes, in addition to sunlight, other sun-driven sources such as wind, water, and plants—appears to hold the technical potential to provide sufficient energy to support the world’s economies. However, it will take a long time for solar energy to become the dominant source of world energy. It will first be necessary to eliminate inefficiencies in solar technologies, to allow turnover of much of the existing housing stock which is unsuited for solar energy, and to permit national economies to absorb solar energy’s high costs. Solar technologies are now generally less expensive than electricity from new nuclear plants, but cost considerably more than oil.

The earliest anticipated date by which this transition could be accomplished in most advanced countries is 2025. But before then, it is likely that oil extraction could not keep up with increasing oil requirements, assuming that the amount of energy in use increases by several percent a year. Some experts argue that nuclear power, despite its high risks and poor economic prospects, is therefore needed at least as a supplementary, transitional energy source, to avert major shortages of fossil fuels which would imperil economic growth.

However, this view ignores perhaps the most significant—and certainly the most neglected—factor in the current discussion: the large potential for reducing the amounts of energy used, such as oil or gas, without affecting the quality or quantity of energy services, such as heating, lighting, and transportation. Energy must be combined with other materials, equipment, and labor to provide energy services. The amount of energy required to provide a given service can thus vary widely, depending upon the amounts of other resources used and the technology employed. As Vince Taylor points out in the paper cited earlier, the productivity of energy is not fixed, but is susceptible to deliberate change.

Technological innovations, such as those occurring in solid-state electronics, will surely be one source of improvement in energy productivity, but major technical advances are not required for a successful program of productivity increases. A variety of simple measures could allow energy services to be expanded without increasing the amount of energy consumed.

For example, if the walls and ceilings of new homes were equipped with insulation, using only amounts which would pay back the added cost through fuel savings within ten years, this would reduce fuel consumption by one-third or more, by comparison to consumption in typical houses today. A further reduction by one-half could be accomplished by a variety of building improvements: thick walls to moderate temperature changes; large, south-facing windows to capture winter sunlight; sophisticated thermal controls to match heating output to temperature desired; and better designed and maintained furnaces. These changes, along with insulation, could reduce fuel requirements for home heating to less than one-third of the present average, with no loss of comfort. Even this estimate does not exhaust the possibilities of reducing the energy consumed, since the addition of sufficient solar collectors and of heat-storage capacity could altogether eliminate fuel consumption for heating new houses.
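The two reductions compound rather than add. Removing one-third of fuel use and then half of what remains leaves one-third of the original, not the one-sixth that simply adding the cuts would suggest. Because the insulation saving is "one-third or more," the result is one-third or less, which matches the text. Here is a sketch with exact fractions.

```python
from fractions import Fraction

# Fractional reductions described in the text, applied one after the other.
insulation_cut = Fraction(1, 3)  # "one-third or more"
building_cut = Fraction(1, 2)    # "a further reduction by one-half"

remaining = (1 - insulation_cut) * (1 - building_cut)
print(f"Fuel use relative to today's average: {remaining} ({float(remaining):.0%})")
```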

Similar savings are available in every part of the economy. One study by a team of industrial engineers from private industry and the Massachusetts Institute of Technology[4] compared US energy requirements, and their costs, in 1985 under two plans. The engineers’ own plan stressed improved energy productivity of the kind I have described; the other was a government plan stressing expansion of coal and nuclear power. Energy requirements for 1985 under the engineers’ plan would be eighty “Quads,” or quadrillion British thermal units (US energy consumption in 1978 was seventy-eight Quads), or 21 percent less than the 101 Quads envisioned under the government plan. The savings would come primarily from improved auto mileage (5.6 Quads), improvements in industry such as heat recuperators and more efficient electric motors (4.5 Quads), generating electricity in conjunction with steam in factories (2.4 Quads), and improvements in the efficiency of major appliances such as air conditioners and refrigerators (2.5 Quads).
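The 21 percent figure is measured against the government plan's 101 Quads. The four items the text singles out account for most, though not all, of that gap. The text does not itemize the rest. Here is a short check.

```python
ENGINEERS_PLAN = 80    # Quads required in 1985
GOVERNMENT_PLAN = 101  # Quads required in 1985

# Main sources of the engineers' savings, in Quads, as listed in the text.
savings = {
    "auto mileage": 5.6,
    "industrial heat recuperators and motors": 4.5,
    "electricity generated with factory steam": 2.4,
    "appliance efficiency": 2.5,
}

gap = GOVERNMENT_PLAN - ENGINEERS_PLAN
print(f"Reduction vs. government plan: {gap} Quads ({gap / GOVERNMENT_PLAN:.0%})")
itemized = sum(savings.values())
print(f"Itemized savings: {itemized:.1f} Quads of the {gap}-Quad gap")
```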

All of these measures, the authors judged, could be achieved by 1985 with present US technology. But what is most striking about the engineers’ proposal is its saving in capital investment. Whereas the government plan would have required a total investment between 1975 and 1985 of $648 billion (88 percent to expand supply and 12 percent for energy productivity), the engineers’ plan would require $318 billion, or only half as much. This sum would be divided evenly between investments in supply, such as boilers producing both electricity and steam, and investments in improving energy productivity.
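Breaking both totals into their supply and productivity components shows where the difference lies. Here is a sketch using the percentages and the even split stated above.

```python
GOVERNMENT_TOTAL = 648  # billions of dollars, 1975-1985
ENGINEERS_TOTAL = 318   # billions of dollars, 1975-1985

gov_supply = 0.88 * GOVERNMENT_TOTAL
gov_productivity = 0.12 * GOVERNMENT_TOTAL
eng_supply = eng_productivity = ENGINEERS_TOTAL / 2  # "divided evenly"

print(f"Government plan: ${gov_supply:.0f}B supply, ${gov_productivity:.0f}B productivity")
print(f"Engineers' plan: ${eng_supply:.0f}B supply, ${eng_productivity:.0f}B productivity")
print(f"Engineers' total as share of government total: {ENGINEERS_TOTAL / GOVERNMENT_TOTAL:.0%}")
```

The engineers' plan spends roughly twice as much as the government plan on productivity ($159 billion against about $78 billion). At the same time, it cuts supply investment by more than two-thirds (from about $570 billion to $159 billion).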

The large potential for improving the productivity of energy is not confined to the United States. It has been demonstrated in detailed studies in virtually every other industrial country, even those thought to be much more efficient in their use of energy than the United States. For example, a recent analysis in the United Kingdom[5] concluded that applying currently available technologies could reduce energy requirements from 8.7 Quads today to 8.0 in the year 2025, while economic output would triple. Savings would come mostly from reducing heat losses in buildings, raising auto mileage through lighter, redesigned vehicles and electronic control of engine operation, increasing the efficiency of industrial electric motors through proper sizing and coupling, and improving the efficiency of household appliances.
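Combining the two halves of the British projection gives the implied gain in energy productivity, that is, in economic output per unit of energy. Here is a sketch.

```python
energy_today, energy_2025 = 8.7, 8.0  # Quads
output_multiplier = 3.0               # economic output triples

# Energy used per unit of economic output in 2025, relative to today.
intensity_ratio = (energy_2025 / energy_today) / output_multiplier
print(f"Energy per unit of output in 2025: {intensity_ratio:.0%} of today's")
print(f"Energy productivity gain: {1 / intensity_ratio:.2f}x")
```

Output per unit of energy would rise by a factor of about 3.26, so each unit of output would need less than a third of the energy it needs today.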

Broadly similar results have also been obtained in studies in France—where lack of indigenous fuels has been used to justify a huge commitment to nuclear power—as well as Denmark, Sweden, the Netherlands, West Germany, and Switzerland. The common conclusion of these studies is that improvements in energy productivity can extend the lifetime of oil and gas resources sufficiently to obtain the time needed by the industrial countries for an orderly transition to solar energy.

Energy productivity has in fact been rising since the oil price increase of 1973. In the United States, economic growth has recently been running at one and a half times energy growth. Gains have been smaller in Europe, in part because of the lower rate of increase in the cost of imported oil (owing to appreciation in the value of most European currencies against the dollar), and also because of greater investment in electrification, which generally yields less economic output per unit of energy. Further improvements in energy productivity will come as energy prices rise, but a strategy aimed at eliminating so-called “institutional traps” inhibiting conservation could coax forth greater energy savings at less cost to consumers.

One such trap is the rate structure under which utilities sell electricity and gas. Rates are set by state authorities here and by national bodies in Europe. Generally they decline with increasing consumption, so that reductions in usage resulting from conservation bring about less-than-proportional reductions in electricity and gas bills. Such rate structures made economic sense in the days when expansion of supply led to more efficient production, which in turn reduced average costs. However, such rate structures make no sense today, when new, “incremental” supplies of electricity and gas are available only at rising costs. The effect of these structures is to discourage conservation since, for example, a 20 percent reduction in electricity usage typically reduces customer bills by only 10 to 15 percent.
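A made-up tariff shows why a declining block rate blunts the reward for conservation. The fixed charge, block size, and prices below are hypothetical and were chosen only to illustrate the mechanism. They are not taken from any actual rate schedule.

```python
# Hypothetical declining-block tariff (illustrative numbers only).
FIXED_CHARGE = 5.00       # dollars per month
FIRST_BLOCK_KWH = 300     # kWh billed at the higher price
FIRST_BLOCK_PRICE = 0.06  # dollars per kWh in the first block
TAIL_PRICE = 0.03         # dollars per kWh beyond the first block


def monthly_bill(kwh: float) -> float:
    """Bill under the hypothetical declining-block tariff."""
    first = min(kwh, FIRST_BLOCK_KWH) * FIRST_BLOCK_PRICE
    tail = max(kwh - FIRST_BLOCK_KWH, 0) * TAIL_PRICE
    return FIXED_CHARGE + first + tail


before, after = monthly_bill(1000), monthly_bill(800)  # a 20 percent cut in usage
print(f"Bill falls from ${before:.2f} to ${after:.2f}: "
      f"{(before - after) / before:.1%} saving")
```

The kilowatt-hours saved come off the cheapest block, and the fixed charge does not shrink at all. As a result, a 20 percent cut in usage here trims the bill from $44 to $38, a saving of under 14 percent. That is inside the 10 to 15 percent range the text cites.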

A second trap is the tax code. Virtually all industries that supply energy in the United States benefit from tax credits and other advantages accruing to businesses that are “capital intensive.” Utilities also enjoy special tax provisions obtained in recent years by pleading hardship from rising costs for construction and fuel. Accordingly, new investments by electric and gas companies actually reduce their taxes. According to the Cornell University economist Duane Chapman,[6] deductions for interest expense, accelerated depreciation, and the investment tax credit are sufficient to offset entirely the nominal 48 percent tax rate on corporate income. By contrast, investments to improve energy productivity have far fewer advantages. Thus productivity-raising ventures are frequently less profitable, even though they are superior to supply investments in the energy obtained per dollar invested. This causes capital to flow toward expanding energy supply.

Finally, there are jurisdictional traps. Few houses are constructed to be efficient in their use of energy, since most builders seek to minimize equipment costs to keep the original selling prices down. Similarly, much electricity usage in commercial and apartment buildings is master-metered, rather than individually billed, eliminating any economic incentive to conserve. In industry, many potential energy-saving measures go begging, despite prospective rates of return as high as 50 percent; management instead assigns a higher importance to conventional investments needed to remain competitive, such as expanding plants and developing products. Yet regulated utilities can attract capital, at lower rates of return, to expand their generating capacity.

The result of these and many other barriers to productivity improvements is that individuals and businesses now undertake only a small fraction of the available measures that could improve energy productivity at less than the cost to the economy of supplying the equivalent energy.

Most of these barriers have staunch defenders: large power users who benefit from quantity discounts; energy corporations whose profits depend on favorable tax treatment; home builders and appliance manufacturers who contend that the cost of energy-saving measures will reduce sales. However, the staying power of these institutional arrangements appears to result less from the political strength of their beneficiaries than from the lack of a constituency for reform.

The environmental movement has concentrated single-mindedly on the dangers of energy projects to health and to nature. With only a few exceptions, such as the Environmental Defense Fund, it has done little to promote new policies that would shift economic incentives from increasing the supply of energy to improving its use. Only a handful of consumer organizations have attacked corporate subsidies which help to maintain low energy prices at the expense of higher taxes (for example, the subsidies to the electric utilities). Moreover, saving energy generally has a gloomy sound, with its implication that people must “do without.” One result is that organized labor does not perceive productivity improvements as a workable, job-creating alternative to projects to produce energy. Finally, government policymakers, lulled by the notion of a cheap nuclear future, underestimate the costs of new energy supplies and give little encouragement to programs to improve energy productivity.

Nevertheless, the arguments for basing energy policy on improved productivity advanced by Vince Taylor, and complementary ones by Amory Lovins of Friends of the Earth, have begun to be received sympathetically by energy specialists. Now the near disaster at Harrisburg provides an opportunity for new departures in policy, which would have been required for oil conservation in any event, in view of the limits on nuclear power I have described above.

Because of short-term considerations of cost and energy, the seventy nuclear plants now operating in the United States will probably continue to run, and the other fifty where major construction has started may be completed. But if improvements in energy productivity were made on a wide scale, this could ensure that no additional plants need be built. A similar outcome is possible in Western Europe and Japan, where costs have also spiraled as the result of the necessary but perhaps futile efforts to resolve rising doubts over nuclear power’s safety.

Nuclear power would then be left providing just over 5 percent of the industrial countries’ energy supply, assuming overall energy requirements remain at today’s levels. It would then be realistic to ask whether the dangers in plant safety, in accumulation of radioactive wastes, and in potential contribution to nuclear weapons proliferation do not exceed the minor benefits of continuing to run existing plants. To judge by the response to the Harrisburg accident, that debate has already begun.

This Issue

May 17, 1979

[1] Vince Taylor, *Energy: The Easy Path*, available from Pan Heuristics, Suite 1221, 1801 Avenue of the Stars, Los Angeles, CA 90067, January 1979.

[2] The Edison Electric Institute claims that power from nuclear reactors costs 1.5 cents per kilowatt-hour, as compared to 2 cents for power from coal and 3.9 cents for power from oil. But this claim is based on a sample dominated by nuclear plants completed before expensive safety standards were imposed and including coal and oil plants operating at low efficiencies because of the surplus of generating capacity. More important, this comparison pertains only to the 10 percent of oil which is used to generate electricity.

[3] Carroll L. Wilson, ed., *Energy: Global Prospects 1985–2000*, Report of the Workshop on Alternative Energy Strategies (McGraw-Hill, 1977).

[4] Thomas F. Widmer and Elias P. Gyftopoulos, “Energy Conservation and a Healthy Economy,” in *Technology Review*, Volume 79, Number 7, June 1977.

[5] Gerald Leach et al., *A Low Energy Strategy for the United Kingdom* (International Institute for Environment and Development, London, January 1979).

[6] Duane Chapman, *Taxation, Energy Use, and Employment* (Department of Agricultural Economics, Cornell University, Ithaca, New York, March 1978).