Several months ago, I led a clinical conference for interns and residents at the Massachusetts General Hospital. It had been thirty-three years since I trained there, and beyond discussing the topic of the gathering, I was keen to learn from these young doctors how they viewed recent changes in the culture of medicine.

The subject of the conference centered on how physicians arrive at a diagnosis and recommend a treatment—questions that are central in the two books under review. We began by discussing not clinical successes but failures. Some 10 to 15 percent of all patients either suffer from a delay in making the correct diagnosis or die before the correct diagnosis is made. Misdiagnosis, it turns out, is rarely related to the doctor being misled by technical errors, like a laboratory worker mixing up a blood sample and reporting a result on the wrong patient; rather, the failure to diagnose reflects the unsuspected errors made while trying to understand a patient’s condition.1

These cognitive pitfalls are part of human thinking, biases that cloud logic when we make judgments under conditions of uncertainty and time pressure. Indeed, the cognitive errors common in clinical medicine were initially elucidated by the psychologists Amos Tversky and Daniel Kahneman in their seminal work in the early 1970s.2 At the conference, I reviewed with the residents three principal biases these researchers studied: “anchoring,” where a person overvalues the first data he encounters and so is skewed in his thinking; “availability,” where recent or dramatic cases quickly come to mind and color judgment about the situation at hand; and “attribution,” where stereotypes can prejudice thinking so conclusions arise not from data but from such preconceptions.

A physician works with imperfect information. Patients typically describe their problem in a fragmented and tangential fashion—they tell the doctor when they began to feel different, what parts of the body bother them, what factors in the environment like food or a pet may have exacerbated their symptoms, and what they did to try to relieve their condition. There are usually gaps in the patient’s story: parts of his narrative are only hazily recalled and facts are distorted by his memory, making the data he offers incomplete and uncertain. The physician’s physical examination, where he should use all of his senses to try to ascertain changes in bodily functions—assessing the tension of the skin, the breadth of the liver, the pace of the heart—yields soundings that are, at best, approximations. More information may come from blood tests, X-rays, and scans. But no test result, from even the most sophisticated technology, is consistently reliable in revealing the hidden pathology.

So a doctor learns to question the quality and significance of the data he extracts from the medical history of the patient, physical examination, and diagnostic testing. Rigorous questioning requires considerable effort to stop and look back with a discerning eye and try to rearrange the pieces of the puzzle to form a different picture that provides the diagnosis. The most instructive moments are when you are proven wrong, and realize that you believed you knew more than you did, wrongly dismissing a key bit of information that contradicted your presumed diagnosis as an “outlier,” or failing to consider in your parsimonious logic that the patient had more than one malady causing his symptoms.

At the clinical conference, I recounted this reality to the interns and residents, and emphasized that like every doctor I knew, I had made serious errors in diagnosis, errors that were detrimental to patients. And I worried aloud about how changes in the delivery of health care, particularly the increasing time pressure to see more and more patients in fewer and fewer minutes in the name of “efficiency,” could worsen the pitfalls physicians face in their thinking, because clear thinking cannot be done in haste.

When the discussion moved to formulating ways of improving diagnosis, I raised the issue of the growing reliance on “clinical guidelines,” the algorithms crafted by expert committees that are intended to implement uniform “best practices.” Like all doctors educated over the past decade, the residents had been immersed in what is called “evidence-based medicine.” This is a movement to put medical care on a sound scientific footing, relying on data from clinical trials of treatment rather than on anecdotal results. To be sure, this shift to science is welcome, but the “evidence” from clinical trials is often limited in its application to a particular patient’s case. Subjects in clinical trials are typically “cherry-picked,” meaning that they are included only if they have a single disease and excluded if they have multiple conditions, or are receiving other medications or treatments that might mar the purity of the population under study. People who are too young or too old to fit the rigid criteria set by the researchers are also excluded.

Yet these excluded patients are the very people who heavily populate doctors’ clinics and seek their care. It is these many patients that a physician must think about deeply, taking on the difficult task of devising an empirical approach, melding statistics from clinical trials with personal experience and even anecdotal results. Yes, prudent physicians consult scientific data, the so-called “best evidence,” but they recognize that such evidence is an approximation of reality. And importantly, clinical guidelines do not incorporate the sick person’s preferences and values into the doctor’s choice of treatment; guidelines are generic, not customized to the individual’s sensibilities.3

At the conference, an animated discussion followed, and I heard how changes in the culture of medicine were altering the ways that the young doctors interacted with their patients. One woman said that she spent less and less time conversing with her patients. Instead, she felt glued to a computer screen, checking off boxes on an electronic medical record to document a voluminous set of required “quality of care” measures, many of them not clearly relevant to her patient’s problems. Another resident talked about how so-called “work rounds” were frequently conducted in a closed conference room with a computer rather than at the patient’s bedside.

During my training three decades ago, the team of interns and residents would move from bedside to bedside, engaging the sick person in discussion, looking for new symptoms; the medical chart was available to review the progress to date and new tests were often ordered in search of the diagnosis. By contrast, each patient now had his or her relevant data on the screen, and the team sat around clicking the computer keyboard. It took concerted effort for the group to leave the conference room and visit the actual people in need.

Still another trainee talked about the work schedule. Because chronic sleep deprivation can lead to medical mistakes, strict regulations have been implemented across the country to limit the amount of time any one resident can attend to patient care. While these rules were well intentioned and clearly addressed an important problem with patient safety, their unintended consequence was that care became more fragmented; patients now were “handed off” in shifts, and with such handoffs the trainee often failed to learn how an illness evolved over time, and important information was sometimes lost in the transition.

After the clinical conference, I chatted with my hosts, the two chief residents. I saw what had not changed over the years: the eagerness and excitement about human biology; the mix of tension and pride in taking responsibility for another person’s life; the desire, while acknowledging its impossibility, for perfection in practice and performance; and a growing awareness that deciding with the patient on the “best treatment” is a complex process that cannot simply be reduced to formulas using probabilities of risks and benefits.

The two chief residents showed a level of insight about the limits of medical science that I had not had at their age. In the 1970s, clinical medicine was encountering molecular biology for the first time, and I was dazzled by the cascade of findings about genes and proteins. Recombinant DNA technology with gene cloning promised to revolutionize the profession, and indeed it has. Now, diseases are unraveled based on mutations in the genome, and drugs are more rationally designed to target such underlying molecular aberrations.

As an intern and resident, my focus was firmly fixed on this marvelous and awesome science.4 Although I prided myself on probing deeply into the patient’s history in search of clues that would point to a diagnosis, I too often missed the deeper narrative: the tale of the meaning of the illness to the person. My fellow trainees and I spent scant time pondering how the sufferer experienced his plight, or what values and preferences were relevant to the clinical choices he faced. The two chief residents seemed deeply engaged by their patients’ lives and struggles, yet deeply frustrated, because that dimension of medicine, what is termed “medical humanism,” was, despite much lip service, given short shrift as a consequence of the enormous restructuring of medical care.

What I heard from the residents at the Massachusetts General Hospital was not confined to that noon meeting or to young physicians. A close friend in New York City told me how his wife with metastatic ovarian cancer had spent six days in the hospital without a single doctor engaging her in a genuine conversation. Yes, she had undergone blood tests and been sent for CT scans. But no one attending to her had sat down in a chair at her bedside and conversed at eye level, asking questions and probing her thoughts and feelings about what was being done to combat her cancer and how much more treatment she was willing to undergo. The doctors had hardly touched her, only briefly placing their hands on her swollen abdomen to gauge its tension. The interactions with the clinical staff were remote, impersonal, and essentially mediated through machines.

Nor were these perceptions of the change in the nature of care restricted to reports from patients and their families. They were also made by senior physicians. My wife and frequent co-writer, Dr. Pamela Hartzband, an endocrinologist, reported conversations among the clinical faculty about how a price tag was being fixed to every hour of the doctor’s day. There were monetary metrics to be met, so-called “relative value units,” which assessed your productivity as a physician strictly by measuring how much money you, as a salaried staff member, generated for the larger department. There is a compassionate, altruistic core of medical practice: sitting with a grieving family after a loved one is lost; lending your experience to a younger colleague struggling to manage a complex case; telephoning a patient and listening to how she is faring after surgery and chemotherapy for her breast cancer; extending yourself beyond the usual working day to help others because that is much of what it means to be a doctor. But not one minute of such time counts toward reimbursement on a bean counter’s balance sheet.5

Still, I wondered whether my diagnosis of the ills of modern medicine was accurate. Perhaps I was weighed down by nostalgia, my perspective a product of selective hindsight. Certainly, coldly mercenary physicians were familiar in classical narratives of illness. Tolstoy satirized “celebrity doctors” who were well paid for offering Ivan Ilych ridiculous remedies for his undiagnosed malady while ignoring his suffering. Turgenev in “The Country Doctor” depicted an unctuous provincial physician whose degree of engagement with the sick was tied to the size of their pocketbook. Molière repeatedly lampooned the folly of pompous and greedy physicians.

Such doctors have been members of the profession since its founding. And it would be naive to believe that money is not one part of the exchange between physician and patient. But only recently has medical care been recast in our society as if it took place in a factory, with doctors and nurses as shift workers, laboring on an assembly line of the ill. The new people in charge, many with degrees in management economics, believe that care should be configured as a commodity, its contents reduced to equations, all of its dimensions measured and priced, all patient choices formulated as retail purchases. The experience of illness is being stripped of its symbolism and meaning, emptied of feeling and conflict. The new era rightly embraces science but wrongly relinquishes the soul.

In his book Carrying the Heart, Dr. Frank González-Crussi, a professor of pathology at Northwestern University, has made a sharp departure from medicine as a cold world of clinical facts and figures. Rather, he asks us to return to a view of the body not as a machine but as a wondrous work of creation, where both the corporeal and the spiritual coexist. His aim, he writes, is

to increase the public’s awareness of the body’s insides. By this, I do not mean the objective facts of anatomy, for most educated people today have a general, if limited, understanding of the body’s parts and functions. I mean the history, the symbolism, the reflections, the many ideas, serious or fanciful, and even the romance and lore with which the inner organs have been surrounded historically.

This précis captures the beauty and charm of his book. I learned from González-Crussi that for centuries the stomach was considered the most noble of organs, directing all important physiological functions. The ancients, González-Crussi tells us, called the stomach “the king of viscera,” “the senate or the patrician class; the bodily parts were the rebellious plebeians.” Shakespeare repeats this fable in Coriolanus, where the stomach lectures the rest of the body’s organs about the importance of its function.

Our gastric elements were seen as having a leading part in joy and adversity, and were the seat of the soul—predating the belief that the spirit was housed in the heart or the brain. This regal position was ultimately relinquished through the observations of Dr. William Beaumont in 1822. Beaumont studied a young French-Canadian named Alexis St. Martin, who suffered an accidental musket shot to the belly. He was left with a perforation some two and a half inches in circumference, through which the doctor could look into the living stomach and perform experiments on its workings. Via this “stomach window,” the physiology of the organ was gradually deciphered, and its fabled status faded.

No part of our anatomy, González-Crussi recounts, has failed to fascinate poets, priests, and philosophers—including the working of the colon. In the chapter on feces, we learn that the Chinese had a divinity of the toilet. “This was Zi-gu, ‘the violet lady.’ She was not entirely fictional,” González-Crussi writes,

but took her origin from a flesh-and-blood woman who lived about AD 689. To her misfortune, she was made the concubine of a high government official, Li-Jing. The man’s legitimate wife, overcome by jealousy, killed Zi-gu in cold blood while she was visiting the toilet. Since then, her ghost has haunted the latrines, “a most inconvenient circumstance for anyone in a hurry.”

The colon and its product also were part of the theology of the Aztecs. They believed that excrement

was capable of bringing ills and misfortune, and associated with sin, but also powerful and beneficent, able to ward off disease, to subdue the enemy, and to transform sexual transgressions into something useful and healthy.

Gold was termed “the sun’s excrement” and the sun god Tonatiuh deposited his own feces in the form of this precious element in the earth while he passed through to the underworld.

González-Crussi also reminds us that there was an inordinate fixation on one’s bowels during the Victorian age, which honored values of order, temperance, respect for tradition, and sexual repression. Personal self-control, the mark of British culture, was at odds with that urgent process of expelling air and waste:

Perhaps no greater ambivalence has ever existed toward the bowel than in Victorian England, where this organ was viewed with simultaneous skittish embarrassment and fascination, shame and fixed interest, shy modesty and hypnotic engrossment.

A shocking consequence of this cultural tension is that one of the most proficient surgeons of the era, William Arbuthnot Lane, who devised procedures to successfully set compound fractures, concluded that without a colon, man would free himself from inner toxins and extend his health and longevity. A natural physiological function became a pseudodisease. Initially, Lane devised operations to bypass the large bowel, and he then moved on to perform total colectomies. Patients flocked to him from all over Great Britain and abroad, certain that their lives would be more salubrious and fulfilling without their large intestine.

González-Crussi treats with similar scholarship and playful insight the uterus, the penis, the lungs, and the heart. He melds history with literature, religion with science, high humor with serious concerns. The sum of his narrative shows that medicine does not exist as some absolute ideal, but is very much a product of the prevailing culture, affected by the prejudices and passions of the time. This truth is far from the sterile conception of care as a commodity and the body as a jumble of molecules, disconnected from the experience of illness shared between patient and physician. But our culture, with its worship of technology and its deference to the technocrat, risks imposing an approach to medical care that ignores the deeply felt symbolism of our body parts and our desperate search for meaning when we suffer from illness. Patients—their problems, perceptions, and preferences—cannot be reduced to lifeless numbers. Ironically, the emerging quantitative view of medicine is as misleading as are the past conceptions González-Crussi presents.

Jonathan Edlow is concerned with the doctor not as poet or philosopher or priest but as detective. An emergency room physician at the Beth Israel Deaconess Medical Center, a Harvard teaching hospital in Boston where I also work, Edlow recounts how as a teenager with no interest in a medical career, he received a copy of Berton Roueché’s The Medical Detectives. This anthology of articles from The New Yorker proved for him to be a companion to the Complete Stories of Sherlock Holmes. Arthur Conan Doyle, of course, was trained as a physician, and the skills of Holmes are precisely those a thinking physician needs. Both detective and doctor not only assemble evidence but must judiciously weigh what they have found, seeking the underlying value of each clue. The successful doctor-detective must be alert to biases that can lead him astray. This was the message of the clinical conference those months ago; and in Edlow’s tales of difficult diagnoses, we can observe detours that are due to “anchoring,” “availability,” and “attribution.”

Notably, the collection of cases in Edlow’s book The Deadly Dinner Party takes us out of the clinic and into the field, as epidemiologists and infectious disease experts from the Centers for Disease Control and academic medical centers comb for clues in cooking pots that served a communal dinner and in the caverns of office buildings where workers fell ill. In his chapter “An Airtight Case,” Edlow implicitly shows why so many of the standard formulas that policymakers promulgate fall short when answers are not obvious. He describes how an office worker (whom he calls Philip Bradford) thought he had developed “the flu—the usual cough, fevers, chest pain, just feeling lousy….” What appeared to be the symptoms of a typical viral illness did not spontaneously disappear. A chest X-ray showed pneumonia, but treatment with antibiotics proved ineffective. The presumptive diagnosis changed from infection to cancer, and Bradford was told by his doctor that he needed his chest opened to resect a piece of lung and identify the tumor.

Fortunately, the patient sought a second opinion, from a senior thoracic surgeon, and the diagnosis was again thrown into doubt—the specialist believed that the problem was neither infection nor an abnormal growth. Over the ensuing months, the mysterious pneumonia spontaneously cleared up, but after a year Bradford again started coughing and running a fever. “His chest X-ray blossomed with ominous nodules,” Edlow writes, “then, as with the previous episode, after a few weeks his symptoms mysteriously vanished.”

It was the good fortune of this ill office worker with the mysterious lung problem to see Dr. Robert H. Rubin, an infectious disease specialist at the Massachusetts General Hospital, at the time the director of the hospital’s clinical investigation program. As Rubin recounts his deciphering of the ultimate diagnosis, what is striking is his “low-tech” thinking: “I was immediately impressed by three aspects of the case,” Rubin recalled.

First was that Bradford appeared healthy and athletic, not the picture of someone with a chronic disease. Second, between episodes, he continued to jog over five miles with no apparent problem. And third, his physical examination was normal.

With such comments, we are a universe away from sophisticated blood tests and CT scans, and deeply rooted in the world of the physician’s five senses. The most seasoned clinicians teach that the patient tells you his diagnosis if only you know how to listen. The clinical history, beyond all other aspects of information gathering, holds the most clues. And it is this part of medicine (the patient’s narrative, the onset and tempo of the illness, the factors that exacerbated the symptoms and those that ameliorated them, the foods the patient ate, the clothing he wore, the people he worked with, the trips he took, the myriad of other events that occurred before, during, and after the malady) that is as vital as any DNA analysis or MRI investigation.

Rubin concentrated that kind of questioning and listening on Bradford. He did not quickly dispatch him for more tests, but instead sharply shifted his focus to investigate clues in Bradford’s environment that could reveal what was causing inflammation in his lungs. Edlow goes on to write in clear and fluid prose about how Rubin systematically pursued what could be the agent provocateur in the case. The lengths to which Rubin went are extraordinary, his skill in eliciting and interpreting the patient’s narrative exemplary, and certainly not part of the rushed practice of today’s clinic. I won’t spoil the end of the story; what is important is that the solution came about only by dogged thinking that required the kind of time and inquiry that is absent in much of modern medical care.

The other detective stories in Edlow’s compilation transmit the same message: we most need a discerning doctor when a diagnosis is not obvious, when the clues are confusing, when initial tests are inconclusive. No simple technology can serve as a surrogate for the probing human mind. Edlow’s book is a welcome complement to González-Crussi’s. Both show us that medicine is truly an art and a science that requires doctors both to decipher the mystery and to illuminate the meaning of the body in health and disease.

This Issue

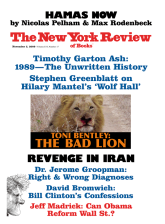

November 5, 2009

1. There has been a national focus on medical mistakes since the release of a report from the Institute of Medicine of the National Academy of Sciences, “To Err Is Human: Building a Safer Health System” (National Academies Press, 1999). Important measures have been implemented to improve patient safety in hospitals and clinics. But this report did not address the issue of misdiagnosis. See also Jerome Groopman, How Doctors Think (Houghton Mifflin, 2007); Pat Croskerry, “The Cognitive Imperative: Thinking About How We Think,” Academic Emergency Medicine, Vol. 7, No. 11 (2000); Donald A. Redelmeier et al., “Problems for Clinical Judgement: Introducing Cognitive Psychology as One More Basic Science,” Canadian Medical Association Journal, Vol. 164, No. 3 (2001).

2. Amos Tversky and Daniel Kahneman, “Judgment Under Uncertainty: Heuristics and Biases: Biases in Judgments Reveal Some Heuristics of Thinking Under Uncertainty,” Science, Vol. 185, No. 4157 (1974).

3. In addition, there is serious concern about the influence of the pharmaceutical and device industry in the formulation of clinical guidelines. There is no one process to craft such guidelines, nor is there agreement about limits on conflict of interest and types of funding to support the expert committees reviewing clinical trials and selecting what constitutes “best evidence.” The Institute of Medicine recently released a report on this issue that recommends strict measures to avoid financial conflicts in crafting clinical guidelines: “Conflicts of Interest in Medical Research, Education, and Practice” (National Academies Press, 2009). See also David Aron and Leonard Pogach, “Transparency Standards for Diabetes Performance Measures,” JAMA, Vol. 301, No. 2 (2009); and Barry Meier, “Diabetes Case Shows Pitfalls of Treatment Rules,” The New York Times, August 17, 2009.

4. Dr. Stanley Joel Reiser, in his recent book Technological Medicine: The Changing World of Doctors and Patients (Cambridge University Press, 2009), documents how the introduction of each new technology in the clinic, beginning with the stethoscope and extending to our era with MRI scans and electronic medical records, is initially enthusiastically greeted by both physicians and the public, but can impair the doctor in his relationship with the patient by forming a barrier to direct communication.

5. Pamela Hartzband and Jerome Groopman, “Money and the Changing Culture of Medicine,” The New England Journal of Medicine, Vol. 360, No. 2 (2009).