Andrew Innerarity/Reuters

The LS3, a four-legged rough-terrain robot designed by Boston Dynamics with funding from DARPA and the US Marine Corps to accompany soldiers anywhere they go on foot, and to help respond to natural and man-made disasters, at the DARPA Robotics Challenge Trials, Homestead-Miami Speedway, Florida, December 2013. That month, Boston Dynamics was acquired by Google, which says it will honor existing contracts with DARPA but is rejecting other DARPA funding.

“The great advances of civilization,” wrote Milton Friedman in Capitalism and Freedom, his influential best seller published in 1962, “whether in architecture or painting, in science or literature, in industry or agriculture, have never come from centralized government.” He did not say what he made of the state-sponsored art of Athens’s Periclean Age or of the Medici family, who, first as Europe’s dominant bankers and then as Florentine rulers, commissioned and financed so much Renaissance art. Or the Spanish court that gave us Velázquez. Or the many public universities that produced great scientists in our times. Or, even just before Friedman was writing, what could he have made of the Manhattan Project of the US government, which produced the atomic bomb? Or the National Institutes of Health, whose government-supported grants led to many of the most important pharmaceutical breakthroughs?

We could perhaps forgive Friedman’s ill-informed remarks as a burst of ideological enthusiasm if so many economists and business executives didn’t accept this myth as largely true. We hear time and again from those who should know better that government is a hindrance to the innovation that produces economic growth. Above all, the government should not try to pick “winners” by investing in what may be the next great companies. Many orthodox economists insist that the government should just get out of the way.

Lawrence Summers said something of the sort in a 2001 interview, shortly after the end of his tenure as Bill Clinton’s treasury secretary:

There is something about this epoch in history that really puts a premium on incentives, on decentralization, on allowing small economic energy to bubble up rather than a more top-down, more directed approach.

More recently, the respected Northwestern economist Robert Gordon reiterated the conventional view in a talk at the New School, saying that he was “extremely skeptical of government” as a source of innovation. “This is the role of individual entrepreneurs. Government had nothing to do with Bill Gates, Steve Jobs, Zuckerberg.”

Fortunately, a new book, The Entrepreneurial State, by the Sussex University economist Mariana Mazzucato, forcefully documents just how wrong these assertions are. It is one of the most incisive economic books in years. Mazzucato’s research goes well beyond the oft-told story about how the Internet was originally developed at the US Department of Defense. For example, she shows in detail that, while Steve Jobs brilliantly imagined and designed attractive new commercial products, almost all the scientific research on which the iPod, iPhone, and iPad were based was done by government-backed scientists and engineers in Europe and America. The touch-screen technology, specifically, now so common to Apple products, was based on research done at government-funded labs in Europe and the US in the 1960s and 1970s.

Similarly, Gordon called the National Institutes of Health a useful government “backstop” to the apparently far more important work done by pharmaceutical companies. But Mazzucato cites research to show that the NIH was responsible for some 75 percent of the major original breakthroughs known as new molecular entities between 1993 and 2004.

Further, Marcia Angell, former editor of The New England Journal of Medicine, found that new molecular entities that were given priority as possibly leading to significant advances in medical treatment were often if not mostly created by government. As Angell notes in her book The Truth About the Drug Companies (2004), only three of the seven high-priority drugs in 2002 came from pharmaceutical companies: the drug Zelnorm was developed by Novartis to treat irritable bowel syndrome, Gilead Sciences created Hepsera to treat hepatitis B, and Eloxatin was created by Sanofi-Synthélabo to treat colon cancer. No one can doubt the benefits of these drugs, or the expense incurred to develop them, but this is a far cry from the common claim, such as Gordon’s, that it is the private sector that does almost all the important innovation.

The rise of Silicon Valley, the high-technology center of the US based in and around Palo Alto, California, is supposedly the quintessential example of how entrepreneurial ideas succeeded without government direction. As Summers put it, new economic ideas were “born of the lessons of the experience of the success of decentralization in a place like Silicon Valley.” In fact, military contracts for research gave initial rise to the Silicon Valley firms, and national defense policy strongly influenced their development. Two researchers cited by Mazzucato found that in 2006, the last year sampled, only twenty-seven of the hundred top inventions annually listed by R&D Magazine in the 2000s were created by a single firm as opposed to government alone or a collaboration with government-funded entities. Among those recently developed by government labs were a computer program to speed up data-mining significantly and Babel, a program that translates one computer-programming language into another.

For all the acclaim now given to venture capital, Mazzucato says, private firms often invest after innovations have already come a long way under government’s much more daring basic research and patient investment of capital. The obvious case is the development of the technology for the Internet, but the process is much the same in the pharmaceutical industry.

Mazzucato observes that less and less basic research is being done by companies today. Rather, they focus on the commercial development of the research already done by the government. One shouldn’t underestimate the degree of imagination and funding necessary to transform a major scientific idea into a product that can be used by millions of consumers. The money paid by investors for some young unproven companies can be astounding, even when they are only beginning to make profits.

Facebook had hardly turned a profit when it went public in 2012 at a share price that made it worth more than $100 billion. Earlier this year, Facebook in turn paid $19 billion for WhatsApp, a messaging service that reaches 450 million people and has only fifty-five employees. The possibility remains that competition could wipe out WhatsApp’s advantage, just as the once-popular BlackBerry mobile communication service was made to seem archaic by Apple’s iPhone. It’s not yet even clear whether Facebook itself can maintain its newfound profitability. We can only imagine the possible benefits if such money were spent earlier in the basic research cycle.

Both government research and entrepreneurial capital are necessary conditions for the advance of commercial innovation. Neither is sufficient. But the consensus among many economists and politicians doesn’t seem to acknowledge an equal role for government. Resistance to acknowledging government’s fundamental contribution to American scientific and technical innovation became especially vigorous when the federal government’s solar energy project, Solyndra, to which it had lent more than $500 million, went bankrupt. The investment was part of President Obama’s 2009 stimulus package, which included a substantial program of loans for clean energy, run by a successful former hedge fund and venture capital manager. But the solar energy company was undermined when the high price of silicon, on which an alternative technology to Solyndra’s was based, fell sharply, enabling competition, especially from China’s solar companies, to underprice the American start-up.

Solyndra’s 2011 bankruptcy led to a Republican congressional investigation, and a bill to end the loan program altogether. Although venture capital funds, such as Argonaut Ventures, controlled by Obama fund-raiser George Kaiser, were among the major investors in Solyndra, critics saw the failure as proof that government couldn’t and shouldn’t invest in such new ventures at all. “Governments have always been lousy at picking winners, and they are likely to become more so, as legions of entrepreneurs and tinkerers swap designs online,” wrote The Economist in 2012. But including Solyndra, only roughly 2 percent of the projects partly financed by the federal government have gone bankrupt.

In Mazzucato’s account of the enormous success of federal scientific and technical research as the foundation of the most revolutionary of today’s technologies, the most telling example is how dependent Steve Jobs’s Apple was on government-funded breakthroughs. Apple’s earliest innovations in computers were themselves dependent on government research. But by the 1990s, sales of its traditional laptops were flagging. The launch of the iPod in 2001, which displaced the once-popular but more limited Sony Walkman, and in 2007 of the touch-screen systems of the new iPhone and iPad, turned the company into the electronic powerhouse of our time. From that point, its global sales almost quintupled and its stock price rose from roughly $100 to more than $700 a share at its high.

These later breakthroughs were almost completely dependent on government-sponsored research. “While the products owe their beautiful design and slick integration to the genius of Jobs and his large team,” writes Mazzucato,

nearly every state-of-the-art technology found in the iPod, iPhone and iPad is an often overlooked and ignored achievement of the research efforts and funding support of the government and military.

A major government-funded discovery known as giant magnetoresistance, which won its two European inventors a Nobel Prize in physics, is a telling example of such support. The process enlarges the storage capacity of computers and more recent electronic devices. In a speech at the Nobel ceremony, a member of the Royal Swedish Academy of Sciences explained that this breakthrough made the iPod possible. Other major developments by Apple also had their “roots” in federal research, among them, Mazzucato writes, the global positioning system of the iPhone and Siri, its voice-activated personal assistant.

Apple is only one of many such stories. In the early 1980s, the federal government formed the often-forgotten Sematech, the Semiconductor Manufacturing Technology consortium, a research partnership of US semiconductor companies designed to combat Japan’s growing lead in chip technologies. The US provided $100 million a year to encourage private companies to join the effort, including the innovative giant Intel, whose pioneering work on microprocessors in the 1970s had been central to the electronics explosion.

Virtually all experts acknowledged that Sematech reestablished US competitiveness in microprocessors and memory chips, leading to a sharp reduction in costs and radical miniaturization. The tiny integrated circuits with huge memories that resulted are the core of most electronic products today, long exploited by major companies like Microsoft and Apple. Their development was critically aided by military purchases in their early stages, when commercial possibilities were still in their infancy.

Mazzucato claims not that business entrepreneurs and venture capitalists did not make crucial contributions, but that they were, on balance, more averse to risks than government researchers. One successful venture capitalist, William Janeway, fully acknowledges the fundamental contributions of government research in his book, Doing Capitalism in the Innovation Economy. He is concerned that the antigovernment attitudes of recent decades may prove dangerous. “The very success in ‘liberating’ the market economy from the encroachment of the state,” he writes, which defines today’s conventional economic wisdom, as the quote by Summers suggests, “has potentially dire consequences for the Innovation Economy.”

Janeway is a well-informed economist as well as a successful venture entrepreneur, and he argues for the importance of government in the nation’s economic growth. With the development of huge, highly profitable corporations in steel, oil, aluminum, chemicals, and communications by the late 1800s, he notes, crucial research was increasingly dominated by the private sector. Janeway cites the business historian Alfred Chandler to show that this is not an example of the free market at work. Rather, the huge, unchallenged profits of large oligopolistic companies enabled them to make long-term investments in research. Chandler called it the “visible hand.” Still, while Bell Labs and Xerox PARC, as well as research labs at General Electric, DuPont, and Alcoa, among others, made important, even legendary discoveries, they were also partly financed by Washington.

A major shift occurred after World War II. Federally funded science research became critical to commercial success. It may well have been the Manhattan Project that encouraged serious federal spending on research. Much of it was done in, or for, the Defense Department, but not all. The National Science Foundation was created by Congress and the National Institutes of Health were expanded. During the same period, the major private leaders in research, such as Bell Labs and Xerox PARC, were becoming less rich, and started closing down or reducing their commitments to their research labs by the 1980s and 1990s.

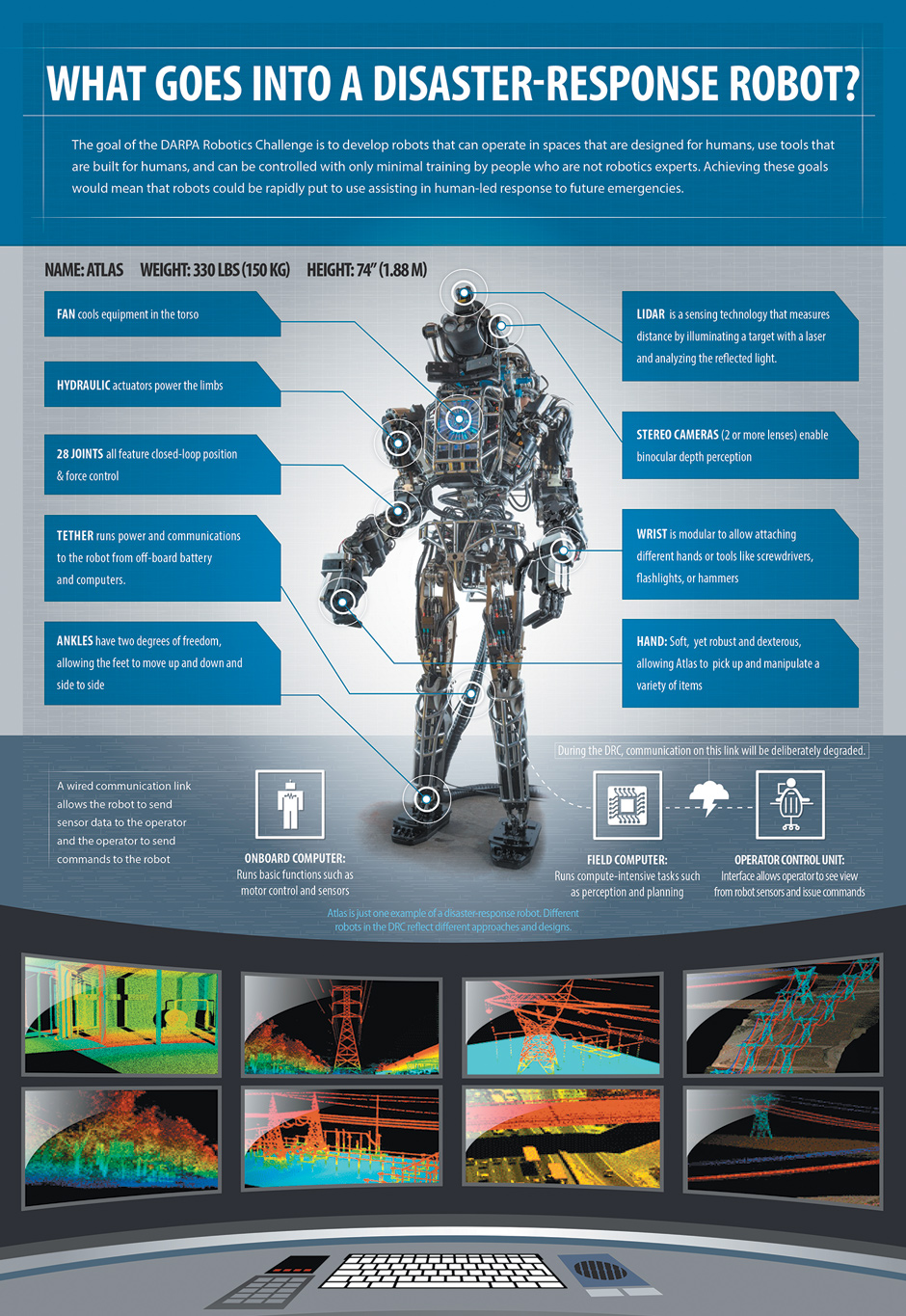

In response to the Soviet launch of Sputnik, the Defense Department created what became the progenitor of the Internet, the Defense Advanced Research Projects Agency (DARPA), in 1958. But, as Janeway points out, the DOD had already funded roughly twenty projects to help construct digital computers. “The IT sector, which scarcely existed in 1945,” write two economic historians cited by Janeway,

was a key focus of federal R&D and defense-related procurement spending for much of the postwar period. Moreover, the structure of these federal R&D and procurement programs exerted a powerful influence on the pace of development of the underlying technologies of defense and civilian applications.

Federal funding accounted for more than 50 percent of all US R&D from the early 1950s through 1978 and exceeded the total spent by all other OECD countries over this period. The conventional economic justification for government spending on basic research is that business won’t make sufficient investments because no single company will be able to benefit sufficiently from the potential financial returns.

Mazzucato argues that government research has been visionary. It not merely reduces risks in the market, but opens technology to entirely new ideas. She cites how government directed the development of major new technologies in completely new spheres like information technology, biotechnology, nuclear energy, and nanotechnology. She also argues that government funding even of later-stage research can be beneficial. Government subsidies in Denmark, Germany, and China have resulted in successful individual companies. The US has had a success with First Solar, a solar energy company whose existence depended on state support.

Venture capital, according to Mazzucato, cuts and runs too rapidly to be trusted and also encourages short-term successes that may not be sustainable, the better to entice financial market investors. It would have been helpful had she provided more real-world examples for this argument.

Like Mazzucato, Janeway wants government to invest more; but as a firm believer in venture capital he has a more conventional view of the process of innovation. “The state can play a decisively catalytic, and more than merely constructive role,” he writes. “But to explore the new space for innovative applications thereby created remains the realm of entrepreneurial finance, the world of bubbles and crashes.”

Citing the history of the railroad boom of the 1800s, the electrification boom of the 1920s, and the high-technology boom of the 1990s, he says these speculative bubbles are the necessary fuel of productive innovation because the uncertainty of a profitable future for these companies is just too high for many other investors. Facing enormous uncertainty, business is only willing to invest as speculative fever mounts, knowing it may be able to sell out to investors—who, as Janeway admits, are greater fools—even if the company is not ultimately successful.

In one case, Janeway and his partners made a killing with an initial cash investment of some $6 million in an Internet company called Covad. Soon enough, Bear Stearns, the large investment house later sold to JPMorgan Chase, invested $300 million in the company, which at that point had only $26,000 of revenues. Before the crash, Janeway and his investors had a 20 percent stake in a company that went public in 1999 and had a market value of $5 billion. Janeway and his partners earned a $1 billion return. The company soon went bankrupt.

Janeway’s main point is that while there will be a lot of waste in such speculative bubbles, some of these investments will become giant successes. An example of the buying frenzy’s longer-term benefits was a company Janeway and his partners invested in called Neustar, which provided the technology to allow customers to keep their telephone numbers when they switched phone companies. It had a workable business plan, got its financing, and ultimately survived the 2000–2001 crash, earning Janeway and partners some $1 billion on a $77 million investment. “Financial bubbles have been the vehicle for mobilizing capital at the scale required in the face,” he writes, of such “fundamental, intractable uncertainty.”

For Janeway, as for the admired Austrian economist Joseph Schumpeter, who eventually taught at Harvard, this is healthy capitalism at work. Well before World War II, Schumpeter called it “creative destruction,” a phrase soon to be famous and arguably all too common now. Still, Janeway gives government basic research much if not most of the credit. Most investment of private venture capital was directed at IT or biotech, and not at other technologies, precisely because government led the way, he says.

Janeway favors bubbles, just the good kind, like the high-technology boom of the 1990s. The mortgage boom of the 2000s was a “bad” bubble, which left mostly destruction in its wake. But Janeway tells us little about how to distinguish among bubbles when they are underway. For years, defenders of the mortgage boom argued that it was justified by the sharp rise in home ownership, for example. My own preference would be to minimize bubbles by means of restraints on debt and on banker compensation, because the incentives too easily make investors rich while resulting in far too few useful products with lasting value. Such bubbles also give rise to large-scale fraud, such as the accounting fictions at Enron, or the deceptive sales practices of mortgage brokers. In short, the pay-off for cheating becomes too large.

The nation may well be in a new high-technology bubble right now, as evidenced by the price paid for the WhatsApp messaging service. The large venture capital firm Sequoia Capital invested $60 million in WhatsApp over the past couple of years. Since Facebook’s offer, it now has a value of between $2 billion and $3 billion. But we won’t know whether this is one of Janeway’s “good” bubbles until it increases income or crashes. As it competes with other apps such as Skype and Google Hangouts, we don’t know whether WhatsApp will survive.

Mazzucato may underestimate the risks that venture capitalists take, even if she is largely right that it is the government that takes the biggest risks of all. Besides DARPA and the National Institutes of Health, Mazzucato cites three other major examples of government research programs that worked well and are generally neglected in public discussions. The Small Business Innovation Research program—started, it may surprise some readers, by Ronald Reagan—provided research funding to small independent companies, such as the computer security company Symantec and the telecommunications company Qualcomm. The success of this little-known agency, which distributes $2 billion in funding directly, is almost a secret. Yet a survey and analysis of forty-four recipients of funding by several scholars showed a significant positive return on the government’s investment. The Orphan Drug Act, also signed by Reagan, provides funding for drugs designed to treat rare diseases. Novartis drew on such funding in developing its leukemia drug Gleevec, which by 2010 had generated sales of $4.3 billion.

The National Nanotechnology Initiative may be most worth watching because it is the federal government’s attempt to find “the next new thing.” Many, including Mazzucato, think nanotechnology, which works with the tiniest bits of matter from single molecules to atoms themselves, will become the next general-purpose technology, spreading across medicine, electronics, and the creation of new materials. But commercial applications are still elusive. Mazzucato similarly thinks that green technology such as solar panels, if it is to succeed, requires committed, long-term investment by the government or extensions of government-directed investment, like state-funded banks.

A mutually accepted assumption of Mazzucato, Janeway, and also the critics of government interference in research is that scientific innovation will be a main source of rising productivity for the economy, and therefore of a rising standard of living. Such single-minded emphasis on something as amorphous as innovation can be misleading. Scholars are understandably influenced by the wonders of the IT revolution. But there are multiple sources of economic growth besides technical innovation. Military spending for the cold war created persistent Keynesian demand. An energy-dependent economy was aided by sharp drops in the price of oil as new sources were found in the Middle East and elsewhere, while cheaper American natural gas may well have similar effects in the future. The building of the national highway system led to the growth of the suburbs. And as the Nobel Prize–winning economist Edmund Phelps writes in his new book Mass Flourishing (2013), an entrepreneurial culture that begins at the grass-roots level is also probably crucial for the countless small improvements that go almost unnoticed but make possible innovation on the national level.

In the 1970s, when American companies were overrun by Japanese and European competition, it was not so much scientific—IT was in its infancy—but managerial innovation that led to foreign domination. Meanwhile, some technological wonders, like nuclear energy, did not have a deep effect on the US economy, despite advances in electricity-generating reactors and in medical procedures.

Moreover, during the last twenty years, productivity has grown while wages have largely stagnated. Basic economic theory suggests they should grow together. Are the new technologies failing to create jobs? Do they make it that much easier for jobs to be created offshore? Mazzucato acknowledges that the relationship between research, innovation, and economic growth is not predictable. But she and Janeway make the right assumption. Where would we be without it?

How do we fund what we need? The debate over the nation’s budget deficit aside, government’s contribution to innovation is not financially rewarded. The benefits to government, it has always been argued, would be increases in tax revenues as companies grow based on its research. The fact that Apple avoids almost all taxes by international avoidance strategies, as do many other high-technology companies, shows such hypotheses to be largely bogus.

Mazzucato proposes that when its research is used commercially, the government collect royalties to be put in a federal innovation fund. Companies could be required to pay back grants if their products succeed financially. “A more direct tool,” she says, would be state investment banks. Such state banks in Germany, Brazil, and China earn billions of dollars, which they can reinvest. Government could also take equity stakes in companies that use the technologies developed.

“Many of the problems being faced today by the Obama administration,” she writes, “are due to the fact that US taxpayers…do not realize that corporations are making money from innovation that has been supported by their taxes.” That they are not aware of the benefits to competition seems to be a triumph of free-market ideology over good sense. How many Americans are aware that Google’s basic algorithm was developed with a National Science Foundation grant?

Business failures do not provoke such generalized criticism. A few decades ago, the Detroit auto industry almost collapsed under poor management. US industry never exploited liquid crystal display technology for TV and computer screens, leaving the business to Japanese and other Asian manufacturers. Owing to government research, China leads the way in solar energy development.

Sitting on trillions of dollars of cash resulting from high profits these days, corporations buy back hundreds of billions of dollars of their own shares to boost stock prices, a systematic practice documented closely by the economist William Lazonick.* They often do so rather than invest in new ideas or research. The financial community fights financial reform with great ferocity, and banks are bigger than ever.

Both Mazzucato and Janeway fear that China’s large investments in basic research will produce innovation that leaves the US behind. Under the limits set by sequestration, government research programs are now being cut back to the lowest level as a percent of GDP in forty years.

As government research spending falls, one relatively new development, with potential benefits but also dangers, is a rapid rise in funding for research—mostly in the sciences and mostly related to health and environment issues—by the nation’s new high-technology and financial billionaires, including Bill Gates of Microsoft, Lawrence Ellison of Oracle, and Michael Bloomberg. Thus far, the donations do not nearly equal the amount spent by government agencies on research, but there have been notable successes, in particular treatment for cystic fibrosis. On the other hand, some scientists are critical of organizations in which research agendas are to a large extent influenced by the personal preferences of a fairly small number of philanthropists. Since contributions to such organizations are usually tax-deductible, the general public in effect subsidizes a major part of the research.

But Mazzucato’s criticism of US innovation strategies goes deeper than the lack of adequate funding. She makes one of the most convincing cases I have seen for the value and competence of government itself, and for its ability to do what the private sector simply cannot. It is not only, as economists argue, a matter of reducing the risk of research and innovation for private enterprise. She argues that government efforts are the source of new technological visions for the future, and—very persuasively—she cites the innovations of the past sixty years to make her case.

* William Lazonick, “The Financialization of the US Corporation: What Has Been Lost, and How It Can Be Regained,” Seattle University Law Review, Vol. 36, No. 2 (2013).