1.

The history of economic thought in the twentieth century is a bit like the history of Christianity in the sixteenth century. Until John Maynard Keynes published The General Theory of Employment, Interest, and Money in 1936, economics—at least in the English-speaking world—was completely dominated by free-market orthodoxy. Heresies would occasionally pop up, but they were always suppressed. Classical economics, wrote Keynes in 1936, “conquered England as completely as the Holy Inquisition conquered Spain.” And classical economics said that the answer to almost all problems was to let the forces of supply and demand do their job.

But classical economics offered neither explanations nor solutions for the Great Depression. By the middle of the 1930s, the challenges to orthodoxy could no longer be contained. Keynes played the role of Martin Luther, providing the intellectual rigor needed to make heresy respectable. Although Keynes was by no means a leftist—he came to save capitalism, not to bury it—his theory said that free markets could not be counted on to provide full employment, creating a new rationale for large-scale government intervention in the economy.

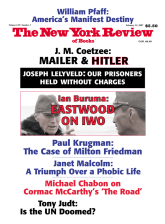

Keynesianism was a great reformation of economic thought. It was followed, inevitably, by a counter-reformation. A number of economists played important roles in the great revival of classical economics between 1950 and 2000, but none was as influential as Milton Friedman. If Keynes was Luther, Friedman was Ignatius of Loyola, founder of the Jesuits. And like the Jesuits, Friedman’s followers have acted as a sort of disciplined army of the faithful, spearheading a broad, but incomplete, rollback of Keynesian heresy. By the century’s end, classical economics had regained much, though by no means all, of its former dominion, and Friedman deserves much of the credit.

I don’t want to push the religious analogy too far. Economic theory at least aspires to be science, not theology; it is concerned with earth, not heaven. Keynesian theory initially prevailed because it did a far better job than classical orthodoxy of making sense of the world around us, and Friedman’s critique of Keynes became so influential largely because he correctly identified Keynesianism’s weak points. And just to be clear: although this essay argues that Friedman was wrong on some issues, and sometimes seemed less than honest with his readers, I regard him as a great economist and a great man.

2.

Milton Friedman played three roles in the intellectual life of the twentieth century. There was Friedman the economist’s economist, who wrote technical, more or less apolitical analyses of consumer behavior and inflation. There was Friedman the policy entrepreneur, who spent decades campaigning on behalf of the policy known as monetarism—finally seeing the Federal Reserve and the Bank of England adopt his doctrine at the end of the 1970s, only to abandon it as unworkable a few years later. Finally, there was Friedman the ideologue, the great popularizer of free-market doctrine.

Did the same man play all these roles? Yes and no. All three roles were informed by Friedman’s faith in the classical verities of free-market economics. Moreover, Friedman’s effectiveness as a popularizer and propagandist rested in part on his well-deserved reputation as a profound economic theorist. But there’s an important difference between the rigor of his work as a professional economist and the looser, sometimes questionable logic of his pronouncements as a public intellectual. While Friedman’s theoretical work is universally admired by professional economists, there’s much more ambivalence about his policy pronouncements and especially his popularizing. And it must be said that there were some serious questions about his intellectual honesty when he was speaking to the mass public.

But let’s hold off on the questionable material for a moment, and talk about Friedman the economic theorist. For most of the past two centuries, economic thinking has been dominated by the concept of Homo economicus. The hypothetical Economic Man knows what he wants; his preferences can be expressed mathematically in terms of a “utility function.” And his choices are driven by rational calculations about how to maximize that function: whether consumers are deciding between corn flakes or shredded wheat, or investors are deciding between stocks and bonds, those decisions are assumed to be based on comparisons of the “marginal utility,” or the added benefit the buyer would get from acquiring a small amount of the alternatives available.

It’s easy to make fun of this story. Nobody, not even Nobel-winning economists, really makes decisions that way. But most economists—myself included—nonetheless find Economic Man useful, with the understanding that he’s an idealized representation of what we really think is going on. People do have preferences, even if those preferences can’t really be expressed by a precise utility function; they usually make sensible decisions, even if they don’t literally maximize utility. You might ask, why not represent people the way they really are? The answer is that abstraction, strategic simplification, is the only way we can impose some intellectual order on the complexity of economic life. And the assumption of rational behavior has been a particularly fruitful simplification.

The question, however, is how far to push it. Keynes didn’t make an all-out assault on Economic Man, but he often resorted to plausible psychological theorizing rather than careful analysis of what a rational decision-maker would do. Business decisions were driven by “animal spirits,” consumer decisions by a psychological tendency to spend some but not all of any increase in income, wage settlements by a sense of fairness, and so on.

But was it really a good idea to diminish the role of Economic Man that much? No, said Friedman, who argued in his 1953 essay “The Methodology of Positive Economics” that economic theories should be judged not by their psychological realism but by their ability to predict behavior. And Friedman’s two greatest triumphs as an economic theorist came from applying the hypothesis of rational behavior to questions other economists had thought beyond its reach.

In his 1957 book A Theory of the Consumption Function—not exactly a crowd-pleasing title, but an important topic—Friedman argued that the best way to make sense of saving and spending was not, as Keynes had done, to resort to loose psychological theorizing, but rather to think of individuals as making rational plans about how to spend their wealth over their lifetimes. This wasn’t necessarily an anti-Keynesian idea—in fact, the great Keynesian economist Franco Modigliani simultaneously and independently made a similar case, with even more care in thinking about rational behavior, in work with Albert Ando. But it did mark a return to classical ways of thinking—and it worked. The details are a bit technical, but Friedman’s “permanent income hypothesis” and the Ando-Modigliani “life cycle model” resolved several apparent paradoxes about the relationship between income and spending, and remain the foundations of how economists think about spending and saving to this day.

Friedman’s work on consumption behavior would, in itself, have made his academic reputation. An even bigger triumph, however, came from his application of Economic Man theorizing to inflation. In 1958 the New Zealand–born economist A.W. Phillips pointed out that there was a historical correlation between unemployment and inflation, with high inflation associated with low unemployment and vice versa. For a time, economists treated this correlation as if it were a reliable and stable relationship. This led to serious discussion about which point on the “Phillips curve” the government should choose. For example, should the United States accept a higher inflation rate in order to achieve a lower unemployment rate?

In 1967, however, Friedman gave a presidential address to the American Economic Association in which he argued that the correlation between inflation and unemployment, even though it was visible in the data, did not represent a true trade-off, at least not in the long run. “There is,” he said, “always a temporary trade-off between inflation and unemployment; there is no permanent trade-off.” In other words, if policymakers were to try to keep unemployment low through a policy of generating higher inflation, they would achieve only temporary success. According to Friedman, unemployment would eventually rise again, even as inflation remained high. The economy would, in other words, suffer the condition Paul Samuelson would later dub “stagflation.”

How did Friedman reach this conclusion? (Edmund S. Phelps, who was awarded the Nobel Memorial Prize in economics this year, simultaneously and independently arrived at the same result.) As in the case of his work on consumer behavior, Friedman applied the idea of rational behavior. He argued that after a sustained period of inflation, people would build expectations of future inflation into their decisions, nullifying any positive effects of inflation on employment. For example, one reason inflation may lead to higher employment is that hiring more workers becomes profitable when prices rise faster than wages. But once workers understand that the purchasing power of their wages will be eroded by inflation, they will demand higher wage settlements in advance, so that wages keep up with prices. As a result, after inflation has gone on for a while, it will no longer deliver the original boost to employment. In fact, there will be a rise in unemployment if inflation falls short of expectations.
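
The logic of this argument can be illustrated with a toy calculation. The sketch below is not Friedman's or Phelps's own model; it is a minimal illustration that assumes a linear relationship between surprise inflation and unemployment, plus a simple rule for how expectations catch up. The function name, the natural rate of 5 percent, the sensitivity of 0.5, and the adjustment speed of 0.5 are all hypothetical choices made for readability.

```python
def simulate_unemployment(inflation, initial_expected=0.0, natural_rate=5.0,
                          sensitivity=0.5, adjustment=0.5, periods=10):
    """Return the unemployment path when inflation is held constant.

    Hypothetical model: unemployment departs from the natural rate only
    to the extent that actual inflation differs from expected inflation,
    and expectations move part of the way toward actual inflation each
    period.
    """
    expected = initial_expected
    path = []
    for _ in range(periods):
        # Only the unanticipated part of inflation affects employment.
        surprise = inflation - expected
        path.append(natural_rate - sensitivity * surprise)
        # Workers and firms revise their expectations toward what they saw.
        expected += adjustment * surprise
    return path


# Sustained 4 percent inflation, starting from expectations of stable prices.
boom = simulate_unemployment(inflation=4.0)
print([round(u, 2) for u in boom])

# Inflation falls to zero while people still expect 4 percent.
bust = simulate_unemployment(inflation=0.0, initial_expected=4.0)
print([round(u, 2) for u in bust])
```

Under these assumed numbers, holding inflation at 4 percent pushes unemployment down to 3 percent at first, but it drifts back toward 5 percent as expectations adjust. When inflation falls short of an expected 4 percent, unemployment starts at 7 percent, above the natural rate.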

At the time Friedman and Phelps propounded their ideas, the United States had little experience with sustained inflation. So this was truly a prediction rather than an attempt to explain the past. In the 1970s, however, persistent inflation provided a test of the Friedman-Phelps hypothesis. Sure enough, the historical correlation between inflation and unemployment broke down in just the way Friedman and Phelps had predicted: in the 1970s, as the inflation rate rose into double digits, the unemployment rate was as high as or higher than in the stable-price years of the 1950s and 1960s. Inflation was eventually brought under control in the 1980s, but only after a painful period of extremely high unemployment, the worst since the Great Depression.

By predicting the phenomenon of stagflation in advance, Friedman and Phelps achieved one of the great triumphs of postwar economics. This triumph, more than anything else, confirmed Milton Friedman’s status as a great economist’s economist, whatever one may think of his other roles.

One interesting footnote: although Friedman made great strides in macroeconomics by applying the concept of individual rationality, he also knew where to stop. In the 1970s, some economists pushed Friedman’s analysis of inflation even further, arguing that there is no usable trade-off between inflation and unemployment even in the short run, because people will anticipate government actions and build that anticipation, as well as past experience, into their price-setting and wage-bargaining. This doctrine, known as “rational expectations,” swept through much of academic economics. But Friedman never went there. His reality sense warned that this was taking the idea of Homo economicus too far. And so it proved: Friedman’s 1967 address has stood the test of time, while the more extreme views propounded by rational expectations theorists in the Seventies and Eighties have not.

3.

“Everything reminds Milton of the money supply. Well, everything reminds me of sex, but I keep it out of the paper,” wrote MIT’s Robert Solow in 1966. For decades, Milton Friedman’s public image and fame were defined largely by his pronouncements on monetary policy and his creation of the doctrine known as monetarism. It’s somewhat surprising to realize, then, that monetarism is now widely regarded as a failure, and that some of the things Friedman said about “money” and monetary policy—unlike what he said about consumption and inflation—appear to have been misleading, and perhaps deliberately so.

To understand what monetarism was all about, the first thing you need to know is that the word “money” doesn’t mean quite the same thing in Economese that it does in plain English. When economists talk of the money supply, they don’t mean wealth in the usual sense. They mean only those forms of wealth that can be used more or less directly to buy things. Currency—pieces of green paper with pictures of dead presidents on them—is money, and so are bank deposits on which you can write checks. But stocks, bonds, and real estate aren’t money, because they have to be converted into cash or bank deposits before they can be used to make purchases.

If the money supply consisted solely of currency, it would be under the direct control of the government—or, more precisely, the Federal Reserve, a monetary agency that, like its counterpart “central banks” in many other countries, is institutionally somewhat separate from the government proper. The fact that the money supply also includes bank deposits makes reality more complicated. The central bank has direct control only over the “monetary base”—the sum of currency in circulation, the currency banks hold in their vaults, and the deposits banks hold at the Federal Reserve—but not the deposits people have made in banks. Under normal circumstances, however, the Federal Reserve’s direct control over the monetary base is enough to give it effective control of the overall money supply as well.

Before Keynes, economists considered the money supply a primary tool of economic management. But Keynes argued that under depression conditions, when interest rates are very low, changes in the money supply have little effect on the economy. The logic went like this: when interest rates are 4 or 5 percent, nobody wants to sit on idle cash. But in a situation like that of 1935, when the interest rate on three-month Treasury bills was only 0.14 percent, there is very little incentive to take the risk of putting money to work. The central bank may try to spur the economy by printing large quantities of additional currency; but if the interest rate is already very low the additional cash is likely to languish in bank vaults or under mattresses. Thus Keynes argued that monetary policy, a change in the money supply to manage the economy, would be ineffective. And that’s why Keynes and his followers believed that fiscal policy—in particular, an increase in government spending—was necessary to get countries out of the Great Depression.

Why does this matter? Monetary policy is a highly technocratic, mostly apolitical form of government intervention in the economy. If the Fed decides to increase the money supply, all it does is purchase some government bonds from private banks, paying for the bonds by crediting the banks’ reserve accounts—in effect, all the Fed has to do is print some more monetary base. By contrast, fiscal policy involves the government much more deeply in the economy, often in a value-laden way: if politicians decide to use public works to promote employment, they need to decide what to build and where. Economists with a free-market bent, then, tend to want to believe that monetary policy is all that’s needed; those with a desire to see a more active government tend to believe that fiscal policy is essential.

Economic thinking after the triumph of the Keynesian revolution—as reflected, say, in the early editions of Paul Samuelson’s classic textbook—gave priority to fiscal policy, while monetary policy was relegated to the sidelines. As Friedman said in his 1967 address to the American Economic Association:

The wide acceptance of [Keynesian] views in the economics profession meant that for some two decades monetary policy was believed by all but a few reactionary souls to have been rendered obsolete by new economic knowledge. Money did not matter.

Although this may have been an exaggeration, monetary policy was held in relatively low regard through the 1940s and 1950s. Friedman, however, crusaded for the proposition that money did too matter, culminating in the 1963 publication of A Monetary History of the United States, 1867–1960, with Anna Schwartz.

Although A Monetary History is a vast work of extraordinary scholarship, covering a century of monetary developments, its most influential and controversial discussion concerned the Great Depression. Friedman and Schwartz claimed to have refuted Keynes’s pessimism about the effectiveness of monetary policy in depression conditions. “The contraction” of the economy, they declared, “is in fact a tragic testimonial to the importance of monetary forces.”

But what did they mean by that? From the beginning, the Friedman-Schwartz position seemed a bit slippery. And over time Friedman’s presentation of the story grew cruder, not subtler, and eventually began to seem—there’s no other way to say this—intellectually dishonest.

In interpreting the origins of the Depression, the distinction between the monetary base (currency plus bank reserves), which the Fed controls directly, and the money supply (currency plus bank deposits) is crucial. The monetary base went up during the early years of the Great Depression, rising from an average of $6.05 billion in 1929 to an average of $7.02 billion in 1933. But the money supply fell sharply, from $26.6 billion to $19.9 billion. This divergence mainly reflected the fallout from the wave of bank failures in 1930–1931: as the public lost faith in banks, people began holding their wealth in cash rather than bank deposits, and those banks that survived began keeping large quantities of cash on hand rather than lending it out, to avert the danger of a bank run. The result was much less lending, and hence much less spending, than there would have been if the public had continued to deposit cash into banks, and banks had continued to lend deposits out to businesses. And since a collapse of spending was the proximate cause of the Depression, the sudden desire of both individuals and banks to hold more cash undoubtedly made the slump worse.
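
The divergence is easier to see as arithmetic. The short calculation below uses only the four figures quoted above. The ratio of money supply to monetary base, which textbooks often call the money multiplier (a term this essay does not use), is introduced here purely for illustration, and the variable and function names are hypothetical.

```python
# Figures quoted in the text, in billions of dollars (annual averages).
monetary_base = {1929: 6.05, 1933: 7.02}
money_supply = {1929: 26.6, 1933: 19.9}


def percent_change(old, new):
    """Percentage change from old to new."""
    return 100.0 * (new - old) / old


def multiplier(year):
    """Dollars of money supply per dollar of monetary base in a given year."""
    return money_supply[year] / monetary_base[year]


base_change = percent_change(monetary_base[1929], monetary_base[1933])
supply_change = percent_change(money_supply[1929], money_supply[1933])

print(f"monetary base: {base_change:+.1f}%")
print(f"money supply:  {supply_change:+.1f}%")
print(f"ratio in 1929: {multiplier(1929):.2f}")
print(f"ratio in 1933: {multiplier(1933):.2f}")
```

On these figures the base rose by about 16 percent while the money supply fell by about 25 percent, so each dollar of base supported roughly $4.40 of money in 1929 but only about $2.80 in 1933. That drop in the ratio is the shift toward cash holding by the public and the banks.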

Friedman and Schwartz claimed that the fall in the money supply turned what might have been an ordinary recession into a catastrophic depression, itself an arguable point. But even if we grant that point for the sake of argument, one has to ask whether the Federal Reserve, which after all did increase the monetary base, can be said to have caused the fall in the overall money supply. At least initially, Friedman and Schwartz didn’t say that. What they said instead was that the Fed could have prevented the fall in the money supply, in particular by riding to the rescue of the failing banks during the crisis of 1930–1931. If the Fed had rushed to lend money to banks in trouble, the wave of bank failures might have been prevented, which in turn might have avoided both the public’s decision to hold cash rather than bank deposits, and the preference of the surviving banks for stashing deposits in their vaults rather than lending the funds out. And this, in turn, might have staved off the worst of the Depression.

An analogy may be helpful here. Suppose that a flu epidemic breaks out, and later analysis suggests that appropriate action by the Centers for Disease Control could have contained the epidemic. It would be fair to blame government officials for failing to take appropriate action. But it would be quite a stretch to say that the government caused the epidemic, or to use the CDC’s failure as a demonstration of the superiority of free markets over big government.

Yet many economists, and even more lay readers, have taken Friedman and Schwartz’s account to mean that the Federal Reserve actually caused the Great Depression—that the Depression is in some sense a demonstration of the evils of an excessively interventionist government. And in later years, as I’ve said, Friedman’s assertions grew cruder, as if to feed this misperception. In his 1967 presidential address he declared that “the US monetary authorities followed highly deflationary policies,” and that the money supply fell “because the Federal Reserve System forced or permitted a sharp reduction in the monetary base, because it failed to exercise the responsibilities assigned to it”—an odd assertion given that the monetary base, as we’ve seen, actually rose as the money supply was falling. (Friedman may have been referring to a couple of episodes along the way in which the monetary base fell modestly for brief periods, but even so his statement was highly misleading at best.)

By 1976 Friedman was telling readers of Newsweek that “the elementary truth is that the Great Depression was produced by government mismanagement,” a statement that his readers surely took to mean that the Depression wouldn’t have happened if only the government had kept out of the way—when in fact what Friedman and Schwartz claimed was that the government should have been more active, not less.

Why did historical disputes about the role of monetary policy in the 1930s matter so much in the 1960s? Partly because they fed into Friedman’s broader anti-government agenda, of which more below. But the more direct application was to Friedman’s advocacy of monetarism. According to this doctrine, the Federal Reserve should keep the money supply growing at a steady, low rate, say 3 percent a year—and not deviate from this target, no matter what is happening in the economy. The idea was to put monetary policy on autopilot, removing any discretion on the part of government officials.

Friedman’s case for monetarism was part economic, part political. Steady growth in the money supply, he argued, would lead to a reasonably stable economy. He never claimed that following his rule would eliminate all recessions, but he did argue that the wiggles in the economy’s growth path would be small enough to be tolerable—hence the assertion that the Great Depression wouldn’t have happened if the Fed had been following a monetarist rule. And along with this qualified faith in the stability of the economy under a monetary rule went Friedman’s unqualified contempt for the ability of Federal Reserve officials to do better if given discretion. Exhibit A for the Fed’s unreliability was the onset of the Great Depression, but Friedman could point to many other examples of policy gone wrong. “A monetary rule,” he wrote in 1972, “would insulate monetary policy both from arbitrary power of a small group of men not subject to control by the electorate and from the short-run pressures of partisan politics.”

Monetarism was a powerful force in economic debate for about three decades after Friedman first propounded the doctrine in his 1959 book A Program for Monetary Stability. Today, however, it is a shadow of its former self, for two main reasons.

First, when the United States and the United Kingdom tried to put monetarism into practice at the end of the 1970s, both experienced dismal results: in each country steady growth in the money supply failed to prevent severe recessions. The Federal Reserve officially adopted Friedman-type monetary targets in 1979, but effectively abandoned them in 1982 when the unemployment rate went into double digits. This abandonment was made official in 1984, and ever since then the Fed has engaged in precisely the sort of discretionary fine-tuning that Friedman decried. For example, the Fed responded to the 2001 recession by slashing interest rates and allowing the money supply to grow at rates that sometimes exceeded 10 percent per year. Once the Fed was satisfied that the recovery was solid, it reversed course, raising interest rates and allowing growth in the money supply to drop to zero.

Second, since the early 1980s the Federal Reserve and its counterparts in other countries have done a reasonably good job, undermining Friedman’s portrayal of central bankers as irredeemable bunglers. Inflation has stayed low, and recessions—except in Japan, of which more in a second—have been relatively brief and shallow. And all this happened in spite of fluctuations in the money supply that horrified monetarists, and led them—Friedman included—to predict disasters that failed to materialize. As David Warsh of The Boston Globe pointed out in 1992, “Friedman blunted his lance forecasting inflation in the 1980s, when he was deeply, frequently wrong.”

By 2004, the Economic Report of the President, written by the very conservative economists of the Bush administration, could nonetheless make the highly anti-monetarist declaration that “aggressive monetary policy”—not stable, steady-as-you-go, but aggressive—“can reduce the depth of a recession.”

Now, a word about Japan. During the 1990s Japan experienced a sort of minor-key reprise of the Great Depression. The unemployment rate never reached Depression levels, thanks to massive public works spending that had Japan, with less than half America’s population, pouring more concrete each year than the United States. But the very low interest rate conditions of the Great Depression reemerged in full. By 1998 the call money rate, the rate on overnight loans between banks, was literally zero.

And under those conditions, monetary policy proved just as ineffective as Keynes had said it was in the 1930s. The Bank of Japan, Japan’s equivalent of the Fed, could and did increase the monetary base. But the extra yen were hoarded, not spent. The only consumer durable goods selling well, some Japanese economists told me at the time, were safes. In fact, the Bank of Japan found itself unable even to increase the money supply as much as it wanted. It pushed vast quantities of cash into circulation, but broader measures of the money supply grew very little. An economic recovery finally began a couple of years ago, driven by a revival of business investment to take advantage of new technological opportunities. But monetary policy never was able to get any traction.

In effect, Japan in the Nineties offered a fresh opportunity to test the views of Friedman and Keynes regarding the effectiveness of monetary policy in depression conditions. And the results clearly supported Keynes’s pessimism rather than Friedman’s optimism.

4.

In 1946 Milton Friedman made his debut as a popularizer of free-market economics with a pamphlet titled “Roofs or Ceilings: The Current Housing Problem,” coauthored with George J. Stigler, who would later join him at the University of Chicago. The pamphlet, an attack on the rent controls that were still universal just after World War II, was released under rather odd circumstances: it was a publication of the Foundation for Economic Education, an organization which, as Rick Perlstein writes in Before the Storm (2001), his book about the origins of the modern conservative movement, “spread a libertarian gospel so uncompromising it bordered on anarchism.” Robert Welch, the founder of the John Birch Society, sat on the FEE’s board. This first venture in free-market popularization prefigured in two ways the course of Friedman’s career as a public intellectual over the next six decades.

First, the pamphlet demonstrated Friedman’s special willingness to take free-market ideas to their logical limits. Neither the idea that markets are efficient ways to allocate scarce goods nor the proposition that price controls create shortages and inefficiency was new. But many economists, fearing the backlash against a sudden rise in rents (which Friedman and Stigler predicted would be about 30 percent for the nation as a whole), might have proposed some kind of gradual transition to decontrol. Friedman and Stigler dismissed all such concerns.

In the decades ahead, this single-mindedness would become Friedman’s trademark. Again and again, he called for market solutions to problems—education, health care, the illegal drug trade—that almost everyone else thought required extensive government intervention. Some of his ideas have received widespread acceptance, like replacing rigid rules on pollution with a system of pollution permits that companies are free to buy and sell. Some, like school vouchers, are broadly supported by the conservative movement but haven’t gotten far politically. And some of his proposals, like eliminating licensing procedures for doctors and abolishing the Food and Drug Administration, are considered outlandish even by most conservatives.

Second, the pamphlet showed just how good Friedman was as a popularizer. It’s beautifully and cunningly written. There is no jargon; the points are made with cleverly chosen real-world examples, ranging from San Francisco’s rapid recovery from the 1906 earthquake to the plight of a 1946 veteran, newly discharged from the army, searching in vain for a decent place to live. The same style, enhanced by video, would mark Friedman’s celebrated 1980 TV series Free to Choose.

The odds are that the great swing back toward laissez-faire policies that took place around the world beginning in the 1970s would have happened even if there had been no Milton Friedman. But his tireless and brilliantly effective campaign on behalf of free markets surely helped accelerate the process, both in the United States and around the world. By any measure—protectionism versus free trade; regulation versus deregulation; wages set by collective bargaining and government minimum wages versus wages set by the market—the world has moved a long way in Friedman’s direction. And even more striking than his achievement in terms of actual policy changes has been the transformation of the conventional wisdom: most influential people have been so converted to the Friedman way of thinking that it is simply taken as a given that the change in economic policies he promoted has been a force for good. But has it?

Consider first the macroeconomic performance of the US economy. We have data on the real income—that is, income adjusted for inflation—of American families from 1947 to 2005. During the first half of that fifty-eight-year stretch, from 1947 to 1976, Milton Friedman was a voice crying in the wilderness, his ideas ignored by policymakers. But the economy, for all the inefficiencies he decried, delivered dramatic improvements in the standard of living of most Americans: median real income more than doubled. By contrast, the period since 1976 has been one of increasing acceptance of Friedman’s ideas; although there remained plenty of government intervention for him to complain about, there was no question that free-market policies became much more widespread. Yet gains in living standards have been far less robust than they were during the previous period: median real income was only about 23 percent higher in 2005 than in 1976.
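
To put the two periods on a comparable footing, the cumulative gains can be converted into average annual growth rates. The sketch below treats "more than doubled" as exactly doubled, which is a floor rather than the actual figure, and uses the 23 percent gain as quoted. Both periods span 29 years. The function name is hypothetical.

```python
def annualized_growth(total_factor, years):
    """Average annual growth rate, in percent, implied by a cumulative factor."""
    return 100.0 * (total_factor ** (1.0 / years) - 1.0)


# 1947 to 1976: median real income at least doubled (factor 2.0 is a floor).
first_generation = annualized_growth(2.0, 1976 - 1947)

# 1976 to 2005: median real income about 23 percent higher.
second_generation = annualized_growth(1.23, 2005 - 1976)

print(f"1947-1976: at least {first_generation:.1f}% per year")
print(f"1976-2005: about {second_generation:.1f}% per year")
```

Under these assumptions the first period works out to at least about 2.4 percent a year, against roughly 0.7 percent a year in the second.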

Part of the reason the second postwar generation didn’t do as well as the first was a slower overall rate of economic growth—a fact that may come as a surprise to those who assume that the trend toward free markets has yielded big economic dividends. But another important reason for the lag in most families’ living standards was a spectacular increase in economic inequality: during the first postwar generation income growth was broadly spread across the population, but since the late 1970s median income, the income of the typical family, has risen only about a third as fast as average income, which includes the soaring incomes of a small minority at the top.

This raises an interesting point. Milton Friedman often assured audiences that no special institutions, like minimum wages and unions, were needed to ensure that workers would share in the benefits of economic growth. In 1976 he told Newsweek readers that tales of the evil done by the robber barons were pure myth:

There is probably no other period in history, in this or any other country, in which the ordinary man had as large an increase in his standard of living as in the period between the Civil War and the First World War, when unrestrained individualism was most rugged.

(What about the remarkable thirty-year stretch after World War II, which encompassed much of Friedman’s own career?) Yet in the decades that followed that pronouncement, as the minimum wage was allowed to fall behind inflation and unions largely disappeared as an important factor in the private sector, working Americans saw their fortunes lag behind growth in the economy as a whole. Was Friedman too sanguine about the generosity of the invisible hand?

To be fair, there are many factors affecting both economic growth and the distribution of income, so we can’t blame Friedmanite policies for all disappointments. Still, given the common assumption that the turn toward free-market policies did great things for the US economy and the living standards of ordinary Americans, it’s striking how little support one can find for that proposition in the data.

Similar questions about the lack of clear evidence that Friedman’s ideas actually work in practice can be raised, with even more force, for Latin America. A decade ago it was common to cite the success of the Chilean economy, where Augusto Pinochet’s Chicago-educated advisers turned to free-market policies after Pinochet seized power in 1973, as proof that Friedman-inspired policies showed the path to successful economic development. But although other Latin nations, from Mexico to Argentina, have followed Chile’s lead in freeing up trade, privatizing industries, and deregulating, Chile’s success story has not been replicated.

On the contrary, the perception of most Latin Americans is that “neoliberal” policies have been a failure: the promised takeoff in economic growth never arrived, while income inequality has worsened. I don’t mean to blame everything that has gone wrong in Latin America on the Chicago School, or to idealize what went before; but there is a striking contrast between the perception that Friedman was vindicated and the actual results in economies that turned from the interventionist policies of the early postwar decades to laissez-faire.

On a more narrowly focused topic, one of Friedman’s key targets was what he considered the uselessness and counterproductive nature of most government regulation. In an obituary for his one-time collaborator George Stigler, Friedman singled out for praise Stigler’s critique of electricity regulation, and his argument that regulators usually end up serving the interests of the regulated rather than those of the public. So how has deregulation worked out?

It started well, with the deregulation of trucking and airlines beginning in the late 1970s. In both cases deregulation, while it didn’t make everyone happy, led to increased competition, generally lower prices, and higher efficiency. Deregulation of natural gas was also a success.

But the next big wave of deregulation, in the electricity sector, was a different story. Just as Japan’s slump in the 1990s showed that Keynesian worries about the effectiveness of monetary policy were no myth, the California electricity crisis of 2000–2001, in which power companies and energy traders created an artificial shortage to drive up prices, reminded us of the reality that lay behind tales of the robber barons and their depredations. While other states didn’t suffer as severely as California, across the nation electricity deregulation led to higher, not lower, prices, with huge windfall profits for power companies.

Those states that, for whatever reason, didn’t get on the deregulation bandwagon in the 1990s now consider themselves lucky. And the luckiest of all are those cities that somehow didn’t get the memo about the evils of government and the virtues of the private sector, and still have publicly owned power companies. All of this showed that the original rationale for electricity regulation—the observation that without regulation, power companies would have too much monopoly power—remains as valid as ever.

Should we conclude from this that deregulation is always a bad idea? No—it depends on the specifics. To conclude that deregulation is always and everywhere a bad idea would be to engage in the same kind of absolutist thinking that was, arguably, Milton Friedman’s greatest flaw.

In his 1965 review of Friedman and Schwartz’s Monetary History, the late Yale economist and Nobel laureate James Tobin gently chided the authors for going too far. “Consider the following three propositions,” he wrote. “Money does not matter. It does too matter. Money is all that matters. It is all too easy to slip from the second proposition to the third.” And he added that “in their zeal and exuberance” Friedman and his followers had too often done just that.

A similar sequence seems to have happened in Milton Friedman’s advocacy of laissez-faire. In the aftermath of the Great Depression, there were many people saying that markets can never work. Friedman had the intellectual courage to say that markets can too work, and his showman’s flair combined with his ability to marshal evidence made him the best spokesman for the virtues of free markets since Adam Smith. But he slipped all too easily into claiming both that markets always work and that only markets work. It’s extremely hard to find cases in which Friedman acknowledged the possibility that markets could go wrong, or that government intervention could serve a useful purpose.

Friedman’s laissez-faire absolutism contributed to an intellectual climate in which faith in markets and disdain for government often trump the evidence. Developing countries rushed to open up their capital markets, despite warnings that this might expose them to financial crises; then, when the crises duly arrived, many observers blamed the countries’ governments, not the instability of international capital flows. Electricity deregulation proceeded despite clear warnings that monopoly power might be a problem; in fact, even as the California electricity crisis was happening, most commentators dismissed concerns about price-rigging as wild conspiracy theories. Conservatives continue to insist that the free market is the answer to the health care crisis, in the teeth of overwhelming evidence to the contrary.

What’s odd about Friedman’s absolutism on the virtues of markets and the vices of government is that in his work as an economist’s economist he was actually a model of restraint. As I pointed out earlier, he made great contributions to economic theory by emphasizing the role of individual rationality—but unlike some of his colleagues, he knew where to stop. Why didn’t he exhibit the same restraint in his role as a public intellectual?

The answer, I suspect, is that he got caught up in an essentially political role. Milton Friedman the great economist could and did acknowledge ambiguity. But Milton Friedman the great champion of free markets was expected to preach the true faith, not give voice to doubts. And he ended up playing the role his followers expected. As a result, over time the refreshing iconoclasm of his early career hardened into a rigid defense of what had become the new orthodoxy.

In the long run, great men are remembered for their strengths, not their weaknesses, and Milton Friedman was a very great man indeed: a man of intellectual courage who was one of the most important economic thinkers of all time, and possibly the most brilliant communicator of economic ideas to the general public who ever lived. But there’s a good case for arguing that Friedmanism, in the end, went too far, both as a doctrine and in its practical applications. When Friedman was beginning his career as a public intellectual, the times were ripe for a counter-reformation against Keynesianism and all that went with it. But what the world needs now, I’d argue, is a counter-counter-reformation.

February 15, 2007

* See Paul A. Samuelson, Economics: The Original 1948 Edition (McGraw-Hill, 1997).