The following is adapted from a lecture given at New York University on October 19, 2009.

Americans would like things to be better. According to public opinion surveys in recent years, everyone would like their child to have improved life chances at birth. They would prefer it if their wife or daughter had the same odds of surviving maternity as women in other advanced countries. They would appreciate full medical coverage at lower cost, longer life expectancy, better public services, and less crime.

When told that these things are available in Austria, Scandinavia, or the Netherlands, but that they come with higher taxes and an “interventionary” state, many of those same Americans respond: “But that is socialism! We do not want the state interfering in our affairs. And above all, we do not wish to pay more taxes.”

This curious cognitive dissonance is an old story. A century ago, the German sociologist Werner Sombart famously asked: Why is there no socialism in America? There are many answers to this question. Some have to do with the sheer size of the country: shared purposes are difficult to organize and sustain on an imperial scale. There are also, of course, cultural factors, including the distinctively American suspicion of central government.

And indeed, it is not by chance that social democracy and welfare states have worked best in small, homogeneous countries, where issues of mistrust and mutual suspicion do not arise so acutely. A willingness to pay for other people’s services and benefits rests upon the understanding that they in turn will do likewise for you and your children: because they are like you and see the world as you do.

Conversely, where immigration and visible minorities have altered the demography of a country, we typically find increased suspicion of others and a loss of enthusiasm for the institutions of the welfare state. Finally, it is incontrovertible that social democracy and the welfare states face serious practical challenges today. Their survival is not in question, but they are no longer as self-confident as they once appeared.

But my concern tonight is the following: Why is it that here in the United States we have such difficulty even imagining a different sort of society from the one whose dysfunctions and inequalities trouble us so? We appear to have lost the capacity to question the present, much less offer alternatives to it. Why is it so beyond us to conceive of a different set of arrangements to our common advantage?

Our shortcoming—forgive the academic jargon—is discursive. We simply do not know how to talk about these things. To understand why this should be the case, some history is in order: as Keynes once observed, “A study of the history of opinion is a necessary preliminary to the emancipation of the mind.” For the purposes of mental emancipation this evening, I propose that we take a minute to study the history of a prejudice: the universal contemporary resort to “economism,” the invocation of economics in all discussions of public affairs.

For the last thirty years, in much of the English-speaking world (though less so in continental Europe and elsewhere), when asking ourselves whether we support a proposal or initiative, we have not asked, is it good or bad? Instead we inquire: Is it efficient? Is it productive? Would it benefit gross domestic product? Will it contribute to growth? This propensity to avoid moral considerations, to restrict ourselves to issues of profit and loss—economic questions in the narrowest sense—is not an instinctive human condition. It is an acquired taste.

We have been here before. In 1905, the young William Beveridge—whose 1942 report would lay the foundations of the British welfare state—delivered a lecture at Oxford in which he asked why it was that political philosophy had been obscured in public debates by classical economics. Beveridge’s question applies with equal force today. Note, however, that this eclipse of political thought bears no relation to the writings of the great classical economists themselves. In the eighteenth century, what Adam Smith called “moral sentiments” were uppermost in economic conversations.

Indeed, the thought that we might restrict public policy considerations to a mere economic calculus was already a source of concern. The Marquis de Condorcet, one of the most perceptive writers on commercial capitalism in its early years, anticipated with distaste the prospect that “liberty will be no more, in the eyes of an avid nation, than the necessary condition for the security of financial operations.” The revolutions of the age risked fostering a confusion between the freedom to make money…and freedom itself. But how did we, in our own time, come to think in exclusively economic terms? The fascination with an etiolated economic vocabulary did not come out of nowhere.

On the contrary, we live in the long shadow of a debate with which most people are altogether unfamiliar. If we ask who exercised the greatest influence over contemporary Anglophone economic thought, five foreign-born thinkers spring to mind: Ludwig von Mises, Friedrich Hayek, Joseph Schumpeter, Karl Popper, and Peter Drucker. The first two were the outstanding “grandfathers” of the Chicago School of free-market macroeconomics. Schumpeter is best known for his enthusiastic description of the “creative, destructive” powers of capitalism, Popper for his defense of the “open society” and his theory of totalitarianism. As for Drucker, his writings on management exercised enormous influence over the theory and practice of business in the prosperous decades of the postwar boom.

Three of these men were born in Vienna, a fourth (von Mises) in Austrian Lemberg (now Lvov), the fifth (Schumpeter) in Moravia, a few dozen miles north of the imperial capital. All were profoundly shaken by the interwar catastrophe that struck their native Austria. Following the cataclysm of World War I and a brief socialist municipal experiment in Vienna, the country fell to a reactionary coup in 1934 and then, four years later, to the Nazi invasion and occupation.

All were forced into exile by these events and all—Hayek in particular—were to cast their writings and teachings in the shadow of the central question of their lifetime: Why had liberal society collapsed and given way—at least in the Austrian case—to fascism? Their answer: the unsuccessful attempts of the (Marxist) left to introduce into post-1918 Austria state-directed planning, municipally owned services, and collectivized economic activity had not only proven delusionary, but had led directly to a counterreaction.

The European tragedy had thus been brought about by the failure of the left: first to achieve its objectives and then to defend itself and its liberal heritage. Each, albeit in contrasting keys, drew the same conclusion: the best way to defend liberalism, the best defense of an open society and its attendant freedoms, was to keep government far away from economic life. If the state was held at a safe distance, if politicians—however well-intentioned—were barred from planning, manipulating, or directing the affairs of their fellow citizens, then extremists of right and left alike would be kept at bay.

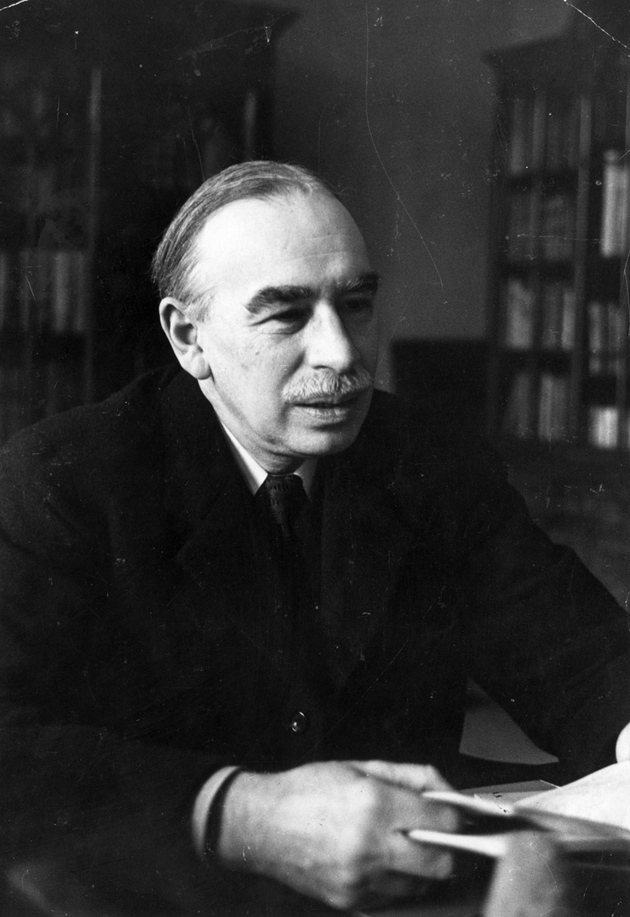

The same challenge—how to understand what had happened between the wars and prevent its recurrence—was confronted by John Maynard Keynes. The great English economist, born in 1883 (the same year as Schumpeter), grew up in a stable, confident, prosperous, and powerful Britain. And then, from his privileged perch at the Treasury and as a participant in the Versailles peace negotiations, he watched his world collapse, taking with it all the reassuring certainties of his culture and class. Keynes, too, would ask himself the question that Hayek and his Austrian colleagues had posed. But he offered a very different answer.

Yes, Keynes acknowledged, the disintegration of late Victorian Europe was the defining experience of his lifetime. Indeed, the essence of his contributions to economic theory was his insistence upon uncertainty: in contrast to the confident nostrums of classical and neoclassical economics, Keynes would insist upon the essential unpredictability of human affairs. If there was a lesson to be drawn from depression, fascism, and war, it was this: uncertainty—elevated to the level of insecurity and collective fear—was the corrosive force that had threatened and might again threaten the liberal world.

Thus Keynes sought an increased role for the social security state, including but not confined to countercyclical economic intervention. Hayek proposed the opposite. In his 1944 classic, The Road to Serfdom, he wrote:

No description in general terms can give an adequate idea of the similarity of much of current English political literature to the works which destroyed the belief in Western civilization in Germany, and created the state of mind in which naziism could become successful.

In other words, Hayek explicitly projected a fascist outcome should Labour win power in England. And indeed, Labour did win. But it went on to implement policies many of which were directly identified with Keynes. For the next three decades, Great Britain (like much of the Western world) was governed in the light of Keynes’s concerns.

Since then, as we know, the Austrians have had their revenge. Quite why this should have happened—and happened where it did—is an interesting question for another occasion. But for whatever reason, we are today living out the dim echo—like light from a fading star—of a debate conducted seventy years ago by men born for the most part in the late nineteenth century. To be sure, the economic terms in which we are encouraged to think are not conventionally associated with these far-off political disagreements. And yet without an understanding of the latter, it is as though we speak a language we do not fully comprehend.

The welfare state had remarkable achievements to its credit. In some countries it was social democratic, grounded in an ambitious program of socialist legislation; in others—Great Britain, for example—it amounted to a series of pragmatic policies aimed at alleviating disadvantage and reducing extremes of wealth and indigence. The common theme and universal accomplishment of the neo-Keynesian governments of the postwar era was their remarkable success in curbing inequality. If you compare the gap separating rich and poor, whether by income or assets, in all continental European countries along with Great Britain and the US, you will see that it shrinks dramatically in the generation following 1945.

With greater equality there came other benefits. Over time, the fear of a return to extremist politics—the politics of desperation, the politics of envy, the politics of insecurity—abated. The Western industrialized world entered a halcyon era of prosperous security: a bubble, perhaps, but a comforting bubble in which most people did far better than they could ever have hoped in the past and had good reason to anticipate the future with confidence.

The paradox of the welfare state, and indeed of all the social democratic (and Christian Democratic) states of Europe, was quite simply that their success would over time undermine their appeal. The generation that remembered the 1930s was understandably the most committed to preserving institutions and systems of taxation, social service, and public provision that they saw as bulwarks against a return to the horrors of the past. But their successors—even in Sweden—began to forget why they had sought such security in the first place.

It was social democracy that bound the middle classes to liberal institutions in the wake of World War II (I use “middle class” here in the European sense). They received in many cases the same welfare assistance and services as the poor: free education, cheap or free medical treatment, public pensions, and the like. In consequence, the European middle class found itself by the 1960s with far greater disposable incomes than ever before, with so many of life’s necessities prepaid in tax. And thus the very class that had been so exposed to fear and insecurity in the interwar years was now tightly woven into the postwar democratic consensus.

By the late 1970s, however, such considerations were increasingly neglected. Starting with the tax and employment reforms of the Thatcher-Reagan years, and followed in short order by deregulation of the financial sector, inequality has once again become an issue in Western society. After notably diminishing from the 1910s through the 1960s, the inequality index has steadily grown over the course of the past three decades.

In the US today, the “Gini coefficient”—a measure of the distance separating rich and poor—is comparable to that of China.[1] When we consider that China is a developing country where huge gaps will inevitably open up between the wealthy few and the impoverished many, the fact that here in the US we have a similar inequality coefficient says much about how far we have fallen behind our earlier aspirations.

Consider the 1996 “Personal Responsibility and Work Opportunity Reconciliation Act” (a more Orwellian title would be hard to conceive), the Clinton-era legislation that sought to gut welfare provision here in the US. The terms of this act should put us in mind of another act, passed in England nearly two centuries ago: the New Poor Law of 1834. The provisions of the New Poor Law are familiar to us, thanks to Charles Dickens’s depiction of its workings in Oliver Twist. When Noah Claypole famously sneers at little Oliver, calling him “Work’us” (“Workhouse”), he is implying, for 1838, precisely what we convey today when we speak disparagingly of “welfare queens.”

The New Poor Law was an outrage, forcing the indigent and the unemployed to choose between work at any wage, however low, and the humiliation of the workhouse. Here and in most other forms of nineteenth-century public assistance (still thought of and described as “charity”), the level of aid and support was calibrated so as to be less appealing than the worst available alternative. This system drew on classical economic theories that denied the very possibility of unemployment in an efficient market: if wages fell low enough and there was no attractive alternative to work, everyone would find a job.

For the next 150 years, reformers strove to replace such demeaning practices. In due course, the New Poor Law and its foreign analogues were succeeded by the public provision of assistance as a matter of right. Workless citizens were no longer deemed any the less deserving for that; they were not penalized for their condition nor were implicit aspersions cast upon their good standing as members of society. More than anything else, the welfare states of the mid-twentieth century established the profound impropriety of defining civic status as a function of economic participation.

In the contemporary United States, at a time of growing unemployment, a jobless man or woman is not a full member of the community. In order to receive even the exiguous welfare payments available, they must first have sought and, where applicable, accepted employment at whatever wage is on offer, however low the pay and distasteful the work. Only then are they entitled to the consideration and assistance of their fellow citizens.

Why do so few of us condemn such “reforms”—enacted under a Democratic president? Why are we so unmoved by the stigma attaching to their victims? Far from questioning this reversion to the practices of early industrial capitalism, we have adapted all too well and in consensual silence—in revealing contrast to an earlier generation. But then, as Tolstoy reminds us, there are “no conditions of life to which a man cannot get accustomed, especially if he sees them accepted by everyone around him.”

This “disposition to admire, and almost to worship, the rich and the powerful, and to despise, or, at least, to neglect persons of poor and mean condition…is…the great and most universal cause of the corruption of our moral sentiments.” Those are not my words. They were written by Adam Smith, who regarded the likelihood that we would come to admire wealth and despise poverty, admire success and scorn failure, as the greatest risk facing us in the commercial society whose advent he predicted. It is now upon us.

The most revealing instance of the kind of problem we face comes in a form that may strike many of you as a mere technicality: the process of privatization. In the last thirty years, a cult of privatization has mesmerized Western (and many non-Western) governments. Why? The shortest response is that, in an age of budgetary constraints, privatization appears to save money. If the state owns an inefficient public program or an expensive public service—a waterworks, a car factory, a railway—it seeks to offload it onto private buyers.

The sale duly earns money for the state. Meanwhile, by entering the private sector, the service or operation in question becomes more efficient thanks to the working of the profit motive. Everyone benefits: the service improves, the state rids itself of an inappropriate and poorly managed responsibility, investors profit, and the public sector makes a one-time gain from the sale.

So much for the theory. The practice is very different. What we have been watching these past decades is the steady shifting of public responsibility onto the private sector to no discernible collective advantage. In the first place, privatization is inefficient. Most of the things that governments have seen fit to pass into the private sector were operating at a loss: whether they were railway companies, coal mines, postal services, or energy utilities, they cost more to provide and maintain than they could ever hope to attract in revenue.

For just this reason, such public goods were inherently unattractive to private buyers unless offered at a steep discount. But when the state sells cheap, the public takes a loss. It has been calculated that, in the course of the Thatcher-era UK privatizations, the deliberately low price at which long-standing public assets were marketed to the private sector resulted in a net transfer of £14 billion from the taxpaying public to stockholders and other investors.

To this loss should be added a further £3 billion in fees to the banks that transacted the privatizations. Thus the state in effect paid the private sector some £17 billion ($30 billion) to facilitate the sale of assets for which there would otherwise have been no takers. These are significant sums of money—approximating the endowment of Harvard University, for example, or the annual gross domestic product of Paraguay or Bosnia-Herzegovina.[2] This can hardly be construed as an efficient use of public resources.

In the second place, there arises the question of moral hazard. The only reason that private investors are willing to purchase apparently inefficient public goods is because the state eliminates or reduces their exposure to risk. In the case of the London Underground, for example, the purchasing companies were assured that whatever happened they would be protected against serious loss—thereby undermining the classic economic case for privatization: that the profit motive encourages efficiency. The “hazard” in question is that the private sector, under such privileged conditions, will prove at least as inefficient as its public counterpart—while creaming off such profits as are to be made and charging losses to the state.

The third and perhaps most telling case against privatization is this. There can be no doubt that many of the goods and services that the state seeks to divest have been badly run: incompetently managed, underinvested, etc. Nevertheless, however badly run, postal services, railway networks, retirement homes, prisons, and other provisions targeted for privatization remain the responsibility of the public authorities. Even after they are sold, they cannot be left entirely to the vagaries of the market. They are inherently the sort of activity that someone has to regulate.

This semiprivate, semipublic disposition of essentially collective responsibilities returns us to a very old story indeed. If your tax returns are audited in the US today, although it is the government that has decided to investigate you, the investigation itself will very likely be conducted by a private company. The latter has contracted to perform the service on the state’s behalf, in much the same way that private agents have contracted with Washington to provide security, transportation, and technical know-how (at a profit) in Iraq and elsewhere. In a similar way, the British government today contracts with private entrepreneurs to provide residential care services for the elderly—a responsibility once controlled by the state.

Governments, in short, farm out their responsibilities to private firms that claim to administer them more cheaply and better than the state can itself. In the eighteenth century this was called tax farming. Early modern governments often lacked the means to collect taxes and thus invited bids from private individuals to undertake the task. The highest bidder would get the job, and was free—once he had paid the agreed sum—to collect whatever he could and retain the proceeds. The government thus took a discount on its anticipated tax revenue, in return for cash up front.

After the fall of the monarchy in France, it was widely conceded that tax farming was grotesquely inefficient. In the first place, it discredits the state, represented in the popular mind by a grasping private profiteer. Secondly, it generates considerably less revenue than an efficiently administered system of government collection, if only because of the profit margin accruing to the private collector. And thirdly, you get disgruntled taxpayers.

In the US today, we have a discredited state and inadequate public resources. Interestingly, we do not have disgruntled taxpayers—or, at least, they are usually disgruntled for the wrong reasons. Nevertheless, the problem we have created for ourselves is essentially comparable to that which faced the ancien régime.

As in the eighteenth century, so today: by eviscerating the state’s responsibilities and capacities, we have diminished its public standing. The outcome is “gated communities,” in every sense of the word: subsections of society that fondly suppose themselves functionally independent of the collectivity and its public servants. If we deal uniquely or overwhelmingly with private agencies, then over time we dilute our relationship with a public sector for which we have no apparent use. It doesn’t much matter whether the private sector does the same things better or worse, at higher or lower cost. In either event, we have diminished our allegiance to the state and lost something vital that we ought to share—and in many cases used to share—with our fellow citizens.

This process was well described by one of its greatest modern practitioners: Margaret Thatcher reportedly asserted that “there is no such thing as society. There are only individual men and women and families.” But if there is no such thing as society, merely individuals and the “night watchman” state—overseeing from afar activities in which it plays no part—then what will bind us together? We already accept the existence of private police forces, private mail services, private agencies provisioning the state in war, and much else besides. We have “privatized” precisely those responsibilities that the modern state laboriously took upon itself in the course of the nineteenth and early twentieth centuries.

What, then, will serve as a buffer between citizens and the state? Surely not “society,” hard pressed to survive the evisceration of the public domain. For the state is not about to wither away. Even if we strip it of all its service attributes, it will still be with us—if only as a force for control and repression. Between state and individuals there would then be no intermediate institutions or allegiances: nothing would remain of the spider’s web of reciprocal services and obligations that bind citizens to one another via the public space they collectively occupy. All that would be left is private persons and corporations seeking competitively to hijack the state for their own advantage.

The consequences are no more attractive today than they were before the modern state arose. Indeed, the impetus to state-building as we have known it derived quite explicitly from the understanding that no collection of individuals can survive long without shared purposes and common institutions. The very notion that private advantage could be multiplied to public benefit was already palpably absurd to the liberal critics of nascent industrial capitalism. In the words of John Stuart Mill, “the idea is essentially repulsive of a society only held together by the relations and feelings arising out of pecuniary interests.”

What, then, is to be done? We have to begin with the state: as the incarnation of collective interests, collective purposes, and collective goods. If we cannot learn to “think the state” once again, we shall not get very far. But what precisely should the state do? Minimally, it should not duplicate unnecessarily: as Keynes wrote, “The important thing for Government is not to do things which individuals are doing already, and to do them a little better or a little worse; but to do those things which at present are not done at all.” And we know from the bitter experience of the past century that there are some things that states should most certainly not be doing.

The twentieth-century narrative of the progressive state rested precariously upon the conceit that “we”—reformers, socialists, radicals—had History on our side: that our projects, in the words of the late Bernard Williams, were “being cheered on by the universe.”[3] Today, we have no such reassuring story to tell. We have just survived a century of doctrines purporting with alarming confidence to say what the state should do and to remind individuals—forcibly if necessary—that the state knows what is good for them. We cannot return to all that. So if we are to “think the state” once more, we had better begin with a sense of its limits.

For similar reasons, it would be futile to resurrect the rhetoric of early-twentieth-century social democracy. In those years, the democratic left emerged as an alternative to the more uncompromising varieties of Marxist revolutionary socialism and—in later years—to their Communist successor. Inherent in social democracy there was thus a curious schizophrenia. While marching confidently forward into a better future, it was constantly glancing nervously over its left shoulder. We, it seems to say, are not authoritarian. We are for freedom, not repression. We are democrats who also believe in social justice, regulated markets, and so forth.

So long as the primary objective of social democrats was to convince voters that they were a respectable radical choice within the liberal polity, this defensive stance made sense. But today such rhetoric is incoherent. It is not by chance that a Christian Democrat like Angela Merkel can win an election in Germany against her Social Democratic opponents—even at the height of a financial crisis—with a set of policies that in all its important essentials resembles their own program.

Social democracy, in one form or another, is the prose of contemporary European politics. There are very few European politicians, and certainly fewer still in positions of influence, who would dissent from core social democratic assumptions about the duties of the state, however much they might differ as to their scope. Consequently, social democrats in today’s Europe have nothing distinctive to offer: in France, for example, even their unreflective disposition to favor state ownership hardly distinguishes them from the Colbertian instincts of the Gaullist right. Social democracy needs to rethink its purposes.

The problem lies not in social democratic policies, but in the language in which they are couched. Since the authoritarian challenge from the left has lapsed, the emphasis upon “democracy” is largely redundant. We are all democrats today. But “social” still means something—arguably more now than some decades back when a role for the public sector was uncontentiously conceded by all sides. What, then, is distinctive about the “social” in the social democratic approach to politics?

Imagine, if you will, a railway station. A real railway station, not New York’s Pennsylvania Station: a failed 1960s-era shopping mall stacked above a coal cellar. I mean something like Waterloo Station in London, the Gare de l’Est in Paris, Mumbai’s dramatic Victoria Terminus, or Berlin’s magnificent new Hauptbahnhof. In these remarkable cathedrals of modern life, the private sector functions perfectly well in its place: there is no reason, after all, why newsstands or coffee bars should be run by the state. Anyone who can recall the desiccated, plastic-wrapped sandwiches of British Rail’s cafés will concede that competition in this arena is to be encouraged.

But you cannot run trains competitively. Railways—like agriculture or the mails—are at one and the same time an economic activity and an essential public good. Moreover, you cannot render a railway system more efficient by placing two trains on a track and waiting to see which performs better: railways are a natural monopoly. Implausibly, the English have actually instituted such competition among bus services. But the paradox of public transport, of course, is that the better it does its job, the less “efficient” it may be.

A bus that provides an express service for those who can afford it and avoids remote villages where it would be boarded only by the occasional pensioner will make more money for its owner. But someone—the state or the local municipality—must still provide the unprofitable, inefficient local service. In its absence, the short-term economic benefits of cutting the provision will be offset by long-term damage to the community at large. Predictably, therefore, the consequences of “competitive” buses—except in London where there is enough demand to go around—have been an increase in costs assigned to the public sector; a sharp rise in fares to the level that the market can bear; and attractive profits for the express bus companies.

Trains, like buses, are above all a social service. Anyone could run a profitable rail line if all they had to do was shunt expresses back and forth from London to Edinburgh, Paris to Marseilles, Boston to Washington. But what of rail links to and from places where people take the train only occasionally? No single person is going to set aside sufficient funds to pay the economic cost of supporting such a service for the infrequent occasions when he uses it. Only the collectivity—the state, the government, the local authorities—can do this. The subsidy required will always appear inefficient in the eyes of a certain sort of economist: Surely it would be cheaper to rip up the tracks and let everyone use their car?

In 1996, the last year before Britain’s railways were privatized, British Rail boasted the lowest public subsidy for a railway in Europe. In that year the French were planning for their railways an investment rate of £21 per head of population; the Italians £33; the British just £9.[4] These contrasts were accurately reflected in the quality of the service provided by the respective national systems. They also explain why the British rail network could be privatized only at great loss, so inadequate was its infrastructure.

But the investment contrast illustrates my point. The French and the Italians have long treated their railways as a social provision. Running a train to a remote region, however cost-ineffective, sustains local communities. It reduces environmental damage by providing an alternative to road transport. The railway station and the service it provides are thus a symptom and symbol of society as a shared aspiration.

I suggested above that the provision of train service to remote districts makes social sense even if it is economically “inefficient.” But this, of course, begs an important question. Social democrats will not get very far by proposing laudable social objectives that they themselves concede to cost more than the alternatives. We would end up acknowledging the virtues of social services, decrying their expense…and doing nothing. We need to rethink the devices we employ to assess all costs: social and economic alike.

Let me offer an example. It is cheaper to provide benevolent handouts to the poor than to guarantee them a full range of social services as of right. By “benevolent” I mean faith-based charity, private or independent initiative, income-dependent assistance in the form of food stamps, housing grants, clothing subsidies, and so on. But it is notoriously humiliating to be on the receiving end of that kind of assistance. The “means test” applied by the British authorities to victims of the 1930s depression is still recalled with distaste and even anger by an older generation.[5]

Conversely, it is not humiliating to be on the receiving end of a right. If you are entitled to unemployment payments, pension, disability, municipal housing, or any other publicly furnished assistance as of right—without anyone investigating to determine whether you have sunk low enough to “deserve” help—then you will not be embarrassed to accept it. However, such universal rights and entitlements are expensive.

But what if we treated humiliation itself as a cost, a charge to society? What if we decided to “quantify” the harm done when people are shamed by their fellow citizens before receiving the mere necessities of life? In other words, what if we factored into our estimates of productivity, efficiency, or well-being the difference between a humiliating handout and a benefit as of right? We might conclude that the provision of universal social services, public health insurance, or subsidized public transportation was actually a cost-effective way to achieve our common objectives. Such an exercise is inherently contentious: How do we quantify “humiliation”? What is the measurable cost of depriving isolated citizens of access to metropolitan resources? How much are we willing to pay for a good society? Unclear. But unless we ask such questions, how can we hope to devise answers?[6]

What do we mean when we speak of a “good society”? From a normative perspective we might begin with a moral “narrative” in which to situate our collective choices. Such a narrative would then substitute for the narrowly economic terms that constrain our present conversations. But defining our general purposes in that way is no simple matter.

In the past, social democracy unquestionably concerned itself with issues of right and wrong: all the more so because it inherited a pre-Marxist ethical vocabulary infused with Christian distaste for extremes of wealth and the worship of materialism. But such considerations were frequently trumped by ideological interrogations. Was capitalism doomed? If so, did a given policy advance its anticipated demise or risk postponing it? If capitalism was not doomed, then policy choices would have to be conceived from a different perspective. In either case the relevant question typically addressed the prospects of “the system” rather than the inherent virtues or defects of a given initiative. Such questions no longer preoccupy us. We are thus more directly confronted with the ethical implications of our choices.

What precisely is it that we find abhorrent in financial capitalism, or “commercial society” as the eighteenth century had it? What do we find instinctively amiss in our present arrangements and what can we do about them? What do we find unfair? What is it that offends our sense of propriety when faced with unrestrained lobbying by the wealthy at the expense of everyone else? What have we lost?

The answers to such questions should take the form of a moral critique of the inadequacies of the unrestricted market or the feckless state. We need to understand why they offend our sense of justice or equity. We need, in short, to return to the kingdom of ends. Here social democracy is of limited assistance, for its own response to the dilemmas of capitalism was merely a belated expression of Enlightenment moral discourse applied to “the social question.” Our problems are rather different.

We are entering, I believe, a new age of insecurity. The last such era, memorably analyzed by Keynes in The Economic Consequences of the Peace (1919), followed decades of prosperity and progress and a dramatic increase in the internationalization of life: “globalization” in all but name. As Keynes describes it, the commercial economy had spread around the world. Trade and communication were accelerating at an unprecedented rate. Before 1914, it was widely asserted that the logic of peaceful economic exchange would triumph over national self-interest. No one expected all this to come to an abrupt end. But it did.

We too have lived through an era of stability, certainty, and the illusion of indefinite economic improvement. But all that is now behind us. For the foreseeable future we shall be as economically insecure as we are culturally uncertain. We are assuredly less confident of our collective purposes, our environmental well-being, or our personal safety than at any time since World War II. We have no idea what sort of world our children will inherit, but we can no longer delude ourselves into supposing that it must resemble our own in reassuring ways.

We must revisit the ways in which our grandparents’ generation responded to comparable challenges and threats. Social democracy in Europe, the New Deal, and the Great Society here in the US were explicit responses to the insecurities and inequities of the age. Few in the West are old enough to know just what it means to watch our world collapse.[7] We find it hard to conceive of a complete breakdown of liberal institutions, an utter disintegration of the democratic consensus. But it was just such a breakdown that elicited the Keynes–Hayek debate and from which the Keynesian consensus and the social democratic compromise were born: the consensus and the compromise in which we grew up and whose appeal has been obscured by its very success.

If social democracy has a future, it will be as a social democracy of fear.[8] Rather than seeking to restore a language of optimistic progress, we should begin by reacquainting ourselves with the recent past. The first task of radical dissenters today is to remind their audience of the achievements of the twentieth century, along with the likely consequences of our heedless rush to dismantle them.

The left, to be quite blunt about it, has something to conserve. It is the right that has inherited the ambitious modernist urge to destroy and innovate in the name of a universal project. Social democrats, characteristically modest in style and ambition, need to speak more assertively of past gains. The rise of the social service state, the century-long construction of a public sector whose goods and services illustrate and promote our collective identity and common purposes, the institution of welfare as a matter of right and its provision as a social duty: these were no mean accomplishments.

That these accomplishments were no more than partial should not trouble us. If we have learned nothing else from the twentieth century, we should at least have grasped that the more perfect the answer, the more terrifying its consequences. Imperfect improvements upon unsatisfactory circumstances are the best that we can hope for, and probably all we should seek. Others have spent the last three decades methodically unraveling and destabilizing those same improvements: this should make us much angrier than we are. It ought also to worry us, if only on prudential grounds: Why have we been in such a hurry to tear down the dikes laboriously set in place by our predecessors? Are we so sure that there are no floods to come?

A social democracy of fear is something to fight for. To abandon the labors of a century is to betray those who came before us as well as generations yet to come. It would be pleasing—but misleading—to report that social democracy, or something like it, represents the future that we would paint for ourselves in an ideal world. It does not even represent the ideal past. But among the options available to us in the present, it is better than anything else to hand. In Orwell’s words, reflecting in Homage to Catalonia upon his recent experiences in revolutionary Barcelona:

“There was much in it that I did not understand, in some ways I did not even like it, but I recognized it immediately as a state of affairs worth fighting for.”

I believe this to be no less true of whatever we can retrieve from the twentieth-century memory of social democracy.

December 17, 2009

[1] See “High Gini Is Loosed Upon Asia,” The Economist, August 11, 2007.

[2] See Massimo Florio, The Great Divestiture: Evaluating the Welfare Impact of the British Privatizations, 1979–1997 (MIT Press, 2004), p. 163. For Harvard, see “Harvard Endowment Posts Solid Positive Return,” Harvard Gazette, September 12, 2008. For the GDP of Paraguay or Bosnia-Herzegovina, see www.cia.gov/library/publications/the-world-factbook/geos/xx.html.

[3] Bernard Williams, Philosophy as a Humanistic Discipline (Princeton University Press, 2006), p. 144.

[4] For these figures see my “’Twas a Famous Victory,” The New York Review, July 19, 2001.

[5] For comparable recollections of humiliating handouts, see The Autobiography of Malcolm X (Ballantine, 1987). I am grateful to Casey Selwyn for pointing this out to me.

[6] The international Commission on Measurement of Economic Performance and Social Progress, chaired by Joseph Stiglitz and advised by Amartya Sen, recently recommended a different approach to measuring collective well-being. But despite the admirable originality of their proposals, neither Stiglitz nor Sen went much beyond suggesting better ways to assess economic performance; non-economic concerns did not figure prominently in their report. See www.stiglitz-sen-fitoussi.fr/en/index.htm.

[7] The exception, of course, is Bosnia, whose citizens are all too well aware of just what such a collapse entails.

[8] By analogy with “The Liberalism of Fear,” Judith Shklar’s penetrating essay on political inequality and power.